University of Michigan-Dearborn

Researchers at the University of Michigan-Dearborn and UMTRI developed an automated, interpretable framework using a fine-tuned Llama 3.2 1B model to predict Driver Hazardous Actions (DHAs) from crash narratives and structured data. This approach achieved 80% accuracy in classifying DHAs and provided quantitative insights into how hypothetical scenarios like driver distraction and teen drivers probabilistically shift DHA classifications.

In clinical operations, teamwork can be the crucial factor that determines

the final outcome. Prior studies have shown that sufficient collaboration is

the key factor that determines the outcome of an operation. To understand how

the team practices teamwork during the operation, we collected CliniDial from

simulations of medical operations. CliniDial includes the audio data and its

transcriptions, the simulated physiology signals of the patient manikins, and

how the team operates from two camera angles. We annotate behavior codes

following an existing framework to understand the teamwork process for

CliniDial. We pinpoint three main characteristics of our dataset, including its

label imbalances, rich and natural interactions, and multiple modalities, and

conduct experiments to test existing LLMs' capabilities on handling data with

these characteristics. Experimental results show that CliniDial poses

significant challenges to the existing models, inviting future effort on

developing methods that can deal with real-world clinical data. We open-source

the codebase at this https URL

Architectural Transformations and Emerging Verification Demands in AI-Enabled Cyber-Physical Systems

Architectural Transformations and Emerging Verification Demands in AI-Enabled Cyber-Physical Systems

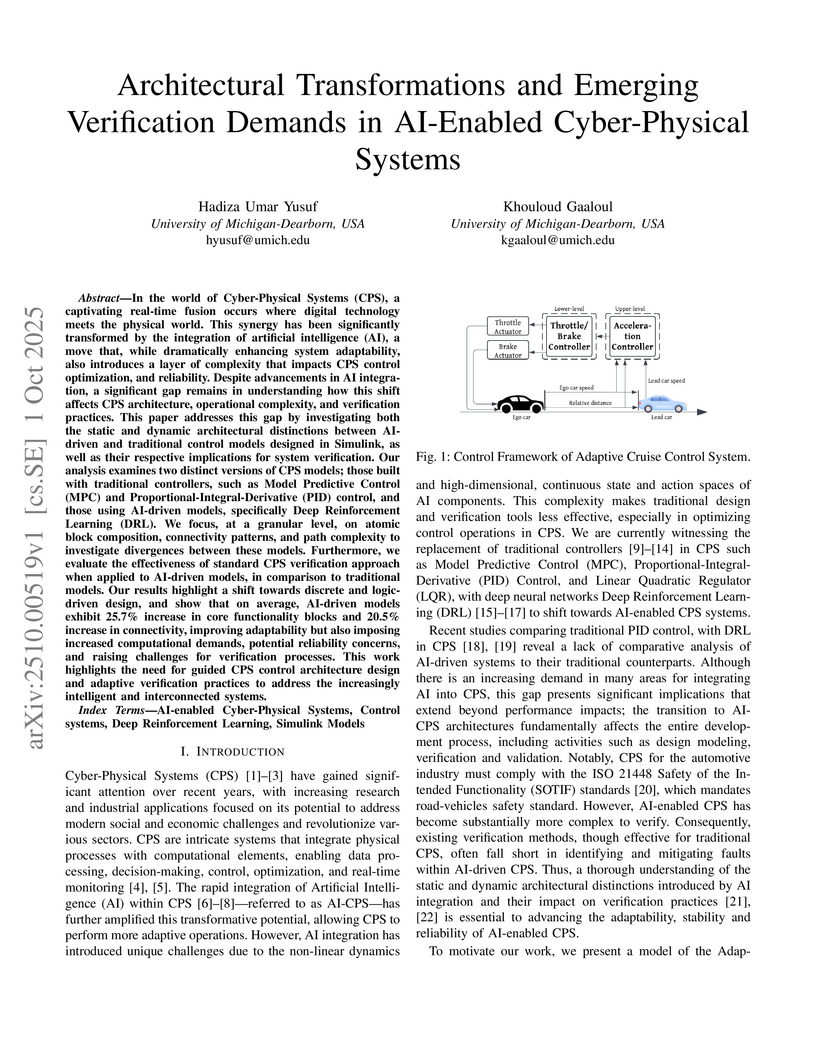

In the world of Cyber-Physical Systems (CPS), a captivating real-time fusion occurs where digital technology meets the physical world. This synergy has been significantly transformed by the integration of artificial intelligence (AI), a move that dramatically enhances system adaptability and introduces a layer of complexity that impacts CPS control optimization and reliability. Despite advancements in AI integration, a significant gap remains in understanding how this shift affects CPS architecture, operational complexity, and verification practices. The extended abstract addresses this gap by investigating architectural distinctions between AI-driven and traditional control models designed in Simulink and their respective implications for system verification.

17 Jun 2024

Performance bugs challenge software development, degrading performance and wasting computational resources. Software developers invest substantial effort in addressing these issues. Curating these performance bugs can offer valuable insights to the software engineering research community, aiding in developing new mitigation strategies. However, there is no large-scale open-source performance bugs dataset available. To bridge this gap, we propose PerfCurator, a repository miner that collects performance bug-related commits at scale. PerfCurator employs PcBERT-KD, a 125M parameter BERT model trained to classify performance bug-related commits. Our evaluation shows PcBERT-KD achieves accuracy comparable to 7 billion parameter LLMs but with significantly lower computational overhead, enabling cost-effective deployment on CPU clusters. Utilizing PcBERT-KD as the core component, we deployed PerfCurator on a 50-node CPU cluster to mine GitHub repositories. This extensive mining operation resulted in the construction of a large-scale dataset comprising 114K performance bug-fix commits in Python, 217.9K in C++, and 76.6K in Java. Our results demonstrate that this large-scale dataset significantly enhances the effectiveness of data-driven performance bug detection systems.

Deep neural networks (DNNs) are increasingly being deployed in high-stakes applications, from self-driving cars to biometric authentication. However, their unpredictable and unreliable behaviors in real-world settings require new approaches to characterize and ensure their reliability.

This paper introduces DeepProv, a novel and customizable system designed to capture and characterize the runtime behavior of DNNs during inference by using their underlying graph structure. Inspired by system audit provenance graphs, DeepProv models the computational information flow of a DNN's inference process through Inference Provenance Graphs (IPGs). These graphs provide a detailed structural representation of the behavior of DNN, allowing both empirical and structural analysis. DeepProv uses these insights to systematically repair DNNs for specific objectives, such as improving robustness, privacy, or fairness.

We instantiate DeepProv with adversarial robustness as the goal of model repair and conduct extensive case studies to evaluate its effectiveness. Our results demonstrate its effectiveness and scalability across diverse classification tasks, attack scenarios, and model complexities. DeepProv automatically identifies repair actions at the node and edge-level within IPGs, significantly enhancing the robustness of the model. In particular, applying DeepProv repair strategies to just a single layer of a DNN yields an average 55% improvement in adversarial accuracy. Moreover, DeepProv complements existing defenses, achieving substantial gains in adversarial robustness. Beyond robustness, we demonstrate the broader potential of DeepProv as an adaptable system to characterize DNN behavior in other critical areas, such as privacy auditing and fairness analysis.

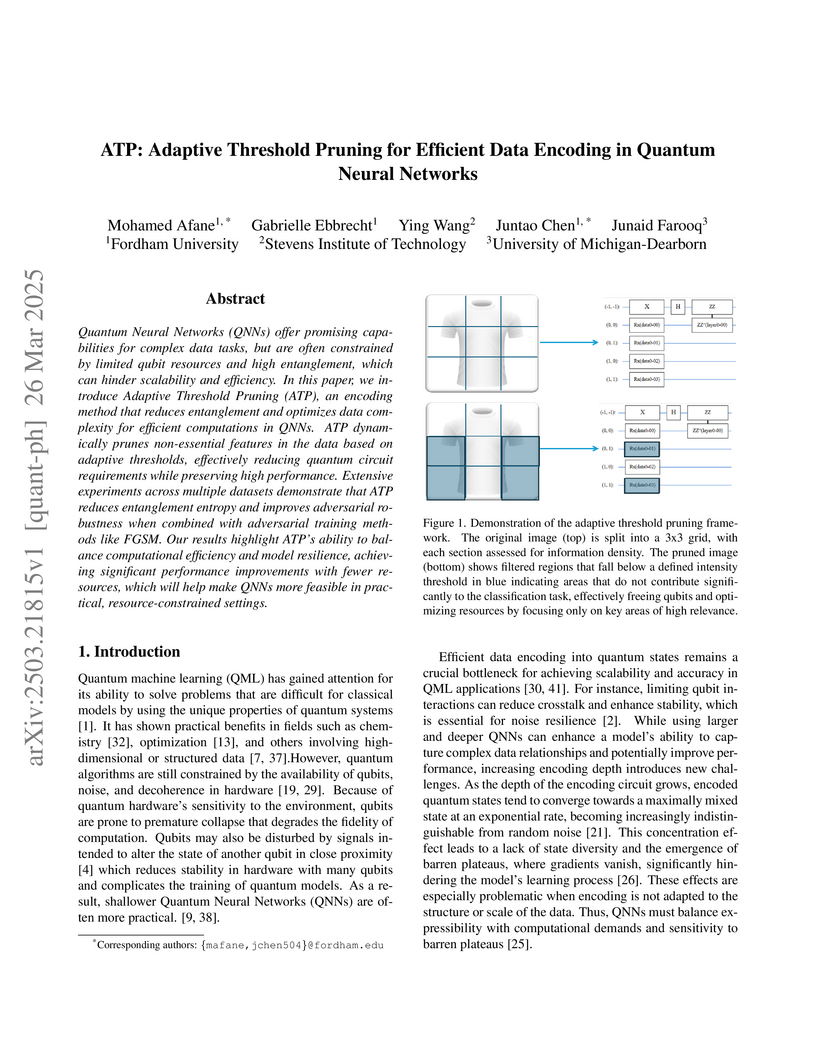

Researchers from Fordham University and collaborating institutions introduce Adaptive Threshold Pruning (ATP), a data encoding method for quantum neural networks that reduces qubit requirements while maintaining model performance, achieving 7% improved accuracy on IBM's quantum hardware compared to traditional encoding approaches.

End-to-end vision-based imitation learning has demonstrated promising results

in autonomous driving by learning control commands directly from expert

demonstrations. However, traditional approaches rely on either regressionbased

models, which provide precise control but lack confidence estimation, or

classification-based models, which offer confidence scores but suffer from

reduced precision due to discretization. This limitation makes it challenging

to quantify the reliability of predicted actions and apply corrections when

necessary. In this work, we introduce a dual-head neural network architecture

that integrates both regression and classification heads to improve decision

reliability in imitation learning. The regression head predicts continuous

driving actions, while the classification head estimates confidence, enabling a

correction mechanism that adjusts actions in low-confidence scenarios,

enhancing driving stability. We evaluate our approach in a closed-loop setting

within the CARLA simulator, demonstrating its ability to detect uncertain

actions, estimate confidence, and apply real-time corrections. Experimental

results show that our method reduces lane deviation and improves trajectory

accuracy by up to 50%, outperforming conventional regression-only models. These

findings highlight the potential of classification-guided confidence estimation

in enhancing the robustness of vision-based imitation learning for autonomous

driving. The source code is available at

this https URL

Georgia Institute of Technology

Georgia Institute of Technology University of Michigan

University of Michigan The Hong Kong Polytechnic University

The Hong Kong Polytechnic University ETH Zürich

ETH Zürich EPFLUniversity of ConnecticutIowa State UniversitySandia National LaboratoriesUniversity of Michigan-DearbornIndiana University—Purdue University IndianapolisCenter for Advances in Reliability and Safety (CAiRS)

EPFLUniversity of ConnecticutIowa State UniversitySandia National LaboratoriesUniversity of Michigan-DearbornIndiana University—Purdue University IndianapolisCenter for Advances in Reliability and Safety (CAiRS)On top of machine learning models, uncertainty quantification (UQ) functions as an essential layer of safety assurance that could lead to more principled decision making by enabling sound risk assessment and management. The safety and reliability improvement of ML models empowered by UQ has the potential to significantly facilitate the broad adoption of ML solutions in high-stakes decision settings, such as healthcare, manufacturing, and aviation, to name a few. In this tutorial, we aim to provide a holistic lens on emerging UQ methods for ML models with a particular focus on neural networks and the applications of these UQ methods in tackling engineering design as well as prognostics and health management problems. Toward this goal, we start with a comprehensive classification of uncertainty types, sources, and causes pertaining to UQ of ML models. Next, we provide a tutorial-style description of several state-of-the-art UQ methods: Gaussian process regression, Bayesian neural network, neural network ensemble, and deterministic UQ methods focusing on spectral-normalized neural Gaussian process. Established upon the mathematical formulations, we subsequently examine the soundness of these UQ methods quantitatively and qualitatively (by a toy regression example) to examine their strengths and shortcomings from different dimensions. Then, we review quantitative metrics commonly used to assess the quality of predictive uncertainty in classification and regression problems. Afterward, we discuss the increasingly important role of UQ of ML models in solving challenging problems in engineering design and health prognostics. Two case studies with source codes available on GitHub are used to demonstrate these UQ methods and compare their performance in the life prediction of lithium-ion batteries at the early stage and the remaining useful life prediction of turbofan engines.

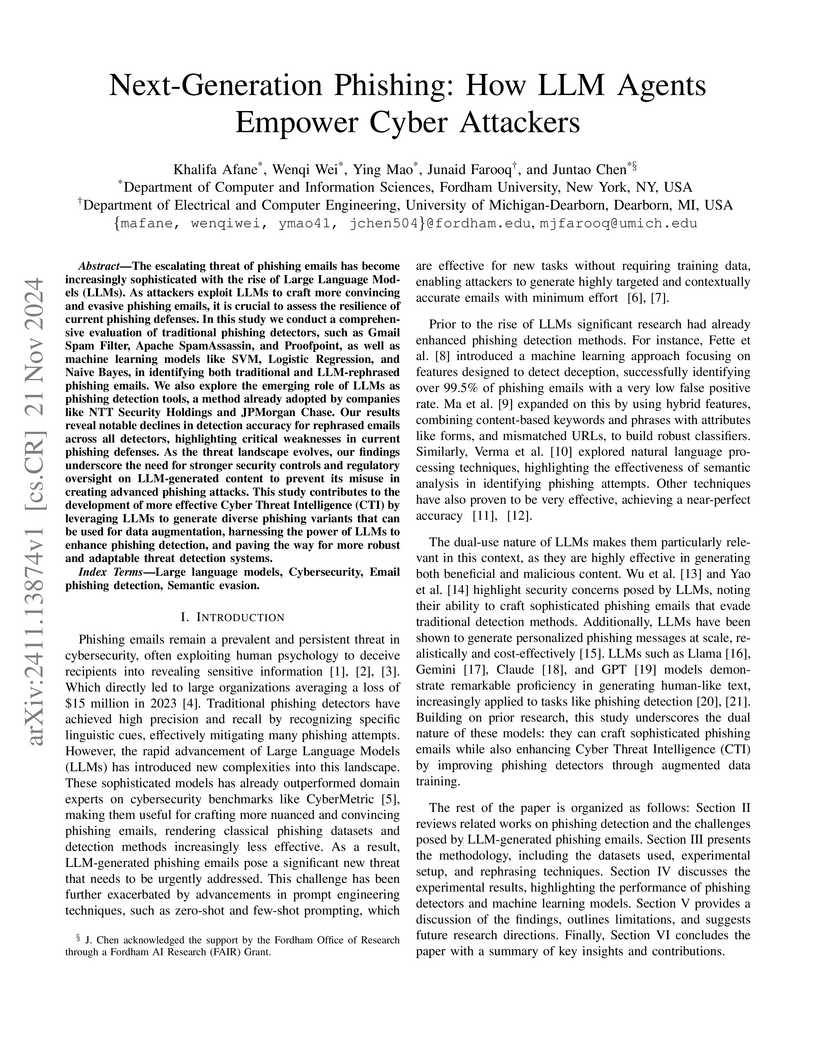

Researchers from Fordham University and the University of Michigan-Dearborn analyzed how Large Language Models can create sophisticated phishing emails with higher evasion rates while also exploring their potential for strengthening detection systems. The study found that LLM-rephrased emails achieved a 16.4% evasion rate, but training detection models on LLM-augmented datasets significantly improved their ability to detect these advanced threats.

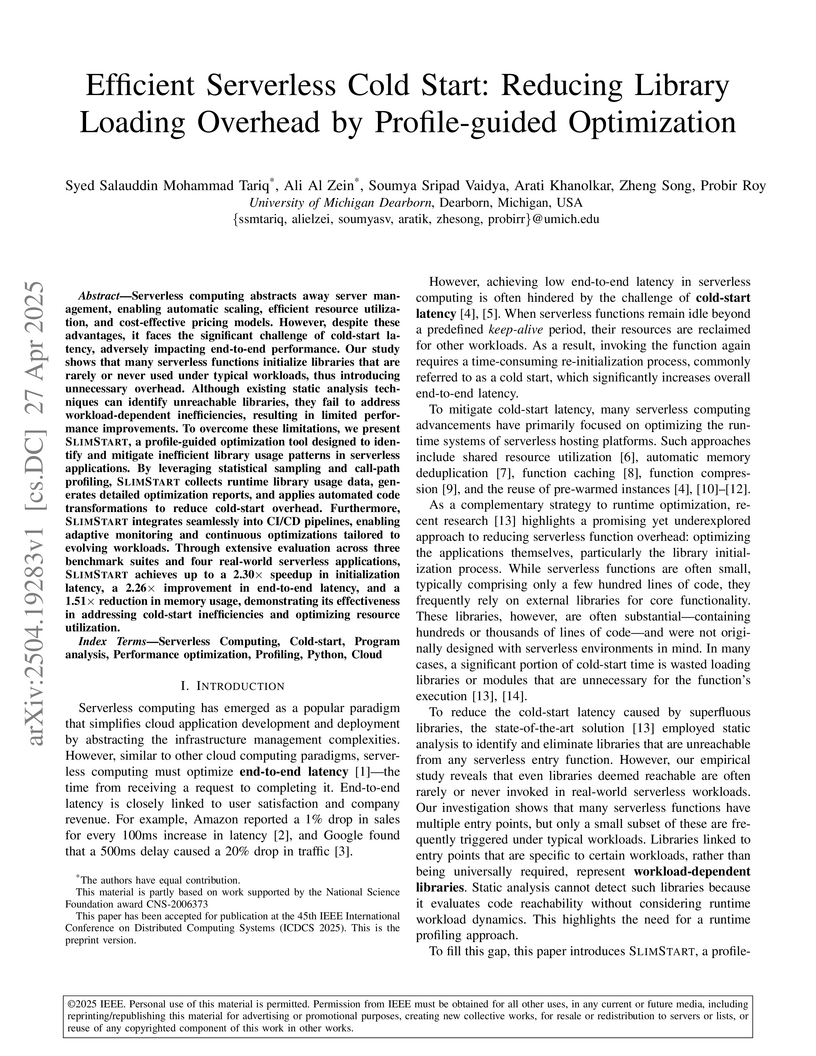

Serverless computing abstracts away server management, enabling automatic

scaling, efficient resource utilization, and cost-effective pricing models.

However, despite these advantages, it faces the significant challenge of

cold-start latency, adversely impacting end-to-end performance. Our study shows

that many serverless functions initialize libraries that are rarely or never

used under typical workloads, thus introducing unnecessary overhead. Although

existing static analysis techniques can identify unreachable libraries, they

fail to address workload-dependent inefficiencies, resulting in limited

performance improvements. To overcome these limitations, we present SLIMSTART,

a profile-guided optimization tool designed to identify and mitigate

inefficient library usage patterns in serverless applications. By leveraging

statistical sampling and call-path profiling, SLIMSTART collects runtime

library usage data, generates detailed optimization reports, and applies

automated code transformations to reduce cold-start overhead. Furthermore,

SLIMSTART integrates seamlessly into CI/CD pipelines, enabling adaptive

monitoring and continuous optimizations tailored to evolving workloads. Through

extensive evaluation across three benchmark suites and four real-world

serverless applications, SLIMSTART achieves up to a 2.30X speedup in

initialization latency, a 2.26X improvement in end-to-end latency, and a 1.51X

reduction in memory usage, demonstrating its effectiveness in addressing

cold-start inefficiencies and optimizing resource utilization.

03 Nov 2022

Extended Reality (XR) includes Virtual Reality (VR), Augmented Reality (AR)

and Mixed Reality (MR). XR is an emerging technology that simulates a realistic

environment for users. XR techniques have provided revolutionary user

experiences in various application scenarios (e.g., training, education,

product/architecture design, gaming, remote conference/tour, etc.). Due to the

high computational cost of rendering real-time animation in limited-resource

devices and constant interaction with user activity, XR applications often face

performance bottlenecks, and these bottlenecks create a negative impact on the

user experience of XR software. Thus, performance optimization plays an

essential role in many industry-standard XR applications. Even though

identifying performance bottlenecks in traditional software (e.g., desktop

applications) is a widely explored topic, those approaches cannot be directly

applied within XR software due to the different nature of XR applications.

Moreover, XR applications developed in different frameworks such as Unity and

Unreal Engine show different performance bottleneck patterns and thus,

bottleneck patterns of Unity projects can't be applied for Unreal Engine

(UE)-based XR projects. To fill the knowledge gap for XR performance

optimizations of Unreal Engine-based XR projects, we present the first

empirical study on performance optimizations from seven UE XR projects, 78 UE

XR discussion issues and three sources of UE documentation. Our analysis

identified 14 types of performance bugs, including 12 types of bugs related to

UE settings issues and two types of CPP source code-related issues. To further

assist developers in detecting performance bugs based on the identified bug

patterns, we also developed a static analyzer, UEPerfAnalyzer, that can detect

performance bugs in both configuration files and source code.

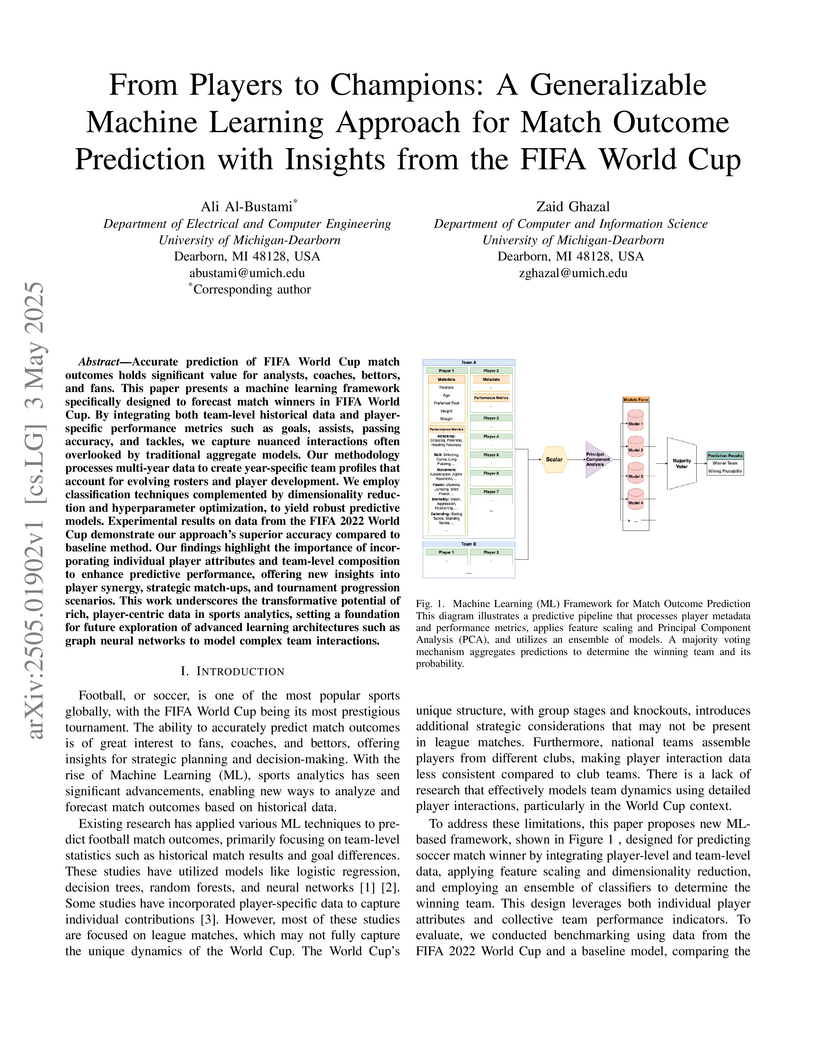

Accurate prediction of FIFA World Cup match outcomes holds significant value

for analysts, coaches, bettors, and fans. This paper presents a machine

learning framework specifically designed to forecast match winners in FIFA

World Cup. By integrating both team-level historical data and player-specific

performance metrics such as goals, assists, passing accuracy, and tackles, we

capture nuanced interactions often overlooked by traditional aggregate models.

Our methodology processes multi-year data to create year-specific team profiles

that account for evolving rosters and player development. We employ

classification techniques complemented by dimensionality reduction and

hyperparameter optimization, to yield robust predictive models. Experimental

results on data from the FIFA 2022 World Cup demonstrate our approach's

superior accuracy compared to baseline method. Our findings highlight the

importance of incorporating individual player attributes and team-level

composition to enhance predictive performance, offering new insights into

player synergy, strategic match-ups, and tournament progression scenarios. This

work underscores the transformative potential of rich, player-centric data in

sports analytics, setting a foundation for future exploration of advanced

learning architectures such as graph neural networks to model complex team

interactions.

26 Aug 2025

Peer-to-peer (P2P) energy trading is becoming central to modern distribution systems as rooftop PV and home energy management systems become pervasive, yet most existing market and reinforcement learning designs emphasize efficiency or private profit and offer little real-time guidance to ensure equitable outcomes under uncertainty. To address this gap, a fairness-aware multiagent reinforcement learning framework, FairMarket-RL, is proposed in which a large language model (LLM) critic shapes bidding policies within a continuous double auction under partial observability and discrete price-quantity actions. After each trading slot, the LLM returns normalized fairness scores Fairness-to-Grid (FTG), Fairness-Between-Sellers (FBS), and Fairness-of-Pricing (FPP) that are integrated into the reward via ramped coefficients and tunable scaling, so that fairness guidance complements, rather than overwhelms, economic incentives. The environment models realistic residential load and PV profiles and enforce hard constraints on prices, physical feasibility, and policy-update stability. Across a progression of experiments from a small pilot to a larger simulated community and a mixed-asset real-world dataset, the framework shifts exchanges toward local P2P trades, lowers consumer costs relative to grid-only procurement, sustains strong fairness across participants, and preserves utility viability. Sensitivity analyses over solar availability and aggregate demand further indicate robust performance, suggesting a scalable, LLM-guided pathway to decentralized electricity markets that are economically efficient, socially equitable, and technically sound.

24 Sep 2024

The high penetration of distributed energy resources (DERs) in modern smart power systems introduces unforeseen uncertainties for the electricity sector, leading to increased complexity and difficulty in the operation and control of power systems. As a cutting-edge machine learning technology, deep reinforcement learning (DRL) has been widely implemented in recent years to handle the uncertainty in power systems. However, in critical infrastructures such as power systems, safety issues always receive top priority, while DRL may not always meet the safety requirements of power system operators. The concept of safe reinforcement learning (safe RL) is emerging as a potential solution to overcome the shortcomings of conventional DRL in the operation and control of power systems. This study provides a rigorous review of the latest research efforts focused on safe RL to derive power system control policies while accounting for the unique safety requirements of power grids. Furthermore, this study highlights various safe RL algorithms applied in diverse applications within the power system sector, from single grid-connected power converters, residential smart homes, and buildings to large power distribution networks. For all methods outlined, a discussion on their bottlenecks, research challenges, and potential opportunities in the operation and control of power system applications is also presented. This review aims to support research in the area of safe RL algorithms, embracing smart power system operation with safety constraints amid high uncertainty from DERs.

As an emerging technology in the era of Industry 4.0, digital twin is gaining unprecedented attention because of its promise to further optimize process design, quality control, health monitoring, decision and policy making, and more, by comprehensively modeling the physical world as a group of interconnected digital models. In a two-part series of papers, we examine the fundamental role of different modeling techniques, twinning enabling technologies, and uncertainty quantification and optimization methods commonly used in digital twins. This first paper presents a thorough literature review of digital twin trends across many disciplines currently pursuing this area of research. Then, digital twin modeling and twinning enabling technologies are further analyzed by classifying them into two main categories: physical-to-virtual, and virtual-to-physical, based on the direction in which data flows. Finally, this paper provides perspectives on the trajectory of digital twin technology over the next decade, and introduces a few emerging areas of research which will likely be of great use in future digital twin research. In part two of this review, the role of uncertainty quantification and optimization are discussed, a battery digital twin is demonstrated, and more perspectives on the future of digital twin are shared.

Easy access to audio-visual content on social media, combined with the availability of modern tools such as Tensorflow or Keras, open-source trained models, and economical computing infrastructure, and the rapid evolution of deep-learning (DL) methods, especially Generative Adversarial Networks (GAN), have made it possible to generate deepfakes to disseminate disinformation, revenge porn, financial frauds, hoaxes, and to disrupt government functioning. The existing surveys have mainly focused on the detection of deepfake images and videos. This paper provides a comprehensive review and detailed analysis of existing tools and machine learning (ML) based approaches for deepfake generation and the methodologies used to detect such manipulations for both audio and visual deepfakes. For each category of deepfake, we discuss information related to manipulation approaches, current public datasets, and key standards for the performance evaluation of deepfake detection techniques along with their results. Additionally, we also discuss open challenges and enumerate future directions to guide future researchers on issues that need to be considered to improve the domains of both deepfake generation and detection. This work is expected to assist the readers in understanding the creation and detection mechanisms of deepfakes, along with their current limitations and future direction.

Voice-cloning (VC) systems have seen an exceptional increase in the realism of synthesized speech in recent years. The high quality of synthesized speech and the availability of low-cost VC services have given rise to many potential abuses of this technology. Several detection methodologies have been proposed over the years that can detect voice spoofs with reasonably good accuracy. However, these methodologies are mostly evaluated on clean audio databases, such as ASVSpoof 2019. This paper evaluates SOTA Audio Spoof Detection approaches in the presence of laundering attacks. In that regard, a new laundering attack database, called the ASVSpoof Laundering Database, is created. This database is based on the ASVSpoof 2019 (LA) eval database comprising a total of 1388.22 hours of audio recordings. Seven SOTA audio spoof detection approaches are evaluated on this laundered database. The results indicate that SOTA systems perform poorly in the presence of aggressive laundering attacks, especially reverberation and additive noise attacks. This suggests the need for robust audio spoof detection.

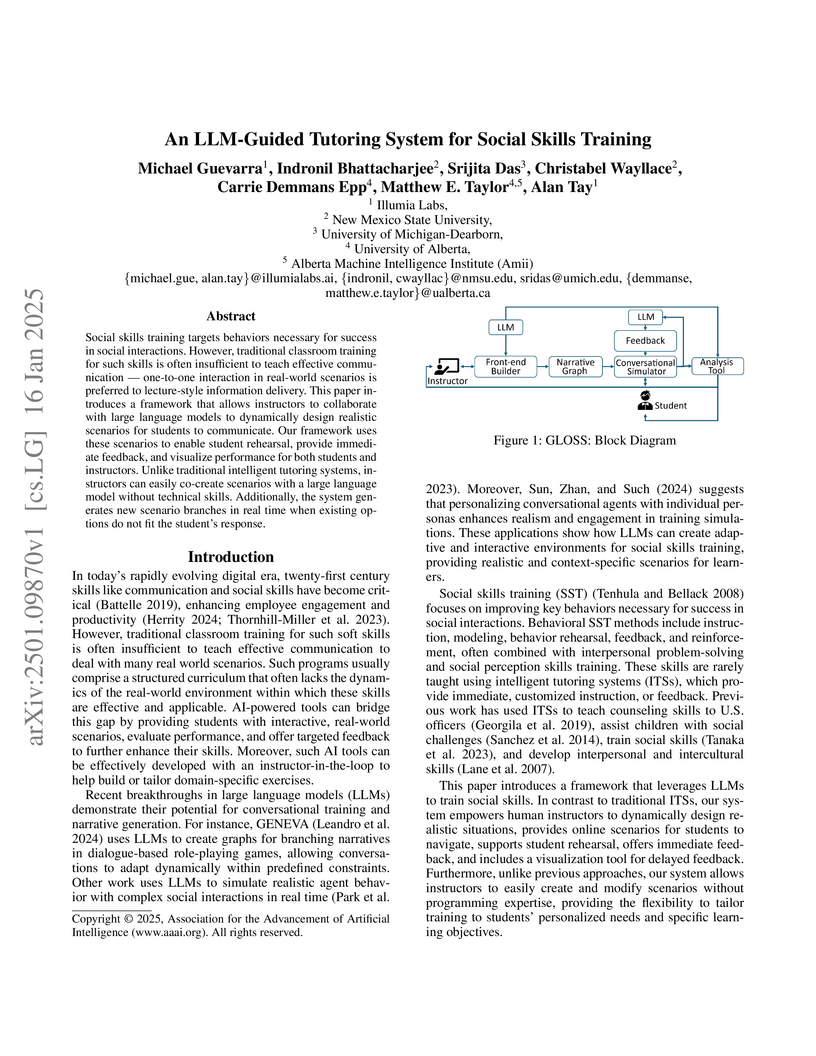

Social skills training targets behaviors necessary for success in social interactions. However, traditional classroom training for such skills is often insufficient to teach effective communication -- one-to-one interaction in real-world scenarios is preferred to lecture-style information delivery. This paper introduces a framework that allows instructors to collaborate with large language models to dynamically design realistic scenarios for students to communicate. Our framework uses these scenarios to enable student rehearsal, provide immediate feedback, and visualize performance for both students and instructors. Unlike traditional intelligent tutoring systems, instructors can easily co-create scenarios with a large language model without technical skills. Additionally, the system generates new scenario branches in real time when existing options do not fit the student's response.

23 Feb 2025

The growing popularity of machine learning (ML) and the integration of ML

components with other software artifacts has led to the use of continuous

integration and delivery (CI/CD) tools, such as Travis CI, GitHub Actions, etc.

that enable faster integration and testing for ML projects. Such CI/CD

configurations and services require synchronization during the life cycle of

the projects. Several works discussed how CI/CD configuration and services

change during their usage in traditional software systems. However, there is

very limited knowledge of how CI/CD configuration and services change in ML

projects.

To fill this knowledge gap, this work presents the first empirical analysis

of how CI/CD configuration evolves for ML software systems. We manually

analyzed 343 commits collected from 508 open-source ML projects to identify

common CI/CD configuration change categories in ML projects and devised a

taxonomy of 14 co-changes in CI/CD and ML components. Moreover, we developed a

CI/CD configuration change clustering tool that identified frequent CI/CD

configuration change patterns in 15,634 commits. Furthermore, we measured the

expertise of ML developers who modify CI/CD configurations. Based on this

analysis, we found that 61.8% of commits include a change to the build policy

and minimal changes related to performance and maintainability compared to

general open-source projects. Additionally, the co-evolution analysis

identified that CI/CD configurations, in many cases, changed unnecessarily due

to bad practices such as the direct inclusion of dependencies and a lack of

usage of standardized testing frameworks. More practices were found through the

change patterns analysis consisting of using deprecated settings and reliance

on a generic build language. Finally, our developer's expertise analysis

suggests that experienced developers are more inclined to modify CI/CD

configurations.

In this data article, we introduce the Multi-Modal Event-based Vehicle Detection and Tracking (MEVDT) dataset. This dataset provides a synchronized stream of event data and grayscale images of traffic scenes, captured using the Dynamic and Active-Pixel Vision Sensor (DAVIS) 240c hybrid event-based camera. MEVDT comprises 63 multi-modal sequences with approximately 13k images, 5M events, 10k object labels, and 85 unique object tracking trajectories. Additionally, MEVDT includes manually annotated ground truth labels \unicodex2014 consisting of object classifications, pixel-precise bounding boxes, and unique object IDs \unicodex2014 which are provided at a labeling frequency of 24 Hz. Designed to advance the research in the domain of event-based vision, MEVDT aims to address the critical need for high-quality, real-world annotated datasets that enable the development and evaluation of object detection and tracking algorithms in automotive environments.

There are no more papers matching your filters at the moment.