Ghent University

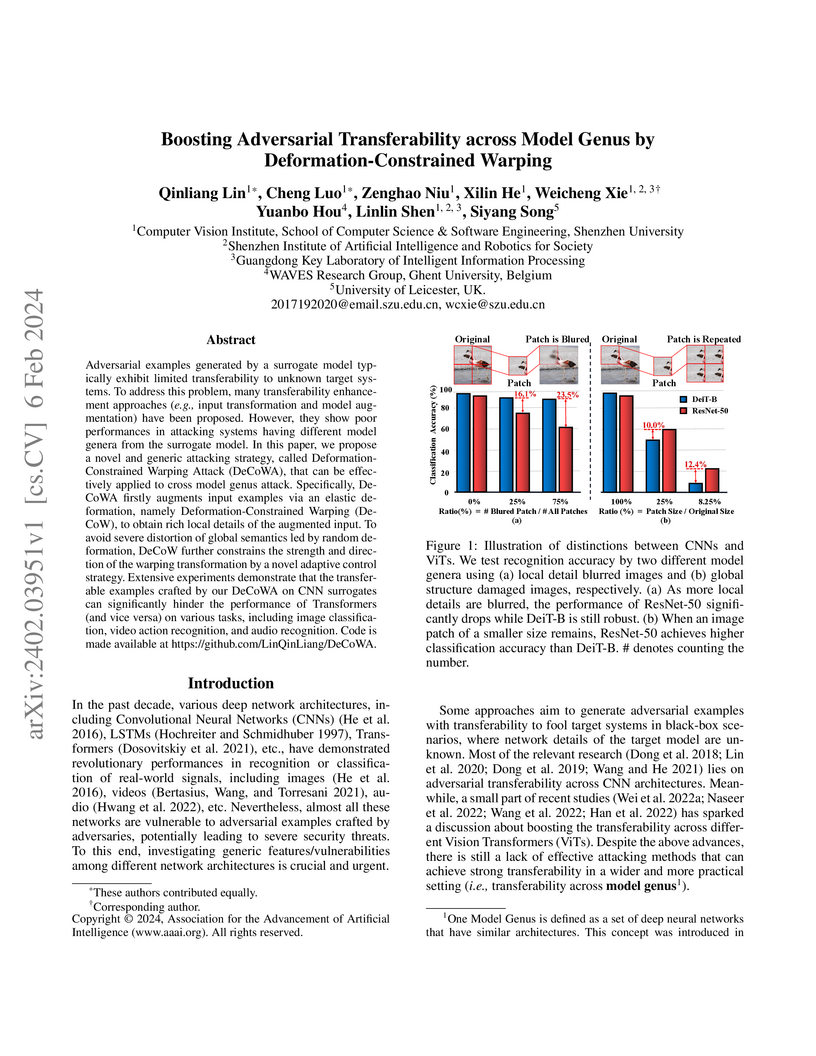

Researchers developed Deformation-Constrained Warping Attack (DeCoWA), a method that significantly boosts adversarial example transferability across different model architectures, particularly between Convolutional Neural Networks (CNNs) and Vision Transformers (ViTs). This approach demonstrated state-of-the-art attack performance across image, video, and audio modalities, improving cross-genus transferability by over 14% on average compared to prior methods.

Current speaker verification techniques rely on a neural network to extract speaker representations. The successful x-vector architecture is a Time Delay Neural Network (TDNN) that applies statistics pooling to project variable-length utterances into fixed-length speaker characterizing embeddings. In this paper, we propose multiple enhancements to this architecture based on recent trends in the related fields of face verification and computer vision. Firstly, the initial frame layers can be restructured into 1-dimensional Res2Net modules with impactful skip connections. Similarly to SE-ResNet, we introduce Squeeze-and-Excitation blocks in these modules to explicitly model channel interdependencies. The SE block expands the temporal context of the frame layer by rescaling the channels according to global properties of the recording. Secondly, neural networks are known to learn hierarchical features, with each layer operating on a different level of complexity. To leverage this complementary information, we aggregate and propagate features of different hierarchical levels. Finally, we improve the statistics pooling module with channel-dependent frame attention. This enables the network to focus on different subsets of frames during each of the channel's statistics estimation. The proposed ECAPA-TDNN architecture significantly outperforms state-of-the-art TDNN based systems on the VoxCeleb test sets and the 2019 VoxCeleb Speaker Recognition Challenge.

SkillMatch introduces the first publicly available benchmark for intrinsic evaluation of professional skill relatedness, along with a self-supervised learning approach that adapts pre-trained language models for this domain. The fine-tuned Sentence-BERT model achieved the highest performance with an AUC-PR of 0.969 and MRR of 0.357, demonstrating the effectiveness of domain-specific adaptation for skill representation.

04 Oct 2024

Quantum error correction with biased-noise qubits can drastically reduce the

hardware overhead for universal and fault-tolerant quantum computation. Cat

qubits are a promising realization of biased-noise qubits as they feature an

exponential error bias inherited from their non-local encoding in the phase

space of a quantum harmonic oscillator. To confine the state of an oscillator

to the cat qubit manifold, two main approaches have been considered so far: a

Kerr-based Hamiltonian confinement with high gate performances, and a

dissipative confinement with robust protection against a broad range of noise

mechanisms. We introduce a new combined dissipative and Hamiltonian confinement

scheme based on two-photon dissipation together with a Two-Photon Exchange

(TPE) Hamiltonian. The TPE Hamiltonian is similar to Kerr nonlinearity, but

unlike the Kerr it only induces a bounded distinction between even- and

odd-photon eigenstates, a highly beneficial feature for protecting the cat

qubits with dissipative mechanisms. Using this combined confinement scheme, we

demonstrate fast and bias-preserving gates with drastically improved

performance compared to dissipative or Hamiltonian schemes. In addition, this

combined scheme can be implemented experimentally with only minor modifications

of existing dissipative cat qubit experiments.

We introduce DeepProbLog, a probabilistic logic programming language that incorporates deep learning by means of neural predicates. We show how existing inference and learning techniques can be adapted for the new language. Our experiments demonstrate that DeepProbLog supports both symbolic and subsymbolic representations and inference, 1) program induction, 2) probabilistic (logic) programming, and 3) (deep) learning from examples. To the best of our knowledge, this work is the first to propose a framework where general-purpose neural networks and expressive probabilistic-logical modeling and reasoning are integrated in a way that exploits the full expressiveness and strengths of both worlds and can be trained end-to-end based on examples.

Understanding labor market dynamics is essential for policymakers, employers, and job seekers. However, comprehensive datasets that capture real-world career trajectories are scarce. In this paper, we introduce JobHop, a large-scale public dataset derived from anonymized resumes provided by VDAB, the public employment service in Flanders, Belgium. Utilizing Large Language Models (LLMs), we process unstructured resume data to extract structured career information, which is then normalized to standardized ESCO occupation codes using a multi-label classification model. This results in a rich dataset of over 1.67 million work experiences, extracted from and grouped into more than 361,000 user resumes and mapped to standardized ESCO occupation codes, offering valuable insights into real-world occupational transitions. This dataset enables diverse applications, such as analyzing labor market mobility, job stability, and the effects of career breaks on occupational transitions. It also supports career path prediction and other data-driven decision-making processes. To illustrate its potential, we explore key dataset characteristics, including job distributions, career breaks, and job transitions, demonstrating its value for advancing labor market research.

Researchers at Ghent University developed FOCUS, an object-centric world model designed for robotics manipulation that learns structured representations of scenes by focusing on individual objects. This approach enables faster policy learning for dense-reward tasks, guides more meaningful exploration in sparse-reward settings, and accurately reconstructs object information in both simulated and real-world environments.

17 Nov 2025

Quantum complexity quantifies the difficulty of preparing a state or implementing a unitary transformation with limited resources. Applications range from quantum computation to condensed matter physics and quantum gravity. We seek to bridge the approaches of these fields, which define and study complexity using different frameworks and tools. We describe several definitions of complexity, along with their key properties. In quantum information theory, we focus on complexity growth in random quantum circuits. In quantum many-body systems and quantum field theory (QFT), we discuss a geometric definition of complexity in terms of geodesics on the unitary group. In dynamical systems, we explore a definition of complexity in terms of state or operator spreading, as well as concepts from tensor-networks. We also outline applications to simple quantum systems, quantum many-body models, and QFTs including conformal field theories (CFTs). Finally, we explain the proposed relationship between complexity and gravitational observables within the holographic anti-de Sitter (AdS)/CFT correspondence.

Eyke Hüllermeier and Willem Waegeman present a comprehensive overview distinguishing aleatoric and epistemic uncertainty in machine learning, surveying existing methods and formalisms for their representation and quantification. Their work provides a conceptual framework for developing more reliable AI systems capable of robustly handling inherent randomness and model-related ignorance.

this http URL is a Julia-based software package for tensor computations, especially focusing on tensors with internal symmetries. This paper introduces the design philosophy, core functionalities, and distinctive features, including how to handle abelian, non-abelian, and anyonic symmetries through the ``TensorMap'' type. We highlight the software's flexibility, performance, and its capability to extend to new tensor types and symmetries, illustrating its practical applications through select case studies.

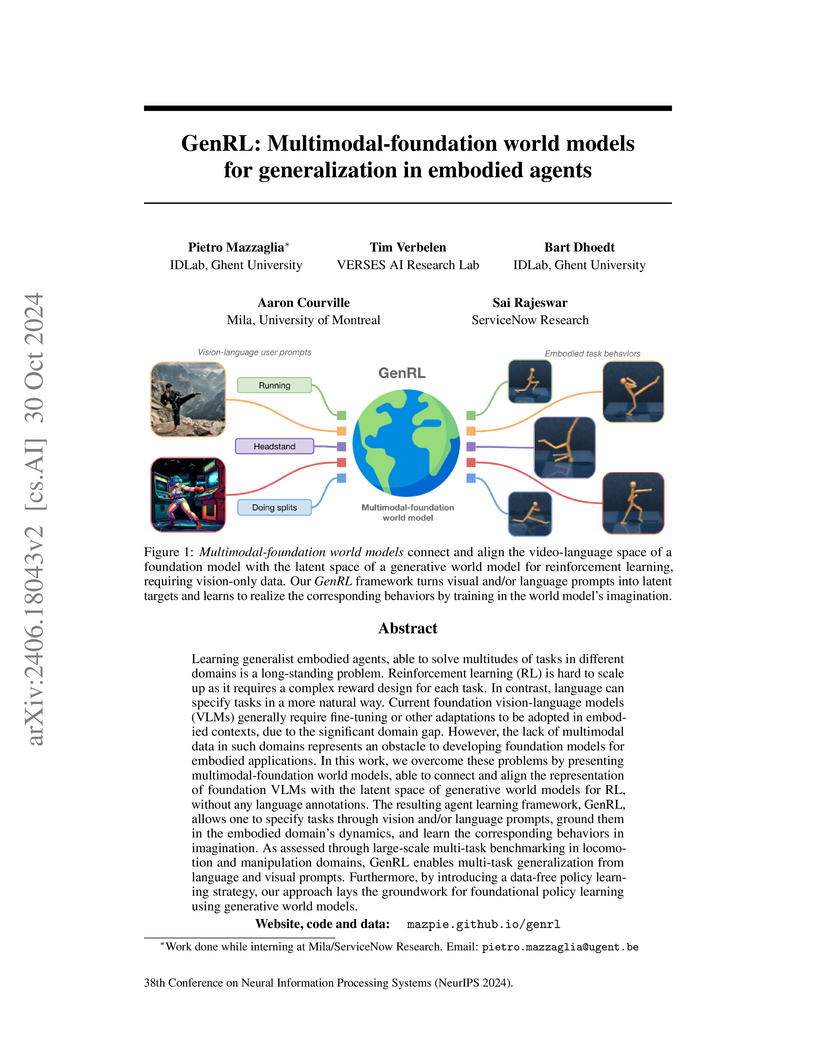

Learning generalist embodied agents, able to solve multitudes of tasks in different domains is a long-standing problem. Reinforcement learning (RL) is hard to scale up as it requires a complex reward design for each task. In contrast, language can specify tasks in a more natural way. Current foundation vision-language models (VLMs) generally require fine-tuning or other adaptations to be adopted in embodied contexts, due to the significant domain gap. However, the lack of multimodal data in such domains represents an obstacle to developing foundation models for embodied applications. In this work, we overcome these problems by presenting multimodal-foundation world models, able to connect and align the representation of foundation VLMs with the latent space of generative world models for RL, without any language annotations. The resulting agent learning framework, GenRL, allows one to specify tasks through vision and/or language prompts, ground them in the embodied domain's dynamics, and learn the corresponding behaviors in imagination. As assessed through large-scale multi-task benchmarking in locomotion and manipulation domains, GenRL enables multi-task generalization from language and visual prompts. Furthermore, by introducing a data-free policy learning strategy, our approach lays the groundwork for foundational policy learning using generative world models. Website, code and data: this https URL

We propose a new tensor network renormalization group (TNR) scheme based on global optimization and introduce a new method for constructing the finite-temperature density matrix of two-dimensional quantum systems. Combining these two into a new algorithm called thermal tensor network renormalization (TTNR), we obtain highly accurate conformal field theory (CFT) data at thermal transition points. This provides a new and efficient route for numerically identifying phase transitions, offering an alternative to the conventional analysis via critical exponents.

28 Aug 2025

University of Southern CaliforniaGhent University

University of Southern CaliforniaGhent University National University of SingaporeUniversity of the Basque Country (UPV/EHU)

National University of SingaporeUniversity of the Basque Country (UPV/EHU) Purdue UniversityZuse Institute BerlinTechnische Universität BerlinForschungszentrum JülichHiroshima UniversityIBM Research Europe - DublinFederal University of Rio de JaneiroE.ON Digital Technology GmbHKipu Quantum GmbHNTT DataQuantagoniaT-Systems International GmbHIBM Research

TokyoIBM Research Europe ","Zurich

Purdue UniversityZuse Institute BerlinTechnische Universität BerlinForschungszentrum JülichHiroshima UniversityIBM Research Europe - DublinFederal University of Rio de JaneiroE.ON Digital Technology GmbHKipu Quantum GmbHNTT DataQuantagoniaT-Systems International GmbHIBM Research

TokyoIBM Research Europe ","ZurichThrough recent progress in hardware development, quantum computers have advanced to the point where benchmarking of (heuristic) quantum algorithms at scale is within reach. Particularly in combinatorial optimization - where most algorithms are heuristics - it is key to empirically analyze their performance on hardware and track progress towards quantum advantage. To this extent, we present ten optimization problem classes that are difficult for existing classical algorithms and can (mostly) be linked to practically relevant applications, with the goal to enable systematic, fair, and comparable benchmarks for quantum optimization methods. Further, we introduce the Quantum Optimization Benchmarking Library (QOBLIB) where the problem instances and solution track records can be found. The individual properties of the problem classes vary in terms of objective and variable type, coefficient ranges, and density. Crucially, they all become challenging for established classical methods already at system sizes ranging from less than 100 to, at most, an order of 100,000 decision variables, allowing to approach them with today's quantum computers. We reference the results from state-of-the-art solvers for instances from all problem classes and demonstrate exemplary baseline results obtained with quantum solvers for selected problems. The baseline results illustrate a standardized form to present benchmarking solutions, which has been designed to ensure comparability of the used methods, reproducibility of the respective results, and trackability of algorithmic and hardware improvements over time. We encourage the optimization community to explore the performance of available classical or quantum algorithms and hardware platforms with the benchmarking problem instances presented in this work toward demonstrating quantum advantage in optimization.

VIME introduces a scalable exploration strategy for deep reinforcement learning that augments environment rewards with an intrinsic motivation term derived from information gain about the agent's uncertainty over environment dynamics. The method, which utilizes Bayesian Neural Networks and variational inference, allows agents to learn effectively in high-dimensional continuous control tasks with sparse rewards, often solving tasks where traditional heuristic exploration fails.

Trustworthy ML systems should not only return accurate predictions, but also a reliable representation of their uncertainty. Bayesian methods are commonly used to quantify both aleatoric and epistemic uncertainty, but alternative approaches, such as evidential deep learning methods, have become popular in recent years. The latter group of methods in essence extends empirical risk minimization (ERM) for predicting second-order probability distributions over outcomes, from which measures of epistemic (and aleatoric) uncertainty can be extracted. This paper presents novel theoretical insights of evidential deep learning, highlighting the difficulties in optimizing second-order loss functions and interpreting the resulting epistemic uncertainty measures. With a systematic setup that covers a wide range of approaches for classification, regression and counts, it provides novel insights into issues of identifiability and convergence in second-order loss minimization, and the relative (rather than absolute) nature of epistemic uncertainty measures.

Northwestern Polytechnical University Northeastern University

Northeastern University Sun Yat-Sen UniversityGhent UniversityKorea University

Sun Yat-Sen UniversityGhent UniversityKorea University Nanjing University

Nanjing University Zhejiang University

Zhejiang University University of MichiganXidian UniversityUniversity of Electronic Science and Technology of ChinaCentral South UniversityUniversity of Hong KongTechnology Innovation Institute

University of MichiganXidian UniversityUniversity of Electronic Science and Technology of ChinaCentral South UniversityUniversity of Hong KongTechnology Innovation Institute Yale UniversityUniversitat Pompeu Fabra

Yale UniversityUniversitat Pompeu Fabra NVIDIA

NVIDIA Huawei

Huawei Nanyang Technological UniversityUniversity of GranadaChina TelecomUlsan National Institute of Science and Technology

Nanyang Technological UniversityUniversity of GranadaChina TelecomUlsan National Institute of Science and Technology King’s College LondonSingapore University of Technology and Design

King’s College LondonSingapore University of Technology and Design Aalto University

Aalto University Virginia TechUniversity of HoustonEast China Normal University

Virginia TechUniversity of HoustonEast China Normal University KTH Royal Institute of TechnologyUniversity of OuluKhalifa UniversityLightOnCentraleSupélecUniversity of LeedsIMECNokia Bell LabsCEA-LetiUniversity of YorkOrangeEricssonBrunel University LondonQualcommChina UnicomBubbleRANITUEMIRATES INTEGRATED TELECOMMUNICATIONS COMPANYFENTECHGSMARIMEDO LABSKATIMCHINA MOBILE COMMUNICATIONS CORPORATIONBeijing Institute of TechnologyEurécom

KTH Royal Institute of TechnologyUniversity of OuluKhalifa UniversityLightOnCentraleSupélecUniversity of LeedsIMECNokia Bell LabsCEA-LetiUniversity of YorkOrangeEricssonBrunel University LondonQualcommChina UnicomBubbleRANITUEMIRATES INTEGRATED TELECOMMUNICATIONS COMPANYFENTECHGSMARIMEDO LABSKATIMCHINA MOBILE COMMUNICATIONS CORPORATIONBeijing Institute of TechnologyEurécom

Northeastern University

Northeastern University Sun Yat-Sen UniversityGhent UniversityKorea University

Sun Yat-Sen UniversityGhent UniversityKorea University Nanjing University

Nanjing University Zhejiang University

Zhejiang University University of MichiganXidian UniversityUniversity of Electronic Science and Technology of ChinaCentral South UniversityUniversity of Hong KongTechnology Innovation Institute

University of MichiganXidian UniversityUniversity of Electronic Science and Technology of ChinaCentral South UniversityUniversity of Hong KongTechnology Innovation Institute Yale UniversityUniversitat Pompeu Fabra

Yale UniversityUniversitat Pompeu Fabra NVIDIA

NVIDIA Huawei

Huawei Nanyang Technological UniversityUniversity of GranadaChina TelecomUlsan National Institute of Science and Technology

Nanyang Technological UniversityUniversity of GranadaChina TelecomUlsan National Institute of Science and Technology King’s College LondonSingapore University of Technology and Design

King’s College LondonSingapore University of Technology and Design Aalto University

Aalto University Virginia TechUniversity of HoustonEast China Normal University

Virginia TechUniversity of HoustonEast China Normal University KTH Royal Institute of TechnologyUniversity of OuluKhalifa UniversityLightOnCentraleSupélecUniversity of LeedsIMECNokia Bell LabsCEA-LetiUniversity of YorkOrangeEricssonBrunel University LondonQualcommChina UnicomBubbleRANITUEMIRATES INTEGRATED TELECOMMUNICATIONS COMPANYFENTECHGSMARIMEDO LABSKATIMCHINA MOBILE COMMUNICATIONS CORPORATIONBeijing Institute of TechnologyEurécom

KTH Royal Institute of TechnologyUniversity of OuluKhalifa UniversityLightOnCentraleSupélecUniversity of LeedsIMECNokia Bell LabsCEA-LetiUniversity of YorkOrangeEricssonBrunel University LondonQualcommChina UnicomBubbleRANITUEMIRATES INTEGRATED TELECOMMUNICATIONS COMPANYFENTECHGSMARIMEDO LABSKATIMCHINA MOBILE COMMUNICATIONS CORPORATIONBeijing Institute of TechnologyEurécomA comprehensive white paper from the GenAINet Initiative introduces Large Telecom Models (LTMs) as a novel framework for integrating AI into telecommunications infrastructure, providing a detailed roadmap for innovation while addressing critical challenges in scalability, hardware requirements, and regulatory compliance through insights from a diverse coalition of academic, industry and regulatory experts.

This research provides empirical evidence that Large Language Models reflect the ideological positions of their creators, with their stances significantly influenced by the geopolitical region of origin and the language used for prompting. The study demonstrates that LLMs from different regions and language groups exhibit distinct ideological leanings, challenging the concept of ideological neutrality in AI.

A generalized Density Matrix Renormalisation Group (DMRG) method integrates duality transformations from generalized symmetries to identify an "optimal dual model" for 1D gapped quantum lattice systems. This approach significantly reduces computational resources, including memory and variational parameters, by minimizing entanglement when the dual symmetry is completely broken in the ground state.

Active inference is a unifying theory for perception and action resting upon

the idea that the brain maintains an internal model of the world by minimizing

free energy. From a behavioral perspective, active inference agents can be seen

as self-evidencing beings that act to fulfill their optimistic predictions,

namely preferred outcomes or goals. In contrast, reinforcement learning

requires human-designed rewards to accomplish any desired outcome. Although

active inference could provide a more natural self-supervised objective for

control, its applicability has been limited because of the shortcomings in

scaling the approach to complex environments. In this work, we propose a

contrastive objective for active inference that strongly reduces the

computational burden in learning the agent's generative model and planning

future actions. Our method performs notably better than likelihood-based active

inference in image-based tasks, while also being computationally cheaper and

easier to train. We compare to reinforcement learning agents that have access

to human-designed reward functions, showing that our approach closely matches

their performance. Finally, we also show that contrastive methods perform

significantly better in the case of distractors in the environment and that our

method is able to generalize goals to variations in the background. Website and

code: https://contrastive-aif.github.io/

The real estate market is vital to global economies but suffers from

significant information asymmetry. This study examines how Large Language

Models (LLMs) can democratize access to real estate insights by generating

competitive and interpretable house price estimates through optimized

In-Context Learning (ICL) strategies. We systematically evaluate leading LLMs

on diverse international housing datasets, comparing zero-shot, few-shot,

market report-enhanced, and hybrid prompting techniques. Our results show that

LLMs effectively leverage hedonic variables, such as property size and

amenities, to produce meaningful estimates. While traditional machine learning

models remain strong for pure predictive accuracy, LLMs offer a more

accessible, interactive and interpretable alternative. Although

self-explanations require cautious interpretation, we find that LLMs explain

their predictions in agreement with state-of-the-art models, confirming their

trustworthiness. Carefully selected in-context examples based on feature

similarity and geographic proximity, significantly enhance LLM performance, yet

LLMs struggle with overconfidence in price intervals and limited spatial

reasoning. We offer practical guidance for structured prediction tasks through

prompt optimization. Our findings highlight LLMs' potential to improve

transparency in real estate appraisal and provide actionable insights for

stakeholders.

There are no more papers matching your filters at the moment.