NJIT

Bloomberg researchers present a systematization of knowledge for red-teaming Large Language Models, introducing a comprehensive threat model and a novel taxonomy that categorizes attacks based on an adversary's level of access to the LLM system. This work provides a structured framework for identifying vulnerabilities and guiding defense efforts across the LLM development lifecycle.

SAE-SSV: Supervised Steering in Sparse Representation Spaces for Reliable Control of Language Models

SAE-SSV: Supervised Steering in Sparse Representation Spaces for Reliable Control of Language Models

This paper presents SAE-SSV, a framework for precisely controlling Large Language Model behavior by operating in sparse, interpretable representation spaces learned by Sparse Autoencoders. The method consistently achieves higher steering success rates while maintaining or improving generated text quality across various models and tasks, such as sentiment and truthfulness control.

30 Jul 2025

DeepSieve introduces a multi-stage information sieving framework that utilizes a Large Language Model as a knowledge router to navigate heterogeneous data sources for Retrieval-Augmented Generation. The system dynamically decomposes complex queries and employs iterative self-correction, achieving state-of-the-art accuracy in multi-hop question answering while significantly reducing LLM token usage by over 90% compared to existing agentic RAG paradigms.

Researchers at New Jersey Institute of Technology developed SAIF, a framework leveraging Sparse Autoencoders to interpret and steer Large Language Models' instruction-following capabilities by identifying specific, interpretable latent features. SAIF enabled steered models to achieve over 30% strict accuracy across various tasks, with loose accuracy comparable to traditional prompting, while also revealing the distributed nature of instruction representations in LLMs.

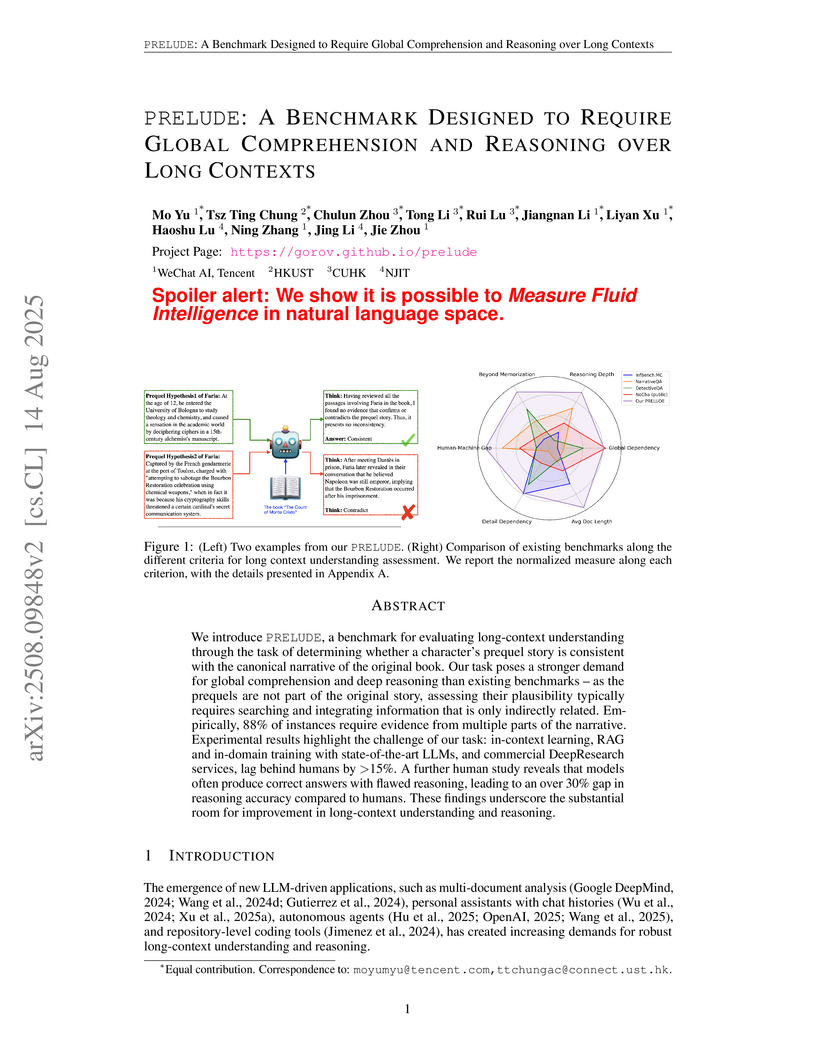

PRELUDE is a benchmark designed to assess Large Language Models' global comprehension and deep reasoning over long contexts by tasking them to verify the consistency of character prequels with canonical novels. Experiments revealed a substantial performance gap between state-of-the-art LLMs and human annotators, particularly in the quality of underlying reasoning.

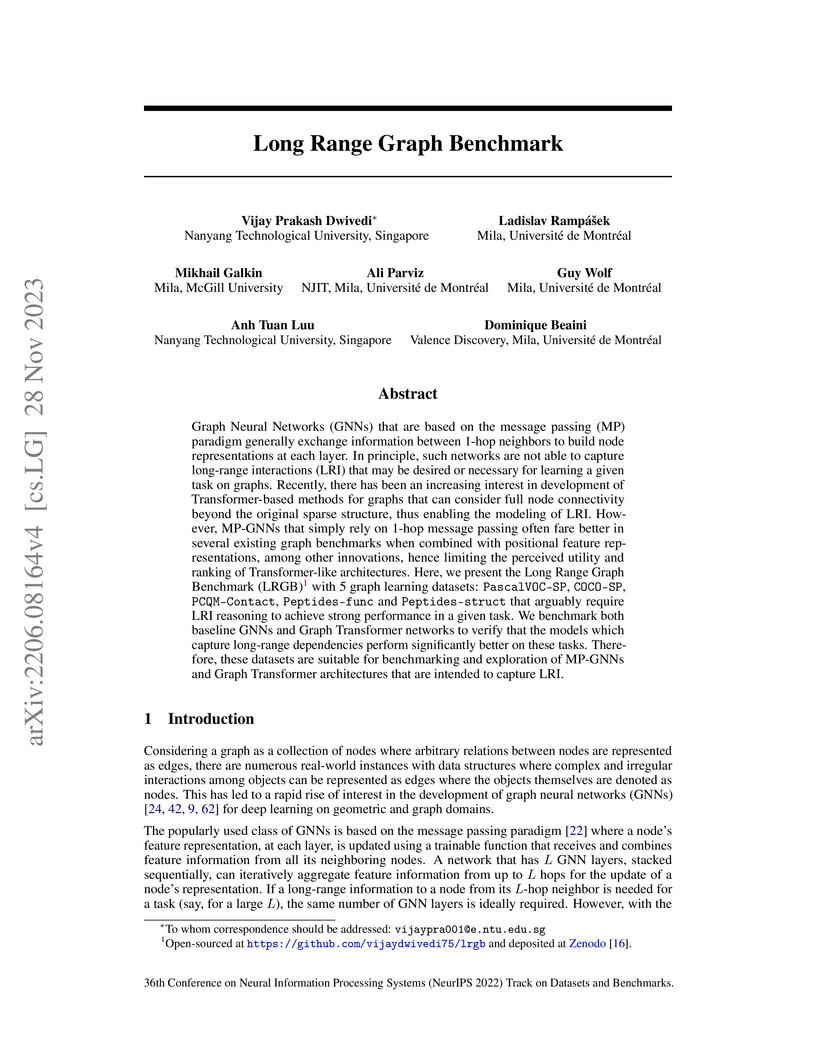

Graph Neural Networks (GNNs) that are based on the message passing (MP)

paradigm generally exchange information between 1-hop neighbors to build node

representations at each layer. In principle, such networks are not able to

capture long-range interactions (LRI) that may be desired or necessary for

learning a given task on graphs. Recently, there has been an increasing

interest in development of Transformer-based methods for graphs that can

consider full node connectivity beyond the original sparse structure, thus

enabling the modeling of LRI. However, MP-GNNs that simply rely on 1-hop

message passing often fare better in several existing graph benchmarks when

combined with positional feature representations, among other innovations,

hence limiting the perceived utility and ranking of Transformer-like

architectures. Here, we present the Long Range Graph Benchmark (LRGB) with 5

graph learning datasets: PascalVOC-SP, COCO-SP, PCQM-Contact, Peptides-func and

Peptides-struct that arguably require LRI reasoning to achieve strong

performance in a given task. We benchmark both baseline GNNs and Graph

Transformer networks to verify that the models which capture long-range

dependencies perform significantly better on these tasks. Therefore, these

datasets are suitable for benchmarking and exploration of MP-GNNs and Graph

Transformer architectures that are intended to capture LRI.

The prediction of protein-protein interactions (PPIs) is crucial for understanding biological functions and diseases. Previous machine learning approaches to PPI prediction mainly focus on direct physical interactions, ignoring the broader context of nonphysical connections through intermediate proteins, thus limiting their effectiveness. The emergence of Large Language Models (LLMs) provides a new opportunity for addressing this complex biological challenge. By transforming structured data into natural language prompts, we can map the relationships between proteins into texts. This approach allows LLMs to identify indirect connections between proteins, tracing the path from upstream to downstream. Therefore, we propose a novel framework ProLLM that employs an LLM tailored for PPI for the first time. Specifically, we propose Protein Chain of Thought (ProCoT), which replicates the biological mechanism of signaling pathways as natural language prompts. ProCoT considers a signaling pathway as a protein reasoning process, which starts from upstream proteins and passes through several intermediate proteins to transmit biological signals to downstream proteins. Thus, we can use ProCoT to predict the interaction between upstream proteins and downstream proteins. The training of ProLLM employs the ProCoT format, which enhances the model's understanding of complex biological problems. In addition to ProCoT, this paper also contributes to the exploration of embedding replacement of protein sites in natural language prompts, and instruction fine-tuning in protein knowledge datasets. We demonstrate the efficacy of ProLLM through rigorous validation against benchmark datasets, showing significant improvement over existing methods in terms of prediction accuracy and generalizability. The code is available at: this https URL.

We propose a novel PDE-driven corruption process for generative image synthesis based on advection-diffusion processes which generalizes existing PDE-based approaches. Our forward pass formulates image corruption via a physically motivated PDE that couples directional advection with isotropic diffusion and Gaussian noise, controlled by dimensionless numbers (Peclet, Fourier). We implement this PDE numerically through a GPU-accelerated custom Lattice Boltzmann solver for fast evaluation. To induce realistic turbulence, we generate stochastic velocity fields that introduce coherent motion and capture multi-scale mixing. In the generative process, a neural network learns to reverse the advection-diffusion operator thus constituting a novel generative model. We discuss how previous methods emerge as specific cases of our operator, demonstrating that our framework generalizes prior PDE-based corruption techniques. We illustrate how advection improves the diversity and quality of the generated images while keeping the overall color palette unaffected. This work bridges fluid dynamics, dimensionless PDE theory, and deep generative modeling, offering a fresh perspective on physically informed image corruption processes for diffusion-based synthesis.

The scarcity of accessible, compliant, and ethically sourced data presents a

considerable challenge to the adoption of artificial intelligence (AI) in

sensitive fields like healthcare, finance, and biomedical research.

Furthermore, access to unrestricted public datasets is increasingly constrained

due to rising concerns over privacy, copyright, and competition. Synthetic data

has emerged as a promising alternative, and diffusion models -- a cutting-edge

generative AI technology -- provide an effective solution for generating

high-quality and diverse synthetic data. In this paper, we introduce a novel

federated learning framework for training diffusion models on decentralized

private datasets. Our framework leverages personalization and the inherent

noise in the forward diffusion process to produce high-quality samples while

ensuring robust differential privacy guarantees. Our experiments show that our

framework outperforms non-collaborative training methods, particularly in

settings with high data heterogeneity, and effectively reduces biases and

imbalances in synthetic data, resulting in fairer downstream models.

Working memory involves the temporary retention of information over short

periods. It is a critical cognitive function that enables humans to perform

various online processing tasks, such as dialing a phone number, recalling

misplaced items' locations, or navigating through a store. However, inherent

limitations in an individual's capacity to retain information often result in

forgetting important details during such tasks. Although previous research has

successfully utilized wearable and assistive technologies to enhance long-term

memory functions (e.g., episodic memory), their application to supporting

short-term recall in daily activities remains underexplored. To address this

gap, we present Memento, a framework that uses multimodal wearable sensor data

to detect significant changes in cognitive state and provide intelligent in

situ cues to enhance recall. Through two user studies involving 15 and 25

participants in visual search navigation tasks, we demonstrate that

participants receiving visual cues from Memento achieved significantly better

route recall, improving approximately 20-23% compared to free recall.

Furthermore, Memento reduced cognitive load and review time by 46% while also

substantially reducing computation time (3.86 seconds vs. 15.35 seconds),

offering an average of 75% effectiveness compared to computer vision-based cue

selection approaches.

02 Oct 2023

Stochastic approximation (SA) is a powerful and scalable computational method

for iteratively estimating the solution of optimization problems in the

presence of randomness, particularly well-suited for large-scale and streaming

data settings. In this work, we propose a theoretical framework for stochastic

approximation (SA) applied to non-parametric least squares in reproducing

kernel Hilbert spaces (RKHS), enabling online statistical inference in

non-parametric regression models. We achieve this by constructing

asymptotically valid pointwise (and simultaneous) confidence intervals (bands)

for local (and global) inference of the nonlinear regression function, via

employing an online multiplier bootstrap approach to functional stochastic

gradient descent (SGD) algorithm in the RKHS. Our main theoretical

contributions consist of a unified framework for characterizing the

non-asymptotic behavior of the functional SGD estimator and demonstrating the

consistency of the multiplier bootstrap method. The proof techniques involve

the development of a higher-order expansion of the functional SGD estimator

under the supremum norm metric and the Gaussian approximation of suprema of

weighted and non-identically distributed empirical processes. Our theory

specifically reveals an interesting relationship between the tuning of step

sizes in SGD for estimation and the accuracy of uncertainty quantification.

04 Oct 2025

Predicting corporate earnings surprises is a profitable yet challenging task, as accurate forecasts can inform significant investment decisions. However, progress in this domain has been constrained by a reliance on expensive, proprietary, and text-only data, limiting the development of advanced models. To address this gap, we introduce \textbf{FinCall-Surprise} (Financial Conference Call for Earning Surprise Prediction), the first large-scale, open-source, and multi-modal dataset for earnings surprise prediction. Comprising 2,688 unique corporate conference calls from 2019 to 2021, our dataset features word-to-word conference call textual transcripts, full audio recordings, and corresponding presentation slides. We establish a comprehensive benchmark by evaluating 26 state-of-the-art unimodal and multi-modal LLMs. Our findings reveal that (1) while many models achieve high accuracy, this performance is often an illusion caused by significant class imbalance in the real-world data. (2) Some specialized financial models demonstrate unexpected weaknesses in instruction-following and language generation. (3) Although incorporating audio and visual modalities provides some performance gains, current models still struggle to leverage these signals effectively. These results highlight critical limitations in the financial reasoning capabilities of existing LLMs and establish a challenging new baseline for future research.

Backdoor attack is a severe threat to the trustworthiness of DNN-based language models. In this paper, we first extend the definition of memorization of language models from sample-wise to more fine-grained sentence element-wise (e.g., word, phrase, structure, and style), and then point out that language model backdoors are a type of element-wise memorization. Through further analysis, we find that the strength of such memorization is positively correlated to the frequency of duplicated elements in the training dataset. In conclusion, duplicated sentence elements are necessary for successful backdoor attacks. Based on this, we propose a data-centric defense. We first detect trigger candidates in training data by finding memorizable elements, i.e., duplicated elements, and then confirm real triggers by testing if the candidates can activate backdoor behaviors (i.e., malicious elements). Results show that our method outperforms state-of-the-art defenses in defending against different types of NLP backdoors.

01 Sep 2022

This paper proposes a novel inverse kinematics (IK) solver of articulated robotic systems for path planning. IK is a traditional but essential problem for robot manipulation. Recently, data-driven methods have been proposed to quickly solve the IK for path planning. These methods can handle a large amount of IK requests at once with the advantage of GPUs. However, the accuracy is still low, and the model requires considerable time for training. Therefore, we propose an IK solver that improves accuracy and memory efficiency by utilizing the continuous hidden dynamics of Neural ODE. The performance is compared using multiple robots.

The global financial system stands at an inflection point. Stablecoins represent the most significant evolution in banking since the abandonment of the gold standard, positioned to enable "Banking 2.0" by seamlessly integrating cryptocurrency innovation with traditional finance infrastructure. This transformation rivals artificial intelligence as the next major disruptor in the financial sector. Modern fiat currencies derive value entirely from institutional trust rather than physical backing, creating vulnerabilities that stablecoins address through enhanced stability, reduced fraud risk, and unified global transactions that transcend national boundaries. Recent developments demonstrate accelerating institutional adoption: landmark U.S. legislation including the GENIUS Act of 2025, strategic industry pivots from major players like JPMorgan's crypto-backed loan initiatives, and PayPal's comprehensive "Pay with Crypto" service. Widespread stablecoin implementation addresses critical macroeconomic imbalances, particularly the inflation-productivity gap plaguing modern monetary systems, through more robust and diversified backing mechanisms. Furthermore, stablecoins facilitate deregulation and efficiency gains, paving the way for a more interconnected international financial system. This whitepaper comprehensively explores how stablecoins are poised to reshape banking, supported by real-world examples, current market data, and analysis of their transformative potential.

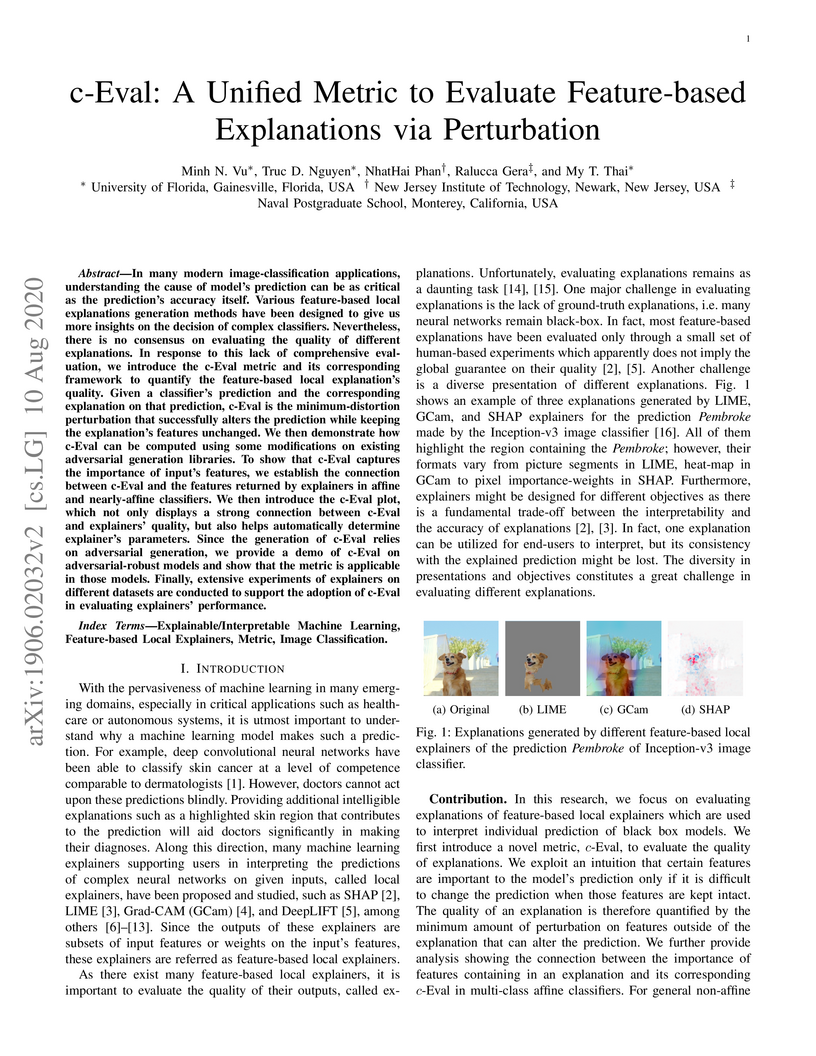

In many modern image-classification applications, understanding the cause of model's prediction can be as critical as the prediction's accuracy itself. Various feature-based local explanations generation methods have been designed to give us more insights on the decision of complex classifiers. Nevertheless, there is no consensus on evaluating the quality of different explanations. In response to this lack of comprehensive evaluation, we introduce the c-Eval metric and its corresponding framework to quantify the feature-based local explanation's quality. Given a classifier's prediction and the corresponding explanation on that prediction, c-Eval is the minimum-distortion perturbation that successfully alters the prediction while keeping the explanation's features unchanged. We then demonstrate how c-Eval can be computed using some modifications on existing adversarial generation libraries. To show that c-Eval captures the importance of input's features, we establish the connection between c-Eval and the features returned by explainers in affine and nearly-affine classifiers. We then introduce the c-Eval plot, which not only displays a strong connection between c-Eval and explainers' quality, but also helps automatically determine explainer's parameters. Since the generation of c-Eval relies on adversarial generation, we provide a demo of c-Eval on adversarial-robust models and show that the metric is applicable in those models. Finally, extensive experiments of explainers on different datasets are conducted to support the adoption of c-Eval in evaluating explainers' performance.

Exploring the outer atmosphere of the sun has remained a significant bottleneck in astrophysics, given the intricate magnetic formations that significantly influence diverse solar events. Magnetohydrodynamics (MHD) simulations allow us to model the complex interactions between the sun's plasma, magnetic fields, and the surrounding environment. However, MHD simulation is extremely time-consuming, taking days or weeks for simulation. The goal of this study is to accelerate coronal magnetic field simulation using deep learning, specifically, the Fourier Neural Operator (FNO). FNO has been proven to be an ideal tool for scientific computing and discovery in the literature. In this paper, we proposed a global-local Fourier Neural Operator (GL-FNO) that contains two branches of FNOs: the global FNO branch takes downsampled input to reconstruct global features while the local FNO branch takes original resolution input to capture fine details. The performance of the GLFNO is compared with state-of-the-art deep learning methods, including FNO, U-NO, U-FNO, Vision Transformer, CNN-RNN, and CNN-LSTM, to demonstrate its accuracy, computational efficiency, and scalability. Furthermore, physics analysis from domain experts is also performed to demonstrate the reliability of GL-FNO. The results demonstrate that GL-FNO not only accelerates the MHD simulation (a few seconds for prediction, more than \times 20,000 speed up) but also provides reliable prediction capabilities, thus greatly contributing to the understanding of space weather dynamics. Our code implementation is available at this https URL

12 Oct 2025

Data-sharing ecosystems enable entities -- such as providers, consumers, and intermediaries -- to access, exchange, and utilize data for various downstream tasks and applications. Due to privacy concerns, data providers typically anonymize datasets before sharing them; however, the existence of multiple masking configurations results in masked datasets with varying utility. Consequently, a key challenge lies in efficiently determining the optimal masking configuration that maximizes a dataset's utility. This paper presents AEGIS, a middleware framework for identifying the optimal masking configuration for machine learning datasets that consist of features and a class label. We introduce a utility optimizer that minimizes predictive utility deviation -- a metric based on the changes in feature-label correlations before and after masking. Our framework leverages limited data summaries (such as 1D histograms) or none to estimate the feature-label joint distribution, making it suitable for scenarios where raw data is inaccessible due to privacy restrictions. To achieve this, we propose a joint distribution estimator based on iterative proportional fitting, which allows supporting various feature-label correlation quantification methods such as g3, mutual information, or chi-square. Our experimental evaluation on real-world datasets shows that AEGIS identifies optimal masking configurations over an order of magnitude faster, while the resulting masked datasets achieve predictive performance on downstream ML tasks that is on par with baseline approaches.

26 Aug 2015

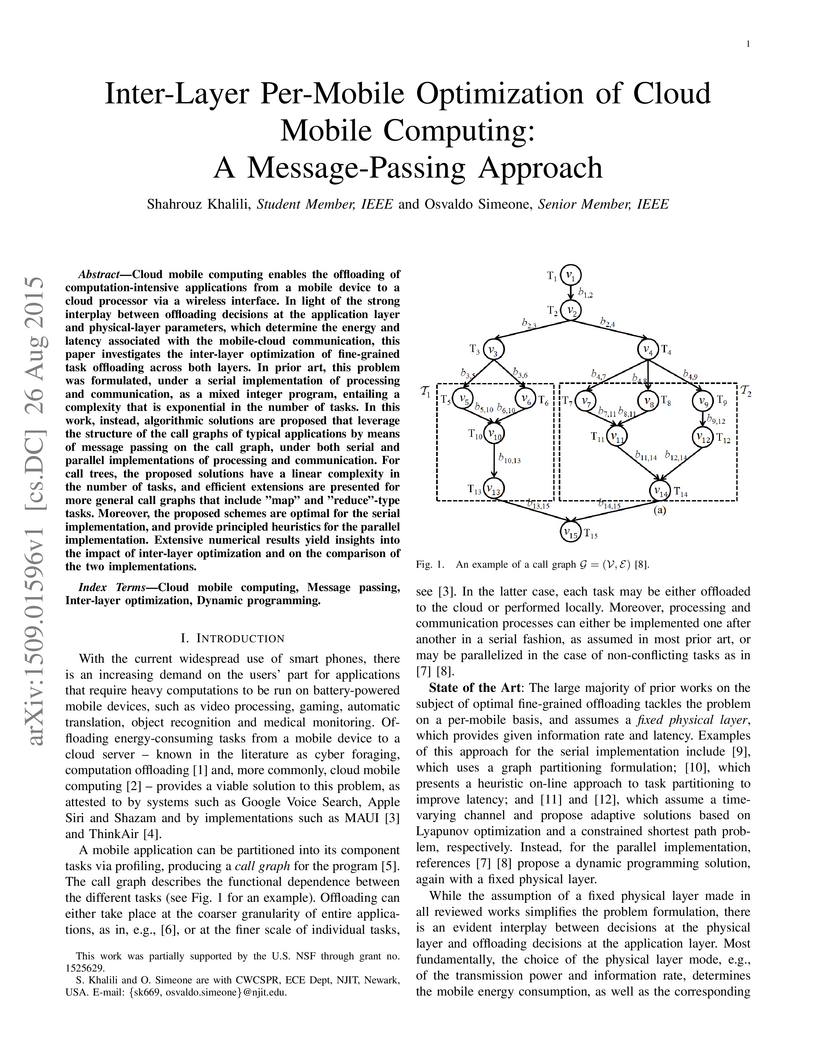

Cloud mobile computing enables the offloading of computation-intensive

applications from a mobile device to a cloud processor via a wireless

interface. In light of the strong interplay between offloading decisions at the

application layer and physical-layer parameters, which determine the energy and

latency associated with the mobile-cloud communication, this paper investigates

the inter-layer optimization of fine-grained task offloading across both

layers. In prior art, this problem was formulated, under a serial

implementation of processing and communication, as a mixed integer program,

entailing a complexity that is exponential in the number of tasks. In this

work, instead, algorithmic solutions are proposed that leverage the structure

of the call graphs of typical applications by means of message passing on the

call graph, under both serial and parallel implementations of processing and

communication. For call trees, the proposed solutions have a linear complexity

in the number of tasks, and efficient extensions are presented for more general

call graphs that include "map" and "reduce"-type tasks. Moreover, the proposed

schemes are optimal for the serial implementation, and provide principled

heuristics for the parallel implementation. Extensive numerical results yield

insights into the impact of inter-layer optimization and on the comparison of

the two implementations.

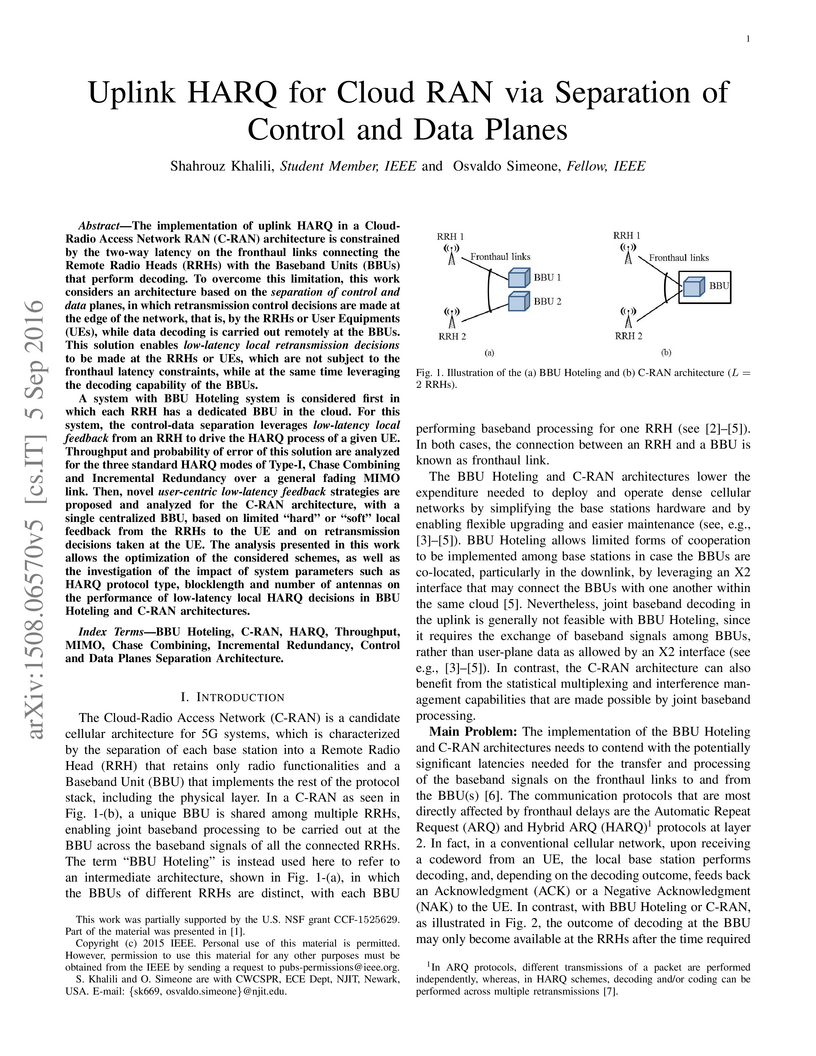

The implementation of uplink HARQ in a Cloud- Radio Access Network RAN

(C-RAN) architecture is constrained by the two-way latency on the fronthaul

links connecting the Remote Radio Heads (RRHs) with the Baseband Units (BBUs)

that perform decoding. To overcome this limitation, this work considers an

architecture based on the separation of control and data planes, in which

retransmission control decisions are made at the edge of the network, that is,

by the RRHs or User Equipments (UEs), while data decoding is carried out

remotely at the BBUs. This solution enables low-latency local retransmission

decisions to be made at the RRHs or UEs, which are not subject to the fronthaul

latency constraints, while at the same time leveraging the decoding capability

of the BBUs.

A system with BBU Hoteling system is considered first in which each RRH has a

dedicated BBU in the cloud. For this system, the control-data separation

leverages low-latency local feedback from an RRH to drive the HARQ process of a

given UE. Throughput and probability of error of this solution are analyzed for

the three standard HARQ modes of Type-I, Chase Combining and Incremental

Redundancy over a general fading MIMO link. Then, novel user-centric

low-latency feedback strategies are proposed and analyzed for the C-RAN

architecture, with a single centralized BBU, based on limited "hard" or "soft"

local feedback from the RRHs to the UE and on retransmission decisions taken at

the UE. The analysis presented in this work allows the optimization of the

considered schemes, as well as the investigation of the impact of system

parameters such as HARQ protocol type, blocklength and number of antennas on

the performance of low-latency local HARQ decisions in BBU Hoteling and C-RAN

architectures.

There are no more papers matching your filters at the moment.