PUC-Rio

We propose a methodology that combines several advanced techniques in Large Language Model (LLM) retrieval to support the development of robust, multi-source question-answer systems. This methodology is designed to integrate information from diverse data sources, including unstructured documents (PDFs) and structured databases, through a coordinated multi-agent orchestration and dynamic retrieval approach. Our methodology leverages specialized agents-such as SQL agents, Retrieval-Augmented Generation (RAG) agents, and router agents - that dynamically select the most appropriate retrieval strategy based on the nature of each query. To further improve accuracy and contextual relevance, we employ dynamic prompt engineering, which adapts in real time to query-specific contexts. The methodology's effectiveness is demonstrated within the domain of Contract Management, where complex queries often require seamless interaction between unstructured and structured data. Our results indicate that this approach enhances response accuracy and relevance, offering a versatile and scalable framework for developing question-answer systems that can operate across various domains and data sources.

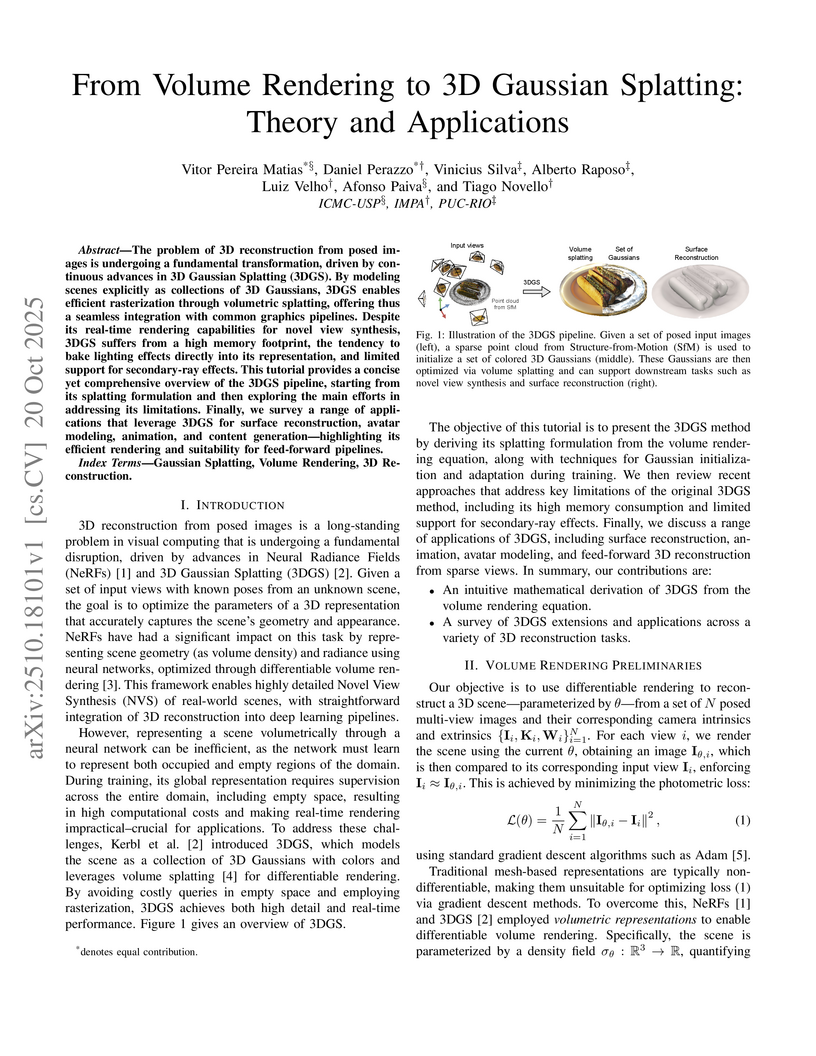

The problem of 3D reconstruction from posed images is undergoing a fundamental transformation, driven by continuous advances in 3D Gaussian Splatting (3DGS). By modeling scenes explicitly as collections of 3D Gaussians, 3DGS enables efficient rasterization through volumetric splatting, offering thus a seamless integration with common graphics pipelines. Despite its real-time rendering capabilities for novel view synthesis, 3DGS suffers from a high memory footprint, the tendency to bake lighting effects directly into its representation, and limited support for secondary-ray effects. This tutorial provides a concise yet comprehensive overview of the 3DGS pipeline, starting from its splatting formulation and then exploring the main efforts in addressing its limitations. Finally, we survey a range of applications that leverage 3DGS for surface reconstruction, avatar modeling, animation, and content generation-highlighting its efficient rendering and suitability for feed-forward pipelines.

17 Oct 2025

Traditional end-to-end contextual robust optimization models are trained for specific contextual data, requiring complete retraining whenever new contextual information arrives. This limitation hampers their use in online decision-making problems such as energy scheduling, where multiperiod optimization must be solved every few minutes. In this paper, we propose a novel Data-Driven Contextual Uncertainty Set, which gives rise to a new End-to-End Data-Driven Contextual Robust Optimization model. For right-hand-side uncertainty, we introduce a reformulation scheme that enables the development of a variant of the Column-and-Constraint Generation (CCG) algorithm. This new CCG method explicitly considers the contextual vector within the cut expressions, allowing previously generated cuts to remain valid for new contexts, thereby significantly accelerating convergence in online applications. Numerical experiments on energy and reserve scheduling problems, based on classical test cases and large-scale networks (with more than 10,000 nodes), demonstrate that the proposed method reduces computation times and operational costs while capturing context-dependent risk structures. The proposed framework (model and method), therefore, offers a unified, scalable, and prescriptive approach to robust decision-making under uncertainty, effectively bridging data-driven learning and optimization.

17 Oct 2025

The quantum geometric properties of typical diamond-type (C, Si, Ge) and zincblende-type (GaAs, InP, etc) semiconductors are investigated by means of the sp3s∗ tight-binding model, which allows to calculate the quantum metric of the valence band states throughout the entire Brillouin zone. The global maximum of the metric is at the Γ point, but other differential geometric properties like Ricci scalar, Ricci tensor, and Einstein tensor are found to vary significantly in the momentum space, indicating a highly distorted momentum space manifold. The momentum integration of the quantum metric further yields the gauge-invariant part of the spread of valence band Wannier function, whose value agrees well with that experimentally extracted from an optical sum rule of the dielectric function. Furthermore, the dependence of these geometric properties on the energy gap offers a way to quantify the quantum criticality of these common semiconductors.

25 Aug 2022

We present SigniFYI-CDN, an inspection method built from previously proposed methods combining Semiotic Engineering and the Cognitive Dimensions of Notations. Compared to its predecessors, SigniFYI-CDN simplifies procedural steps and supports them with more analytic scaffolds. It is especially fit for the study of interaction with technologies where notations are created and used by various people, or by a single person in various, and potentially distant, occasions. In such cases, notations may serve several purposes, like (mutual) comprehension, recall, coordination, negotiation, and documentation. We illustrate SigniFYI-CDN with highlights from the evaluation of a computer tool that supports qualitative data analysis. Our contribution is a simpler tool for researchers and practitioners to probe the power of combined communicability and usability analysis of interaction with increasingly complex data-intensive applications.

Detective fiction, a genre defined by its complex narrative structures and

character-driven storytelling, presents unique challenges for computational

narratology, a research field focused on integrating literary theory into

automated narrative generation. While traditional literary studies have offered

deep insights into the methods and archetypes of fictional detectives, these

analyses often focus on a limited number of characters and lack the scalability

needed for the extraction of unique traits that can be used to guide narrative

generation methods. In this paper, we present an AI-driven approach for

systematically characterizing the investigative methods of fictional

detectives. Our multi-phase workflow explores the capabilities of 15 Large

Language Models (LLMs) to extract, synthesize, and validate distinctive

investigative traits of fictional detectives. This approach was tested on a

diverse set of seven iconic detectives - Hercule Poirot, Sherlock Holmes,

William Murdoch, Columbo, Father Brown, Miss Marple, and Auguste Dupin -

capturing the distinctive investigative styles that define each character. The

identified traits were validated against existing literary analyses and further

tested in a reverse identification phase, achieving an overall accuracy of

91.43%, demonstrating the method's effectiveness in capturing the distinctive

investigative approaches of each detective. This work contributes to the

broader field of computational narratology by providing a scalable framework

for character analysis, with potential applications in AI-driven interactive

storytelling and automated narrative generation.

Forecasting and decision-making are generally modeled as two sequential steps

with no feedback, following an open-loop approach. In this paper, we present

application-driven learning, a new closed-loop framework in which the processes

of forecasting and decision-making are merged and co-optimized through a

bilevel optimization problem. We present our methodology in a general format

and prove that the solution converges to the best estimator in terms of the

expected cost of the selected application. Then, we propose two solution

methods: an exact method based on the KKT conditions of the second-level

problem and a scalable heuristic approach suitable for decomposition methods.

The proposed methodology is applied to the relevant problem of defining dynamic

reserve requirements and conditional load forecasts, offering an alternative

approach to current ad hoc procedures implemented in industry practices. We

benchmark our methodology with the standard sequential least-squares forecast

and dispatch planning process. We apply the proposed methodology to an

illustrative system and to a wide range of instances, from dozens of buses to

large-scale realistic systems with thousands of buses. Our results show that

the proposed methodology is scalable and yields consistently better performance

than the standard open-loop approach.

18 Jan 2022

Real Bruhat cells give an important and well studied stratification of such spaces as GLn+1, Flagn+1=SLn+1/B, SOn+1 and Spinn+1. We study the intersections of a top dimensional cell with another cell (for another basis). Such an intersection is naturally identified with a subset of the lower nilpotent group Lon+11. We are particularly interested in the homotopy type of such intersections. In this paper we define a stratification of such intersections. As a consequence, we obtain a finite CW complex which is homotopically equivalent to the intersection.

We compute the homotopy type for several examples. It turns out that for n≤4 all connected components of such subsets of Lon+11 are contractible: we prove this by explicitly constructing the corresponding CW complexes. Conversely, for n≥5 and the top permutation, there is always a connected component with even Euler characteristic, and therefore not contractible. This follows from formulas for the number of cells per dimension of the corresponding CW complex. For instance, for the top permutation S6, there exists a connected component with Euler characteristic equal to 2. We also give an example of a permutation in S6 for which there exists a connected component which is homotopically equivalent to the circle S1.

Researchers at PUC-Rio developed a theoretical framework for defining and calculating a quantum metric directly in the real space of solid materials. This real-space metric is shown to be experimentally measurable via Angle-Resolved Photoemission Spectroscopy (ARPES) through its identification as the momentum variance of electrons, and its local curvature can be influenced by disorder and material parameters.

We introduce this http URL, a Julia library to differentiate through the solution of optimization problems with respect to arbitrary parameters present in the objective and/or constraints. The library builds upon MathOptInterface, thus leveraging the rich ecosystem of solvers and composing well with modeling languages like JuMP. DiffOpt offers both forward and reverse differentiation modes, enabling multiple use cases from hyperparameter optimization to backpropagation and sensitivity analysis, bridging constrained optimization with end-to-end differentiable programming. DiffOpt is built on two known rules for differentiating quadratic programming and conic programming standard forms. However, thanks ability to differentiate through model transformation, the user is not limited to these forms and can differentiate with respect to the parameters of any model that can be reformulated into these standard forms. This notably includes programs mixing affine conic constraints and convex quadratic constraints or objective function.

Context: The number of TV series offered nowadays is very high. Due to its large amount, many series are canceled due to a lack of originality that generates a low audience.

Problem: Having a decision support system that can show why some shows are a huge success or not would facilitate the choices of renewing or starting a show.

Solution: We studied the case of the series Arrow broadcasted by CW Network and used descriptive and predictive modeling techniques to predict the IMDb rating. We assumed that the theme of the episode would affect its evaluation by users, so the dataset is composed only by the director of the episode, the number of reviews that episode got, the percentual of each theme extracted by the Latent Dirichlet Allocation (LDA) model of an episode, the number of viewers from Wikipedia and the rating from IMDb. The LDA model is a generative probabilistic model of a collection of documents made up of words.

Method: In this prescriptive research, the case study method was used, and its results were analyzed using a quantitative approach.

Summary of Results: With the features of each episode, the model that performed the best to predict the rating was Catboost due to a similar mean squared error of the KNN model but a better standard deviation during the test phase. It was possible to predict IMDb ratings with an acceptable root mean squared error of 0.55.

This work proposes a novel model-free Reinforcement Learning (RL) agent that is able to learn how to complete an unknown task having access to only a part of the input observation. We take inspiration from the concepts of visual attention and active perception that are characteristic of humans and tried to apply them to our agent, creating a hard attention mechanism. In this mechanism, the model decides first which region of the input image it should look at, and only after that it has access to the pixels of that region. Current RL agents do not follow this principle and we have not seen these mechanisms applied to the same purpose as this work. In our architecture, we adapt an existing model called recurrent attention model (RAM) and combine it with the proximal policy optimization (PPO) algorithm. We investigate whether a model with these characteristics is capable of achieving similar performance to state-of-the-art model-free RL agents that access the full input observation. This analysis is made in two Atari games, Pong and SpaceInvaders, which have a discrete action space, and in CarRacing, which has a continuous action space. Besides assessing its performance, we also analyze the movement of the attention of our model and compare it with what would be an example of the human behavior. Even with such visual limitation, we show that our model matches the performance of PPO+LSTM in two of the three games tested.

03 Jun 2018

In this work we present a data-driven method for the discovery of parametric

partial differential equations (PDEs), thus allowing one to disambiguate

between the underlying evolution equations and their parametric dependencies.

Group sparsity is used to ensure parsimonious representations of observed

dynamics in the form of a parametric PDE, while also allowing the coefficients

to have arbitrary time series, or spatial dependence. This work builds on

previous methods for the identification of constant coefficient PDEs, expanding

the field to include a new class of equations which until now have eluded

machine learning based identification methods. We show that group sequentially

thresholded ridge regression outperforms group LASSO in identifying the fewest

terms in the PDE along with their parametric dependency. The method is

demonstrated on four canonical models with and without the introduction of

noise.

In Brazil, all legal professionals must demonstrate their knowledge of the

law and its application by passing the OAB exams, the national bar exams. The

OAB exams therefore provide an excellent benchmark for the performance of legal

information systems since passing the exam would arguably signal that the

system has acquired capacity of legal reasoning comparable to that of a human

lawyer. This article describes the construction of a new data set and some

preliminary experiments on it, treating the problem of finding the

justification for the answers to questions. The results provide a baseline

performance measure against which to evaluate future improvements. We discuss

the reasons to the poor performance and propose next steps.

Standard Direction of Arrival (DOA) estimation methods are typically derived based on the Gaussian noise assumption, making them highly sensitive to outliers. Therefore, in the presence of impulsive noise, the performance of these methods may significantly deteriorate. In this paper, we model impulsive noise as Gaussian noise mixed with sparse outliers. By exploiting their statistical differences, we propose a novel DOA estimation method based on sparse signal recovery (SSR). Furthermore, to address the issue of grid mismatch, we utilize an alternating optimization approach that relies on the estimated outlier matrix and the on-grid DOA estimates to obtain the off-grid DOA estimates. Simulation results demonstrate that the proposed method exhibits robustness against large outliers.

Many classical problems in theoretical computer science involve norm, even if

implicitly; for example, both XOS functions and downward-closed sets are

equivalent to some norms. The last decade has seen a lot of interest in

designing algorithms beyond the standard ℓp norms ∥⋅∥p. Despite

notable advancements, many existing methods remain tailored to specific

problems, leaving a broader applicability to general norms less understood.

This paper investigates the intrinsic properties of ℓp norms that

facilitate their widespread use and seeks to abstract these qualities to a more

general setting.

We identify supermodularity -- often reserved for combinatorial set functions

and characterized by monotone gradients -- as a defining feature beneficial for

∥⋅∥pp. We introduce the notion of p-supermodularity for norms,

asserting that a norm is p-supermodular if its pth power function

exhibits supermodularity. The association of supermodularity with norms offers

a new lens through which to view and construct algorithms.

Our work demonstrates that for a large class of problems p-supermodularity

is a sufficient criterion for developing good algorithms. This is either by

reframing existing algorithms for problems like Online Load-Balancing and

Bandits with Knapsacks through a supermodular lens, or by introducing novel

analyses for problems such as Online Covering, Online Packing, and Stochastic

Probing. Moreover, we prove that every symmetric norm can be approximated by a

p-supermodular norm. Together, these recover and extend several results from

the literature, and support p-supermodularity as a unified theoretical

framework for optimization challenges centered around norm-related problems.

In this paper we show that corpus-level aggregation hinders considerably the capability of lexical metrics to accurately evaluate machine translation (MT) systems. With empirical experiments we demonstrate that averaging individual segment-level scores can make metrics such as BLEU and chrF correlate much stronger with human judgements and make them behave considerably more similar to neural metrics such as COMET and BLEURT. We show that this difference exists because corpus- and segment-level aggregation differs considerably owing to the classical average of ratio versus ratio of averages Mathematical problem. Moreover, as we also show, such difference affects considerably the statistical robustness of corpus-level aggregation. Considering that neural metrics currently only cover a small set of sufficiently-resourced languages, the results in this paper can help make the evaluation of MT systems for low-resource languages more trustworthy.

Employing a recent technique which allows the representation of nonstationary data by means of a juxtaposition of locally stationary patches of different length, we introduce a comprehensive analysis of the key observables in a financial market: the trading volume and the price fluctuations. From the segmentation procedure we are able to introduce a quantitative description of a group of statistical features (stylizes facts) of the trading volume and price fluctuations, namely the tails of each distribution, the U-shaped profile of the volume in a trading session and the evolution of the trading volume autocorrelation function. The segmentation of the trading volume series provides evidence of slow evolution of the fluctuating parameters of each patch, pointing to the mixing scenario. Assuming that long-term features are the outcome of a statistical mixture of simple local forms, we test and compare different probability density functions to provide the long-term distribution of the trading volume, concluding that the log-normal gives the best agreement with the empirical distribution. Moreover, the segmentation of the magnitude price fluctuations are quite different from the results for the trading volume, indicating that changes in the statistics of price fluctuations occur at a faster scale than in the case of trading volume.

22 Jan 2018

A defining feature of a symmetry protected topological phase (SPT) in

one-dimension is the degeneracy of the Schmidt values for any given

bipartition. For the system to go through a topological phase transition

separating two SPTs, the Schmidt values must either split or cross at the

critical point in order to change their degeneracies. A renormalization group

(RG) approach based on this splitting or crossing is proposed, through which we

obtain an RG flow that identifies the topological phase transitions in the

parameter space. Our approach can be implemented numerically in an efficient

manner, for example, using the matrix product state formalism, since only the

largest first few Schmidt values need to be calculated with sufficient

accuracy. Using several concrete models, we demonstrate that the critical

points and fixed points of the RG flow coincide with the maxima and minima of

the entanglement entropy, respectively, and the method can serve as a

numerically efficient tool to analyze interacting SPTs in the parameter space.

01 Jun 2024

Given any algorithm for convex optimization that uses exact first-order

information (i.e., function values and subgradients), we show how to use such

an algorithm to solve the problem with access to inexact first-order

information. This is done in a ``black-box'' manner without knowledge of the

internal workings of the algorithm. This complements previous work that

considers the performance of specific algorithms like (accelerated) gradient

descent with inexact information. In particular, our results apply to a wider

range of algorithms beyond variants of gradient descent, e.g., projection-free

methods, cutting-plane methods, or any other first-order methods formulated in

the future. Further, they also apply to algorithms that handle structured

nonconvexities like mixed-integer decision variables.

There are no more papers matching your filters at the moment.