Universidade Federal de Pernambuco

Power line maintenance and inspection are essential to avoid power supply interruptions, reducing its high social and financial impacts yearly. Automating power line visual inspections remains a relevant open problem for the industry due to the lack of public real-world datasets of power line components and their various defects to foster new research. This paper introduces InsPLAD, a Power Line Asset Inspection Dataset and Benchmark containing 10,607 high-resolution Unmanned Aerial Vehicles colour images. The dataset contains seventeen unique power line assets captured from real-world operating power lines. Additionally, five of those assets present six defects: four of which are corrosion, one is a broken component, and one is a bird's nest presence. All assets were labelled according to their condition, whether normal or the defect name found on an image level. We thoroughly evaluate state-of-the-art and popular methods for three image-level computer vision tasks covered by InsPLAD: object detection, through the AP metric; defect classification, through Balanced Accuracy; and anomaly detection, through the AUROC metric. InsPLAD offers various vision challenges from uncontrolled environments, such as multi-scale objects, multi-size class instances, multiple objects per image, intra-class variation, cluttered background, distinct point-of-views, perspective distortion, occlusion, and varied lighting conditions. To the best of our knowledge, InsPLAD is the first large real-world dataset and benchmark for power line asset inspection with multiple components and defects for various computer vision tasks, with a potential impact to improve state-of-the-art methods in the field. It will be publicly available in its integrity on a repository with a thorough description. It can be found at this https URL.

15 Oct 2025

The generalized Marshall-Olkin Lomax distribution is introduced, and its properties are easily obtained from those of the Lomax distribution. A regression model for censored data is proposed. The parameters are estimated through maximum likelihood, and consistency is verified by simulations. Three real datasets are selected to illustrate the superiority of the new models compared to those from two well-known classes.

13 Feb 2023

This research introduces new decomposition schemes for n-qubit multi-controlled Special Unitary (SU(2)) single-qubit gates, reducing CNOT gate count by 28-30% for general SU(2) gates and 43-46% for those with real-valued diagonals compared to state-of-the-art methods. These optimizations enhance circuit depth and fidelity on Noisy Intermediate-Scale Quantum (NISQ) devices, as demonstrated by higher-fidelity sparse quantum state preparation on IBM hardware.

Most reinforcement learning algorithms take advantage of an experience replay buffer to repeatedly train on samples the agent has observed in the past. Not all samples carry the same amount of significance and simply assigning equal importance to each of the samples is a naïve strategy. In this paper, we propose a method to prioritize samples based on how much we can learn from a sample. We define the learn-ability of a sample as the steady decrease of the training loss associated with this sample over time. We develop an algorithm to prioritize samples with high learn-ability, while assigning lower priority to those that are hard-to-learn, typically caused by noise or stochasticity. We empirically show that our method is more robust than random sampling and also better than just prioritizing with respect to the training loss, i.e. the temporal difference loss, which is used in prioritized experience replay.

Maps are essential to news media as they provide a familiar way to convey spatial context and present engaging narratives. However, the design of journalistic maps may be challenging, as editorial teams need to balance multiple aspects, such as aesthetics, the audience's expected data literacy, tight publication deadlines, and the team's technical skills. Data journalists often come from multiple areas and lack a cartography, data visualization, and data science background, limiting their competence in creating maps. While previous studies have examined spatial visualizations in data stories, this research seeks to gain a deeper understanding of the map design process employed by news outlets. To achieve this, we strive to answer two specific research questions: what is the design space of journalistic maps? and how do editorial teams produce journalistic map articles? To answer the first one, we collected and analyzed a large corpus of 462 journalistic maps used in news articles from five major news outlets published over three months. As a result, we created a design space comprised of eight dimensions that involved both properties describing the articles' aspects and the visual/interactive features of maps. We approach the second research question via semi-structured interviews with four data journalists who create data-driven articles daily. Through these interviews, we identified the most common design rationales made by editorial teams and potential gaps in current practices. We also collected the practitioners' feedback on our design space to externally validate it. With these results, we aim to provide researchers and journalists with empirical data to design and study journalistic maps.

This paper presents a hierarchical reinforcement learning approach using the Soft Actor-Critic algorithm for robot motion planning in dynamic RoboCup Small Size League environments. It introduces techniques like Frame Skip and Conditioning for Action Policy Smoothness (CAPS) to improve action stability, demonstrating successful simulation-to-reality transfer with up to a 70% reduction in action instability and robust obstacle avoidance on physical robots.

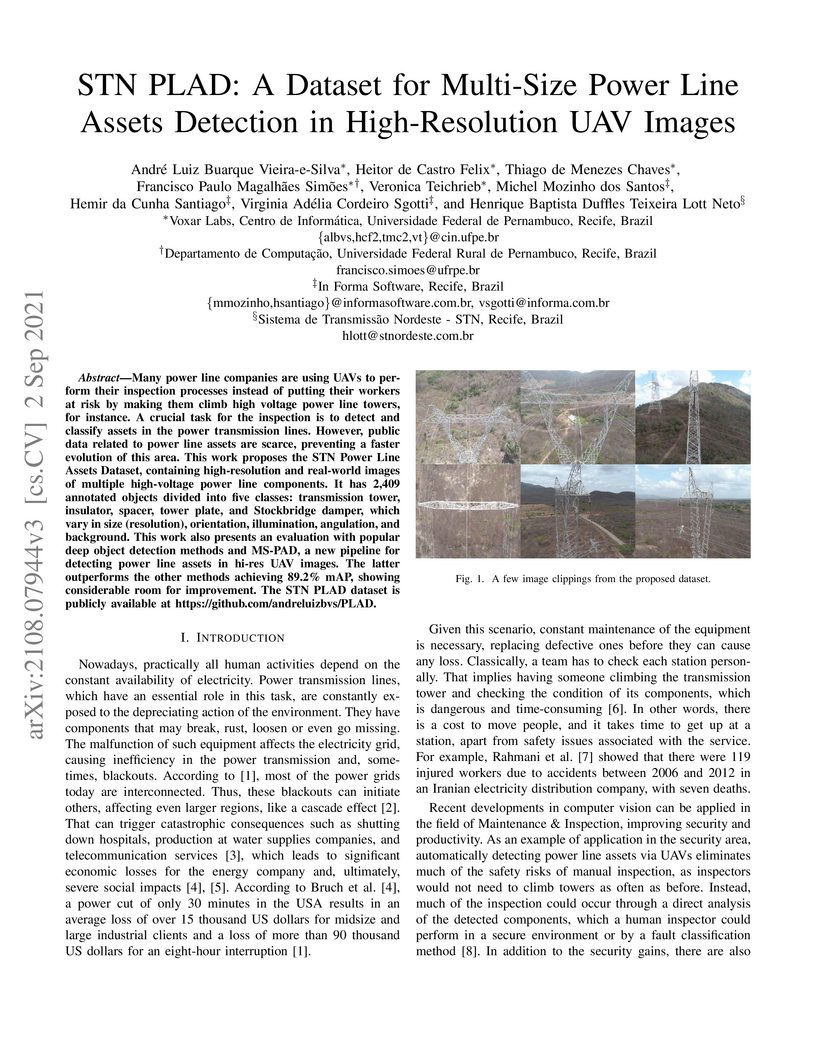

Many power line companies are using UAVs to perform their inspection processes instead of putting their workers at risk by making them climb high voltage power line towers, for instance. A crucial task for the inspection is to detect and classify assets in the power transmission lines. However, public data related to power line assets are scarce, preventing a faster evolution of this area. This work proposes the Power Line Assets Dataset, containing high-resolution and real-world images of multiple high-voltage power line components. It has 2,409 annotated objects divided into five classes: transmission tower, insulator, spacer, tower plate, and Stockbridge damper, which vary in size (resolution), orientation, illumination, angulation, and background. This work also presents an evaluation with popular deep object detection methods, showing considerable room for improvement. The STN PLAD dataset is publicly available at this https URL.

12 Nov 2024

We employ the isomonodromic method to study linear scalar massive

perturbations of Kerr black holes for generic scalar masses Mμ and generic

black hole spins a/M. We find that the longest-living quasinormal mode and

the first overtone coincide for (Mμ)c≃0.3704981 and $(a/M)_c\simeq

0.9994660$. We also show that the longest-living mode and the first overtone

change continuously into each other as we vary the parameters around the point

of degeneracy, providing evidence for the existence of a geometric phase around

an exceptional point. We interpret our findings through a thermodynamic

analogy.

Dataset scaling, also known as normalization, is an essential preprocessing step in a machine learning pipeline. It is aimed at adjusting attributes scales in a way that they all vary within the same range. This transformation is known to improve the performance of classification models, but there are several scaling techniques to choose from, and this choice is not generally done carefully. In this paper, we execute a broad experiment comparing the impact of 5 scaling techniques on the performances of 20 classification algorithms among monolithic and ensemble models, applying them to 82 publicly available datasets with varying imbalance ratios. Results show that the choice of scaling technique matters for classification performance, and the performance difference between the best and the worst scaling technique is relevant and statistically significant in most cases. They also indicate that choosing an inadequate technique can be more detrimental to classification performance than not scaling the data at all. We also show how the performance variation of an ensemble model, considering different scaling techniques, tends to be dictated by that of its base model. Finally, we discuss the relationship between a model's sensitivity to the choice of scaling technique and its performance and provide insights into its applicability on different model deployment scenarios. Full results and source code for the experiments in this paper are available in a GitHub repository.\footnote{this https URL\_matters}

15 Sep 2025

Many-body interactions strongly influence the structure, stability, and dynamics of condensed-matter systems, from atomic lattices to interacting quasi-particles such as superconducting vortices. Here, we investigate theoretically the pairwise and many-body interaction terms among skyrmions in helimagnets, considering both the ferromagnetic and conical spin backgrounds. Using micromagnetic simulations, we separate the exchange, Dzyaloshinskii-Moriya, and Zeeman contributions to the skyrmion-skyrmion pair potential, and show that the binding energy of skyrmions within the conical phase depends strongly on the film thickness. For small skyrmion clusters in the conical phase, three-body interactions make a substantial contribution to the cohesive energy, comparable to that of pairwise terms, while four-body terms become relevant only at small magnetic fields. As the system approaches the ferromagnetic phase, these higher-order contributions vanish, and the interactions become essentially pairwise. Our results indicate that realistic models of skyrmion interactions in helimagnets in the conical phase must incorporate many-body terms to accurately capture the behavior of skyrmion crystals and guide strategies for controlling skyrmion phases and dynamics.

ETH Zurich

ETH Zurich Chinese Academy of SciencesUniversity of Stuttgart

Chinese Academy of SciencesUniversity of Stuttgart National University of Singapore

National University of Singapore Stanford University

Stanford University City University of Hong Kong

City University of Hong Kong Peking UniversityJinan UniversitySoutheast University

Peking UniversityJinan UniversitySoutheast University Australian National UniversityKing Abdullah University of Science and TechnologyMax Planck Institute for Solid State ResearchPohang University of Science and TechnologyNational Cheng Kung UniversityUniversidade Federal de PernambucoUniversity of the WitwatersrandÉcole Polytechnique Fédérale de LausanneSamsungPohang University of Science and Technology (POSTECH)ICREA-Institució Catalana de Recerca i Estudis AvançatsICFO-Institut de Ciencies Fotoniques, The Barcelona Institute of Science and TechnologyUniversity of RostockLeibniz-Institute of Photonic TechnologyUniversidad de La FronteraGraduate Center of the City University of New YorkChangchun Institute of Optics, Fine Mechanics and Physics, Chinese Academy of SciencesAdvanced Science Research Center, City University of New YorkLudwig-Maximilians-Universtität MünchenThe City College of New York (USA)RomaTre UniversityNational Yang Ming-Chiao Tung UniversityBeijing Institute of Technology

Australian National UniversityKing Abdullah University of Science and TechnologyMax Planck Institute for Solid State ResearchPohang University of Science and TechnologyNational Cheng Kung UniversityUniversidade Federal de PernambucoUniversity of the WitwatersrandÉcole Polytechnique Fédérale de LausanneSamsungPohang University of Science and Technology (POSTECH)ICREA-Institució Catalana de Recerca i Estudis AvançatsICFO-Institut de Ciencies Fotoniques, The Barcelona Institute of Science and TechnologyUniversity of RostockLeibniz-Institute of Photonic TechnologyUniversidad de La FronteraGraduate Center of the City University of New YorkChangchun Institute of Optics, Fine Mechanics and Physics, Chinese Academy of SciencesAdvanced Science Research Center, City University of New YorkLudwig-Maximilians-Universtität MünchenThe City College of New York (USA)RomaTre UniversityNational Yang Ming-Chiao Tung UniversityBeijing Institute of TechnologyScientific research needs a new system that appropriately values science and scientists. Key innovations, within institutions and funding agencies, are driving better assessment of research, with open knowledge and FAIR (findable, accessible, interoperable, and reusable) principles as central pillars. Furthermore, coalitions, agreements, and robust infrastructures have emerged to promote more accurate assessment metrics and efficient knowledge sharing. However, despite these efforts, the system still relies on outdated methods where standardized metrics such as h-index and journal impact factor dominate evaluations. These metrics have had the unintended consequence of pushing researchers to produce more outputs at the expense of integrity and reproducibility. In this community paper, we bring together a global community of researchers, funding institutions, industrial partners, and publishers from 14 different countries across the 5 continents. We aim at collectively envision an evolved knowledge sharing and research evaluation along with the potential positive impact on every stakeholder involved. We imagine these ideas to set the groundwork for a cultural change to redefine a more fair and equitable scientific landscape.

This paper proposes a question-answering system that can answer questions whose supporting evidence is spread over multiple (potentially long) documents. The system, called Visconde, uses a three-step pipeline to perform the task: decompose, retrieve, and aggregate. The first step decomposes the question into simpler questions using a few-shot large language model (LLM). Then, a state-of-the-art search engine is used to retrieve candidate passages from a large collection for each decomposed question. In the final step, we use the LLM in a few-shot setting to aggregate the contents of the passages into the final answer. The system is evaluated on three datasets: IIRC, Qasper, and StrategyQA. Results suggest that current retrievers are the main bottleneck and that readers are already performing at the human level as long as relevant passages are provided. The system is also shown to be more effective when the model is induced to give explanations before answering a question. Code is available at \url{this https URL}.

15 Sep 2025

The critical brain hypothesis posits that neural systems operate near a phase transition, optimizing the processing of information. While scale invariance and non-Gaussian dynamics--hallmarks of criticality--have been observed in brain activity, a direct link between criticality and behavioral performance remains unexplored. Here, we use a phenomenological renormalization group approach to examine neuronal activity in the primary visual cortex of mice performing a visual recognition task. We show that nontrivial scaling in neuronal activity is associated with enhanced task performance, with pronounced scaling observed during successful task completion. When rewards were removed or non-natural stimuli presented, scaling signatures diminished. These results suggest that critical dynamics in the brain is crucial for optimizing behavioral outcomes, offering new insights into the functional role of criticality in cortical processing.

26 Sep 2025

Open Quantum Walks (OQW) are a type of quantum walk governed by the system's interaction with its environment. We explore the time evolution and the limit behavior of the OQW framework for Quantum Computation and show how we can represent random unitary quantum channels, such as the dephasing and depolarizing channels, in this model. We also develop a simulation protocol with circuit representation for this model, which is heavily inspired by the fact that graphs represent OQW and are, thereby, local (meaning that the state in a particular node interacts only with its neighborhood). We obtain asymptotic advantages in the system's dimension, circuit depth, and CNOT count compared to other simulation methods.

Given graphs G and H, we say that G is H-good if the Ramsey number R(G,H) equals the trivial lower bound (∣G∣−1)(χ(H)−1)+σ(H), where χ(H) denotes the usual chromatic number of H, and σ(H) denotes the minimum size of a color class in a χ(H)-coloring of H. Pokrovskiy and Sudakov [Ramsey goodness of paths. Journal of Combinatorial Theory, Series B, 122:384-390, 2017.] proved that Pn is H-good whenever n≥4∣H∣. In this paper, given ε>0, we show that if H satisfy a special unbalance condition, then Pn is H-good whenever n≥(2+ε)∣H∣. More specifically, we show that if m1,…,mk are such that ε⋅mi≥2mi−12 for 2≤i≤k, and n≥(2+ε)(m1+⋯+mk), then Pn is Km1,…,mk-good.

We report a comprehensive experimental investigation of orbital-to-charge conversion in metallic and semiconductor materials, emphasizing the fundamental roles of the inverse orbital Hall effect (IOHE) and the inverse orbital Rashba effect. Using spin pumping driven by ferromagnetic resonance (SP-FMR) and the spin Seebeck effect (SSE), we demonstrate efficient orbital current generation and detection in YIG/Pt/NM structures, where NM is either a metal or a semiconductor. A central finding is the dominance of orbital contributions over spin-related effects, even in systems with weak spin-orbit coupling. In particular, a large enhancement of the SP-FMR and SSE signals is observed in the presence of naturally oxidized Cu in different heterostructures. Furthermore, we identify positive and negative IOHE signals in Ti and Ge, respectively, and extract orbital diffusion lengths in both systems using a diffusive model. Our results confirm the presence of orbital transport and offer valuable insights that may guide the further development of orbitronics.

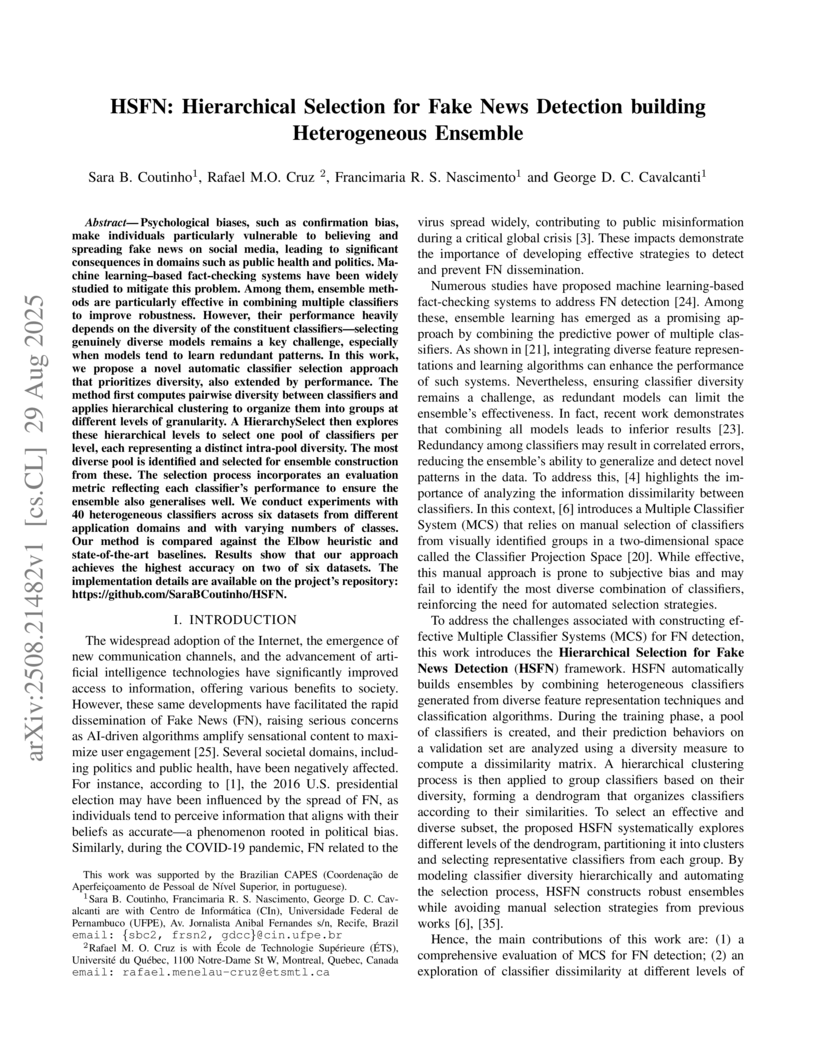

Psychological biases, such as confirmation bias, make individuals particularly vulnerable to believing and spreading fake news on social media, leading to significant consequences in domains such as public health and politics. Machine learning-based fact-checking systems have been widely studied to mitigate this problem. Among them, ensemble methods are particularly effective in combining multiple classifiers to improve robustness. However, their performance heavily depends on the diversity of the constituent classifiers-selecting genuinely diverse models remains a key challenge, especially when models tend to learn redundant patterns. In this work, we propose a novel automatic classifier selection approach that prioritizes diversity, also extended by performance. The method first computes pairwise diversity between classifiers and applies hierarchical clustering to organize them into groups at different levels of granularity. A HierarchySelect then explores these hierarchical levels to select one pool of classifiers per level, each representing a distinct intra-pool diversity. The most diverse pool is identified and selected for ensemble construction from these. The selection process incorporates an evaluation metric reflecting each classifiers's performance to ensure the ensemble also generalises well. We conduct experiments with 40 heterogeneous classifiers across six datasets from different application domains and with varying numbers of classes. Our method is compared against the Elbow heuristic and state-of-the-art baselines. Results show that our approach achieves the highest accuracy on two of six datasets. The implementation details are available on the project's repository: this https URL .

24 Aug 2024

This work extends the Rose–Terao–Yuzvinsky (RTY) theorem by investigating the homological depth of gradient ideals for products of general forms of arbitrary degrees and classifying the RTY-property for arbitrary forms. It provides new matrix-theoretic proofs for generic hyperplane arrangements and offers explicit free resolutions and homological classifications for various classes of polynomials, including products of smooth quadrics.

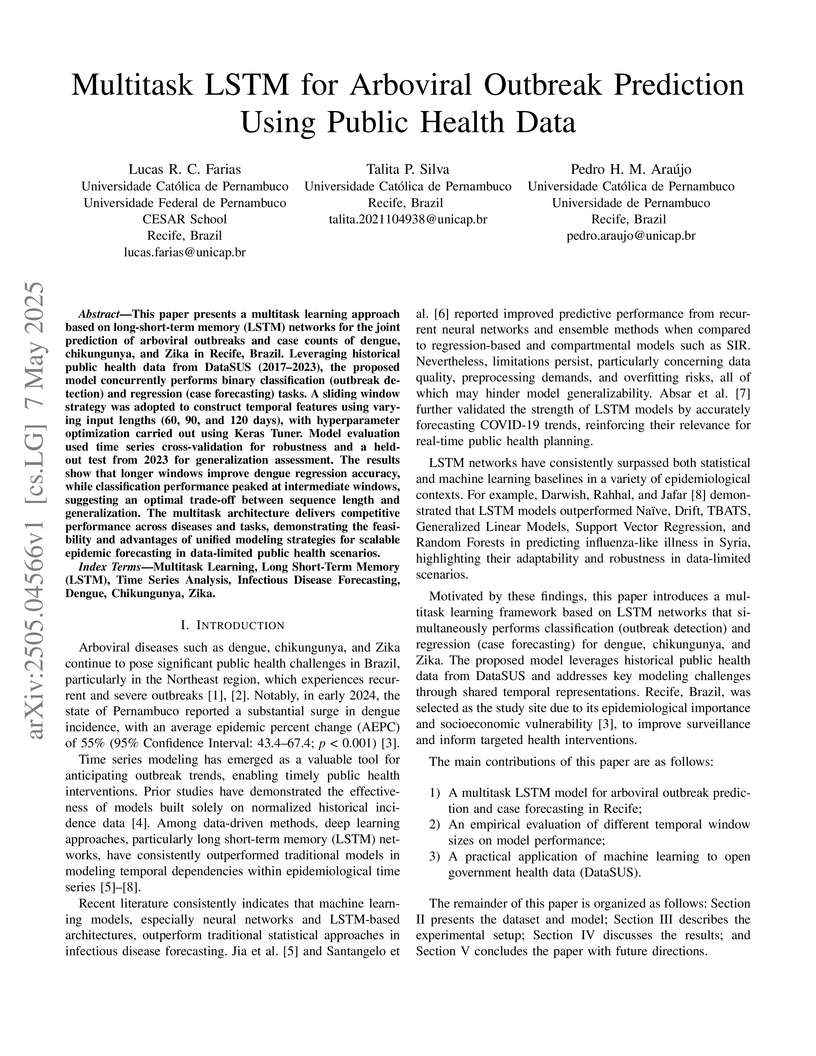

This paper presents a multitask learning approach based on long-short-term

memory (LSTM) networks for the joint prediction of arboviral outbreaks and case

counts of dengue, chikungunya, and Zika in Recife, Brazil. Leveraging

historical public health data from DataSUS (2017-2023), the proposed model

concurrently performs binary classification (outbreak detection) and regression

(case forecasting) tasks. A sliding window strategy was adopted to construct

temporal features using varying input lengths (60, 90, and 120 days), with

hyperparameter optimization carried out using Keras Tuner. Model evaluation

used time series cross-validation for robustness and a held-out test from 2023

for generalization assessment. The results show that longer windows improve

dengue regression accuracy, while classification performance peaked at

intermediate windows, suggesting an optimal trade-off between sequence length

and generalization. The multitask architecture delivers competitive performance

across diseases and tasks, demonstrating the feasibility and advantages of

unified modeling strategies for scalable epidemic forecasting in data-limited

public health scenarios.

Handwritten Mathematical Expression Recognition (HMER) is a challenging task

with many educational applications. Recent methods for HMER have been developed

for complex mathematical expressions in standard horizontal format. However,

solutions for elementary mathematical expression, such as vertical addition and

subtraction, have not been explored in the literature. This work proposes a new

handwritten elementary mathematical expression dataset composed of addition and

subtraction expressions in a vertical format. We also extended the MNIST

dataset to generate artificial images with this structure. Furthermore, we

proposed a solution for offline HMER, able to recognize vertical addition and

subtraction expressions. Our analysis evaluated the object detection algorithms

YOLO v7, YOLO v8, YOLO-NAS, NanoDet and FCOS for identifying the mathematical

symbols. We also proposed a transcription method to map the bounding boxes from

the object detection stage to a mathematical expression in the LATEX markup

sequence. Results show that our approach is efficient, achieving a high

expression recognition rate. The code and dataset are available at

this https URL

There are no more papers matching your filters at the moment.