University of Idaho

The KnoVo framework quantifies multi-dimensional research novelty and tracks knowledge evolution by using open-source LLMs to dynamically extract specific contribution dimensions from papers and perform fine-grained comparisons. It generates overall and temporal novelty scores, and reconstructs evolutionary pathways of ideas within specific dimensions, offering a content-aware, automated approach to scientific literature analysis.

The circuit complexity of time-evolved pure quantum states grows linearly in time for an exponentially long time. This behavior has been proven in certain models, is conjectured to hold for generic quantum many-body systems, and is believed to be dual to the long-time growth of black hole interiors in AdS/CFT. Achieving a similar understanding for mixed states remains an important problem. In this work, we study the circuit complexity of time-evolved subsystems of pure quantum states. We find that for greater-than-half subsystem sizes, the complexity grows linearly in time for an exponentially long time, similarly to that of the full state. However, for less-than-half subsystem sizes, the complexity rises and then falls, returning to low complexity as the subsystem equilibrates. Notably, the transition between these two regimes occurs sharply at half system size. We use holographic duality to map out this picture of subsystem complexity dynamics and rigorously prove the existence of the sharp transition in random quantum circuits. Furthermore, we use holography to predict features of complexity growth at finite temperature that lie beyond the reach of techniques based on random quantum circuits. In particular, at finite temperature, we argue for an additional sharp transition at a critical less-than-half subsystem size. Below this critical value, the subsystem complexity saturates nearly instantaneously rather than exhibiting a rise and fall. This novel phenomenon, as well as an analogous transition above half system size, provides a target for future studies based on rigorous methods.

21 Jun 2025

The exponential growth of scientific literature challenges researchers extracting and synthesizing knowledge. Traditional search engines return many sources without direct, detailed answers, while general-purpose LLMs may offer concise responses that lack depth or omit current information. LLMs with search capabilities are also limited by context window, yielding short, incomplete answers. This paper introduces WISE (Workflow for Intelligent Scientific Knowledge Extraction), a system addressing these limits by using a structured workflow to extract, refine, and rank query-specific knowledge. WISE uses an LLM-powered, tree-based architecture to refine data, focusing on query-aligned, context-aware, and non-redundant information. Dynamic scoring and ranking prioritize unique contributions from each source, and adaptive stopping criteria minimize processing overhead. WISE delivers detailed, organized answers by systematically exploring and synthesizing knowledge from diverse sources. Experiments on HBB gene-associated diseases demonstrate WISE reduces processed text by over 80% while achieving significantly higher recall over baselines like search engines and other LLM-based approaches. ROUGE and BLEU metrics reveal WISE's output is more unique than other systems, and a novel level-based metric shows it provides more in-depth information. We also explore how the WISE workflow can be adapted for diverse domains like drug discovery, material science, and social science, enabling efficient knowledge extraction and synthesis from unstructured scientific papers and web sources.

High-fidelity gravitational waveform models are essential for realizing the scientific potential of next-generation gravitational-wave observatories. While highly accurate, state-of-the-art models often rely on extensive phenomenological calibrations to numerical relativity (NR) simulations for the late-inspiral and merger phases, which can limit physical insight and extrapolation to regions where NR data is sparse. To address this, we introduce the Spinning Effective-to-Backwards One Body (SEBOB) formalism, a hybrid approach that combines the well-established Effective-One-Body (EOB) framework with the analytically-driven Backwards-One-Body (BOB) model, which describes the merger-ringdown from first principles as a perturbation of the final remnant black hole. We present two variants building on the state-of-the-art SEOBNRv5HM model: \texttt{seobnrv5_nrnqc_bob}, which retains standard NR-calibrated non-quasi-circular (NQC) corrections and attaches a BOB-based merger-ringdown; and a more ambitious variant, \texttt{seobnrv5_bob}, which uses BOB to also inform the NQC corrections, thereby reducing reliance on NR fitting and enabling higher-order (C2) continuity by construction. Implemented in the open-source NRPy framework for optimized C-code generation, the SEBOB model is transparent, extensible, and computationally efficient. By comparing our waveforms to a large catalog of NR simulations, we demonstrate that SEBOB yields accuracies comparable to the highly-calibrated SEOBNRv5HM model, providing a viable pathway towards more physically motivated and robust waveform models.

Life sciences research increasingly requires identifying, accessing, and effectively processing data from an ever-evolving array of information sources on the Linked Open Data (LOD) network. This dynamic landscape places a significant burden on researchers, as the quality of query responses depends heavily on the selection and semantic integration of data sources, processes that are often labor-intensive, error-prone, and costly. While the adoption of FAIR (Findable, Accessible, Interoperable, and Reusable) data principles has aimed to address these challenges, barriers to efficient and accurate scientific data processing persist.

In this paper, we introduce FAIRBridge, an experimental natural language-based query processing system designed to empower scientists to discover, access, and query biological databases, even when they are not FAIR-compliant. FAIRBridge harnesses the capabilities of AI to interpret query intents, map them to relevant databases described in scientific literature, and generate executable queries via intelligent resource access plans. The system also includes robust tools for mitigating low-quality query processing, ensuring high fidelity and responsiveness in the information delivered.

FAIRBridge's autonomous query processing framework enables users to explore alternative data sources, make informed choices at every step, and leverage community-driven crowd curation when needed. By providing a user-friendly, automated hypothesis-testing platform in natural English, FAIRBridge significantly enhances the integration and processing of scientific data, offering researchers a powerful new tool for advancing their inquiries.

Effective clinical deployment of deep learning models in healthcare demands high generalization performance to ensure accurate diagnosis and treatment planning. In recent years, significant research has focused on improving the generalization of deep learning models by regularizing the sharpness of the loss landscape. Among the optimization approaches that explicitly minimize sharpness, Sharpness-Aware Minimization (SAM) has shown potential in enhancing generalization performance on general domain image datasets. This success has led to the development of several advanced sharpness-based algorithms aimed at addressing the limitations of SAM, such as Adaptive SAM, Surrogate-Gap SAM, Weighted SAM, and Curvature Regularized SAM. These sharpness-based optimizers have shown improvements in model generalization compared to conventional stochastic gradient descent optimizers and their variants on general domain image datasets, but they have not been thoroughly evaluated on medical images. This work provides a review of recent sharpness-based methods for improving the generalization of deep learning networks and evaluates the methods' performance on medical breast ultrasound images. Our findings indicate that the initial SAM method successfully enhances the generalization of various deep learning models. While Adaptive SAM improves generalization of convolutional neural networks, it fails to do so for vision transformers. Other sharpness-based optimizers, however, do not demonstrate consistent results. The results reveal that, contrary to findings in the non-medical domain, SAM is the only recommended sharpness-based optimizer that consistently improves generalization in medical image analysis, and further research is necessary to refine the variants of SAM to enhance generalization performance in this field.
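For readers unfamiliar with SAM, the two-step update it is generally described with can be sketched as follows. This is a minimal illustration, not the code evaluated in the paper: the function name `sam_step`, the toy gradient function, and the values of `lr` and `rho` are all assumptions made for the example.

```python
import math

def sam_step(w, grad_fn, lr=0.1, rho=0.05):
    """One SAM-style update on a list of weights (illustrative sketch).

    grad_fn, lr and rho are hypothetical names chosen for this example.
    """
    g = grad_fn(w)
    norm = math.sqrt(sum(gi * gi for gi in g))
    if norm == 0.0:
        return list(w)  # already at a stationary point, nothing to do
    # Ascent step: move to the nearby point of (approximately) highest loss.
    w_adv = [wi + rho * gi / norm for wi, gi in zip(w, g)]
    # Descent step: apply the gradient taken at the perturbed point
    # to the original weights.
    g_adv = grad_fn(w_adv)
    return [wi - lr * gi for wi, gi in zip(w, g_adv)]

def toy_grad(w):
    # Gradient of the toy loss 0.5 * ||w||^2 (assumed for illustration).
    return list(w)

w_next = sam_step([3.0, 4.0], toy_grad, lr=0.1, rho=0.5)
```

Each update needs two gradient evaluations, which is the extra computational cost usually attributed to SAM relative to plain gradient descent.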

Recent research on the application of remote sensing and deep learning-based analysis in precision agriculture demonstrated a potential for improved crop management and reduced environmental impacts of agricultural production. Despite the promising results, the practical relevance of these technologies for field deployment requires novel algorithms that are customized for analysis of agricultural images and robust to implementation on natural field imagery. The paper presents an approach for analyzing aerial images of a potato (Solanum tuberosum L.) crop using deep neural networks. The main objective is to demonstrate automated spatial recognition of healthy vs. stressed crop at a plant level. Specifically, we examine premature plant senescence resulting in drought stress on Russet Burbank potato plants. We propose a novel deep learning (DL) model for detecting crop stress, named Retina-UNet-Ag. The proposed architecture is a variant of Retina-UNet and includes connections from low-level semantic representation maps to the feature pyramid network. The paper also introduces a dataset of aerial field images acquired with a Parrot Sequoia camera. The dataset includes manually annotated bounding boxes of healthy and stressed plant regions. Experimental validation demonstrated the ability for distinguishing healthy and stressed plants in field images, achieving an average dice score coefficient (DSC) of 0.74. A comparison to related state-of-the-art DL models for object detection revealed that the presented approach is effective for this task. The proposed method is conducive toward the assessment and recognition of potato crop stress in aerial field images collected under natural conditions.

Black hole - neutron star (BHNS) mergers are a promising target of current gravitational-wave (GW) and electromagnetic (EM) searches, being the putative origin of ultra-relativistic jets, gamma-ray emission, and r-process nucleosynthesis. However, the possibility of any EM emission accompanying a GW detection crucially depends on the amount of baryonic mass left after the coalescence, i.e. whether the neutron star (NS) undergoes a 'tidal disruption' or 'plunges' into the black hole (BH) while remaining essentially intact. As the first of a series of two papers, we here report the most systematic investigation to date of quasi-equilibrium sequences of initial data across a range of stellar compactnesses $\mathcal{C}$, mass ratios $q$, BH spins $\chi_{_{\rm BH}}$, and equations of state satisfying all present observational constraints. Using an improved version of the elliptic initial-data solver FUKA, we have computed more than 1000 individual configurations and estimated the onset of mass-shedding or the crossing of the innermost stable circular orbit in terms of the corresponding characteristic orbital angular velocities $\Omega_{_{\rm MS}}$ and $\Omega_{_{\rm ISCO}}$ as a function of $\mathcal{C}$, $q$, and $\chi_{_{\rm BH}}$. To the best of our knowledge, this is the first time that the dependence of these frequencies on the BH spin is investigated. In turn, by setting $\Omega_{_{\rm MS}} = \Omega_{_{\rm ISCO}}$ it is possible to determine the separatrix between the 'tidal disruption' or 'plunge' scenarios as a function of the fundamental parameters of these systems, namely, $q$, $\mathcal{C}$, and $\chi_{_{\rm BH}}$. Finally, we present a novel analysis of quantities related to the tidal forces in the initial data and discuss their dependence on spin and separation.

23 Jan 2020

Computer-aided assessment of physical rehabilitation entails evaluation of patient performance in completing prescribed rehabilitation exercises, based on processing movement data captured with a sensory system. Despite the essential role of rehabilitation assessment toward improved patient outcomes and reduced healthcare costs, existing approaches lack versatility, robustness, and practical relevance. In this paper, we propose a deep learning-based framework for automated assessment of the quality of physical rehabilitation exercises. The main components of the framework are metrics for quantifying movement performance, scoring functions for mapping the performance metrics into numerical scores of movement quality, and deep neural network models for generating quality scores of input movements via supervised learning. The proposed performance metric is defined based on the log-likelihood of a Gaussian mixture model, and encodes low-dimensional data representation obtained with a deep autoencoder network. The proposed deep spatio-temporal neural network arranges data into temporal pyramids, and exploits the spatial characteristics of human movements by using sub-networks to process joint displacements of individual body parts. The presented framework is validated using a dataset of ten rehabilitation exercises. The significance of this work is that it is the first that implements deep neural networks for assessment of rehabilitation performance.

27 Jul 2025

The integration of heterogeneous multi-omics datasets at a systems level remains a central challenge for developing analytical and computational models in precision cancer diagnostics. This paper introduces Multi-Omics Graph Kolmogorov-Arnold Network (MOGKAN), a deep learning framework that utilizes messenger-RNA, micro-RNA sequences, and DNA methylation samples together with Protein-Protein Interaction (PPI) networks for cancer classification across 31 different cancer types. The proposed approach combines differential gene expression with DESeq2, Linear Models for Microarray (LIMMA), and Least Absolute Shrinkage and Selection Operator (LASSO) regression to reduce multi-omics data dimensionality while preserving relevant biological features. The model architecture is based on the Kolmogorov-Arnold theorem principle and uses trainable univariate functions to enhance interpretability and feature analysis. MOGKAN achieves classification accuracy of 96.28 percent and exhibits low experimental variability in comparison to related deep learning-based models. The biomarkers identified by MOGKAN were validated as cancer-related markers through Gene Ontology (GO) and Kyoto Encyclopedia of Genes and Genomes (KEGG) enrichment analysis. By integrating multi-omics data with graph-based deep learning, our proposed approach demonstrates robust predictive performance and interpretability with potential to enhance the translation of complex multi-omics data into clinically actionable cancer diagnostics.

With the growing development and deployment of large language models (LLMs) in both industrial and academic fields, their security and safety concerns have become increasingly critical. However, recent studies indicate that LLMs face numerous vulnerabilities, including data poisoning, prompt injections, and unauthorized data exposure, which conventional methods have struggled to address fully. In parallel, blockchain technology, known for its data immutability and decentralized structure, offers a promising foundation for safeguarding LLMs. In this survey, we aim to comprehensively assess how to leverage blockchain technology to enhance LLMs' security and safety. In addition, we propose a new taxonomy of blockchain for large language models (BC4LLMs) to systematically categorize related works in this emerging field. Our analysis includes novel frameworks and definitions to delineate security and safety in the context of BC4LLMs, highlighting potential research directions and challenges at this intersection. Through this study, we aim to stimulate targeted advancements in blockchain-integrated LLM security.

This article proposes a method for mathematical modeling of human movements related to patient exercise episodes performed during physical therapy sessions by using artificial neural networks. The generative adversarial network structure is adopted, whereby a discriminative and a generative model are trained concurrently in an adversarial manner. Different network architectures are examined, with the discriminative and generative models structured as deep subnetworks of hidden layers comprised of convolutional or recurrent computational units. The models are validated on a data set of human movements recorded with an optical motion tracker. The results demonstrate an ability of the networks for classification of new instances of motions, and for generation of motion examples that resemble the recorded motion sequences.

19 Apr 2018

The use of unmanned aerial vehicles (UAVs) is growing rapidly across many civil application domains including real-time monitoring, providing wireless coverage, remote sensing, search and rescue, delivery of goods, security and surveillance, precision agriculture, and civil infrastructure inspection. Smart UAVs are the next big revolution in UAV technology promising to provide new opportunities in different applications, especially in civil infrastructure in terms of reduced risks and lower cost. Civil infrastructure is expected to dominate the more than $45 billion market value of UAV usage. In this survey, we present UAV civil applications and their challenges. We also discuss current research trends and provide future insights for potential UAV uses. Furthermore, we present the key challenges for UAV civil applications, including: charging challenges, collision avoidance and swarming challenges, and networking and security related challenges. Based on our review of the recent literature, we discuss open research challenges and draw high-level insights on how these challenges might be approached.

This study investigates the near-future impacts of generative artificial intelligence (AI) technologies on occupational competencies across the U.S. federal workforce. We develop a multi-stage Retrieval-Augmented Generation system to leverage large language models for predictive AI modeling that projects shifts in required competencies and to identify vulnerable occupations on a knowledge-by-skill-by-ability basis across the federal government workforce. This study highlights policy recommendations essential for workforce planning in the era of AI. We integrate several sources of detailed data on occupational requirements across the federal government from both centralized and decentralized human resource sources, including from the U.S. Office of Personnel Management (OPM) and various federal agencies. While our preliminary findings suggest some significant shifts in required competencies and potential vulnerability of certain roles to AI-driven changes, we provide nuanced insights that support arguments against abrupt or generic approaches to strategic human capital planning around the development of generative AI. The study aims to inform strategic workforce planning and policy development within federal agencies and demonstrates how this approach can be replicated across other large employment institutions and labor markets.

U-Net and its extensions have achieved great success in medical image segmentation. However, due to the inherent local characteristics of ordinary convolution operations, the U-Net encoder cannot effectively extract global context information. In addition, simple skip connections cannot capture salient features. In this work, we propose a fully convolutional segmentation network (CMU-Net) which incorporates hybrid convolutions and a multi-scale attention gate. The ConvMixer module extracts global context information by mixing features at distant spatial locations. Moreover, the multi-scale attention gate emphasizes valuable features and achieves efficient skip connections. We evaluate the proposed method using both breast ultrasound datasets and a thyroid ultrasound image dataset; CMU-Net achieves average Intersection over Union (IoU) values of 73.27% and 84.75%, and F1 scores of 84.81% and 91.71%. The code is available at https://github.com/FengheTan9/CMU-Net.
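As a reminder of what the two reported metrics measure, the sketch below computes IoU and F1 (equivalent to the Dice coefficient for binary masks) from a predicted and a ground-truth mask. It is an illustration only and is not taken from the CMU-Net repository; the function name `iou_and_f1`, the flat 0/1 list representation of a mask, and the convention of returning 1.0 for two empty masks are assumptions.

```python
def iou_and_f1(pred, target):
    """Return (IoU, F1) for two binary masks given as flat 0/1 sequences.

    Hypothetical helper for illustration; masks must have equal length.
    """
    tp = sum(1 for p, t in zip(pred, target) if p and t)      # true positives
    fp = sum(1 for p, t in zip(pred, target) if p and not t)  # false positives
    fn = sum(1 for p, t in zip(pred, target) if not p and t)  # false negatives
    union = tp + fp + fn
    if union == 0:
        # Both masks are empty; treating this as a perfect match is an
        # assumed convention, not something stated in the abstract.
        return 1.0, 1.0
    iou = tp / union
    f1 = 2 * tp / (2 * tp + fp + fn)
    return iou, f1

iou, f1 = iou_and_f1([1, 1, 0, 0], [1, 0, 1, 0])
```

For a single pair of masks the two quantities are tied by F1 = 2·IoU / (1 + IoU); averages taken over a dataset need not satisfy that identity exactly.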

24 Aug 2024

This work extends the Rose–Terao–Yuzvinsky (RTY) theorem by investigating the homological depth of gradient ideals for products of general forms of arbitrary degrees and classifying the RTY-property for arbitrary forms. It provides new matrix-theoretic proofs for generic hyperplane arrangements and offers explicit free resolutions and homological classifications for various classes of polynomials, including products of smooth quadrics.

26 Jan 2025

The generalization performance of deep neural networks (DNNs) is a critical factor in achieving robust model behavior on unseen data. Recent studies have highlighted the importance of sharpness-based measures in promoting generalization by encouraging convergence to flatter minima. Among these approaches, Sharpness-Aware Minimization (SAM) has emerged as an effective optimization technique for reducing the sharpness of the loss landscape, thereby improving generalization. However, SAM's computational overhead and sensitivity to noisy gradients limit its scalability and efficiency. To address these challenges, we propose Gradient-Centralized Sharpness-Aware Minimization (GCSAM), which incorporates Gradient Centralization (GC) to stabilize gradients and accelerate convergence. GCSAM normalizes gradients before the ascent step, reducing noise and variance, and improving stability during training. Our evaluations indicate that GCSAM consistently outperforms SAM and the Adam optimizer in terms of generalization and computational efficiency. These findings demonstrate GCSAM's effectiveness across diverse domains, including general and medical imaging tasks.
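The Gradient Centralization operation mentioned above is commonly described as subtracting the mean from each weight vector's gradient so that it has zero mean. A minimal sketch follows, assuming the gradient of a fully connected layer is stored as a list of rows with one row per output unit; the function name `centralize_gradient` is hypothetical, and how GCSAM combines this step with the SAM ascent step is not specified beyond what the abstract states.

```python
def centralize_gradient(grad):
    """Subtract each row's mean so every row of the gradient sums to zero.

    grad is a list of rows, one per output unit (an assumed layout).
    """
    centered = []
    for row in grad:
        mean = sum(row) / len(row)
        centered.append([g - mean for g in row])
    return centered

gc = centralize_gradient([[1.0, 2.0, 3.0], [4.0, 4.0, 4.0]])
```

In this toy case the first row becomes `[-1.0, 0.0, 1.0]`, and the second row, which was constant, becomes all zeros.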

Additive Manufacturing (AM) is a transformative manufacturing technology enabling direct fabrication of complex parts layer-by-layer from 3D modeling data. Among AM applications, the fabrication of Functionally Graded Materials (FGMs) has significant importance due to the potential to enhance component performance across several industries. FGMs are manufactured with a gradient composition transition between dissimilar materials, enabling the design of new materials with location-dependent mechanical and physical properties. This study presents a comprehensive review of published literature pertaining to the implementation of Machine Learning (ML) techniques in AM, with an emphasis on ML-based methods for optimizing FGM fabrication processes. Through an extensive survey of the literature, this review article explores the role of ML in addressing the inherent challenges in FGM fabrication and encompasses parameter optimization, defect detection, and real-time monitoring. The article also provides a discussion of future research directions and challenges in employing ML-based methods in AM fabrication of FGMs.

CNRS; California Institute of Technology; Charles University; Imperial College London; Cornell University; Yale University; NASA Goddard Space Flight Center; University of Maryland; Université Paris-Saclay; Stockholm University; University of Arizona; Sorbonne Université; Institut Polytechnique de Paris; MIT; Université d’Orléans; Utrecht University; University of Leicester; Johns Hopkins University Applied Physics Laboratory; Observatoire de Paris; Jet Propulsion Laboratory; Brigham Young University; University of Idaho; La Sapienza University of Rome; Southwest Research Institute; Free University Berlin; Aeolis Research; ENS; Université de Reims Champagne Ardenne; Institut de Physique du Globe de Paris; UVSQ Université Paris-Saclay; Aurora Technology B.V.; ESA, European Space Agency; Planetary Science Institute Colorado; Université PSL; Université de Nantes; Université de Paris; NASA, Ames Research Center; Aix-Marseille Université; Université Paris-Est; École Polytechnique; Université Bordeaux

In response to the ESA Voyage 2050 announcement of opportunity, we propose an ambitious L-class mission to explore one of the most exciting bodies in the Solar System, Saturn's largest moon Titan. Titan, a "world with two oceans", is an organic-rich body with interior-surface-atmosphere interactions that are comparable in complexity to the Earth. Titan is also one of the few places in the Solar System with habitability potential. Titan's remarkable nature was only partly revealed by the Cassini-Huygens mission and still holds mysteries requiring a complete exploration using a variety of vehicles and instruments. The proposed mission concept POSEIDON (Titan POlar Scout/orbitEr and In situ lake lander DrONe explorer) would perform joint orbital and in situ investigations of Titan. It is designed to build on and exceed the scope and scientific/technological accomplishments of Cassini-Huygens, exploring Titan in ways that were not previously possible, in particular through full close-up and in situ coverage over long periods of time. In the proposed mission architecture, POSEIDON consists of two major elements: a spacecraft with a large set of instruments that would orbit Titan, preferably in a low-eccentricity polar orbit, and a suite of in situ investigation components, i.e. a lake lander, a "heavy" drone (possibly amphibious) and/or a fleet of mini-drones, dedicated to the exploration of the polar regions. The ideal arrival time at Titan would be slightly before the next northern Spring equinox (2039), as equinoxes are the most active periods to monitor still largely unknown atmospheric and surface seasonal changes. The exploration of Titan's northern latitudes with an orbiter and in situ element(s) would be highly complementary with the upcoming NASA New Frontiers Dragonfly mission that will provide in situ exploration of Titan's equatorial regions in the mid-2030s.

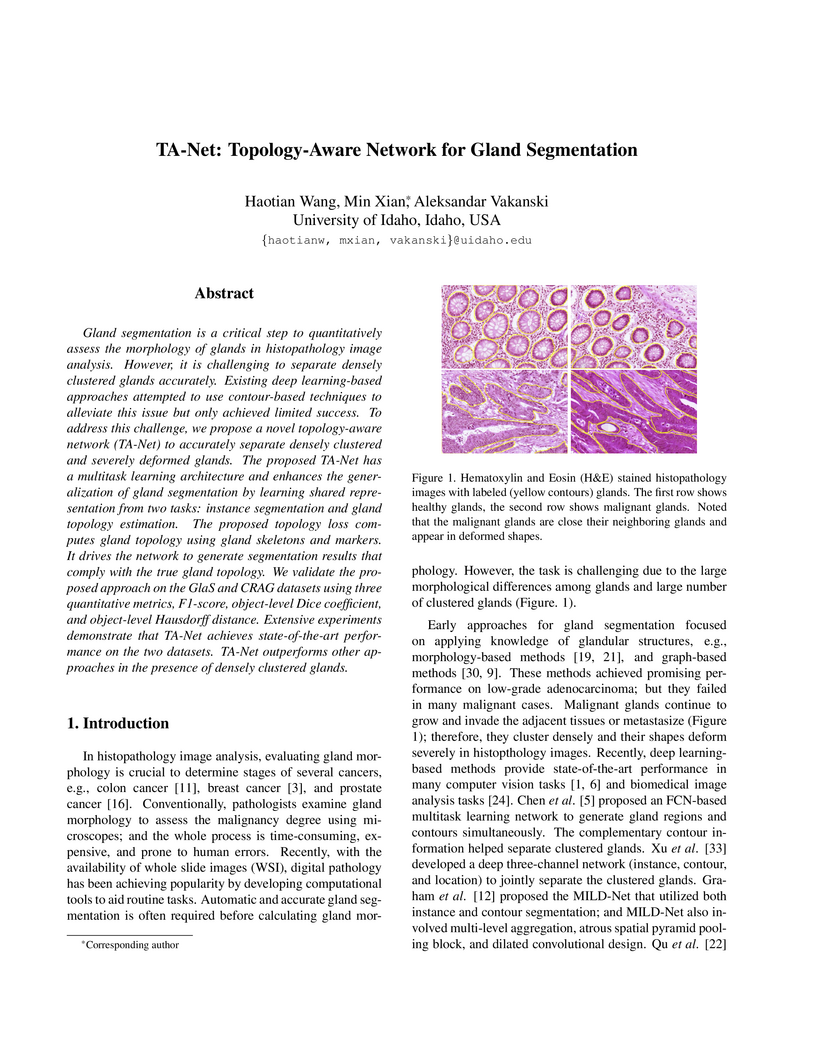

Gland segmentation is a critical step to quantitatively assess the morphology of glands in histopathology image analysis. However, it is challenging to separate densely clustered glands accurately. Existing deep learning-based approaches attempted to use contour-based techniques to alleviate this issue but only achieved limited success. To address this challenge, we propose a novel topology-aware network (TA-Net) to accurately separate densely clustered and severely deformed glands. The proposed TA-Net has a multitask learning architecture and enhances the generalization of gland segmentation by learning shared representation from two tasks: instance segmentation and gland topology estimation. The proposed topology loss computes gland topology using gland skeletons and markers. It drives the network to generate segmentation results that comply with the true gland topology. We validate the proposed approach on the GlaS and CRAG datasets using three quantitative metrics: F1-score, object-level Dice coefficient, and object-level Hausdorff distance. Extensive experiments demonstrate that TA-Net achieves state-of-the-art performance on the two datasets. TA-Net outperforms other approaches in the presence of densely clustered glands.
