University of Vermont

Monash University; Leipzig University; Northeastern University; Carnegie Mellon University; New York University; Stanford University; McGill University; University of British Columbia; CSIRO’s Data61; IBM Research; Columbia University; ScaDS.AI; Hugging Face; Johns Hopkins University; Weizmann Institute of Science; The Alan Turing Institute; Sea AI Lab; MIT; Queen Mary University of London; University of Vermont; SAP; ServiceNow; Israel Institute of Technology; Wellesley College; Eleuther AI; Roblox; Telefonica I+D; University of Notre Dame; Technical University of Munich; Discover Dollar Pvt Ltd; UnfoldML; Allahabad; Technion; Saama AI Research Lab; Toloka; Forschungszentrum J

StarCoder and StarCoderBase are large language models for code developed by the BigCode community. They demonstrate state-of-the-art performance among open-access models on Python code generation, achieving 33.6% pass@1 on HumanEval, and show strong multi-language capabilities, all while integrating responsible AI practices.
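For readers unfamiliar with the pass@1 figure quoted above, the following is a minimal sketch of the unbiased pass@k estimator commonly used with HumanEval-style benchmarks. The summary does not say how the 33.6% was computed, so the function names (`pass_at_k`, `mean_pass_at_k`) and the per-problem `(n, c)` input format are assumptions made for illustration only.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k for one problem from n samples, c of which passed.

    Uses the combinatorial form 1 - C(n - c, k) / C(n, k): the probability
    that a random size-k subset of the n samples contains a passing one.
    """
    if not (0 <= c <= n) or not (1 <= k <= n):
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Fewer than k failing samples: every size-k subset has a pass.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results, k: int) -> float:
    """Average pass@k over problems; results is a list of (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

With k = 1 the estimator reduces to the fraction of passing samples per problem, averaged over problems, which is how a single benchmark-level percentage is usually obtained.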

IBM Research developed a framework enabling Large Language Models (LLMs) to detect and manage contextually inappropriate sensitive information that users inadvertently disclose during interactions. This real-time, user-facing system provides reformulation guidance, achieving high privacy gains while preserving query utility.

Scenario reduction (SR) alleviates the computational complexity of scenario-based stochastic optimization with conditional value-at-risk (SBSO-CVaR) by identifying representative scenarios to depict the underlying uncertainty and tail risks. Existing distribution-driven SR methods emphasize statistical similarity but often exclude extreme scenarios, leading to weak tail-risk awareness and insufficient problem-specific representativeness. Instead, this paper proposes an iterative problem-driven scenario reduction framework. Specifically, we integrate the SBSO-CVaR problem structure into the SR process and project the original scenario set from the distribution space onto the problem space. Subsequently, to minimize the SR optimality gap with acceptable computational complexity, we propose a tractable iterative problem-driven scenario reduction (IPDSR) method that selects the representative scenarios that best approximate the optimality distribution of the original scenario set while preserving tail risks. Furthermore, the iteration process is formulated as a mixed-integer program to enable scenario partitioning and representative scenario selection. In addition, ex-post problem-driven evaluation indices are proposed to evaluate SR performance. Numerical experiments show that IPDSR significantly outperforms existing SR methods, achieving an optimality gap of less than 1% within an acceptable computation time.
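To make the tail-risk point concrete, here is a small sketch of the empirical CVaR of a discrete scenario set, using the standard Rockafellar-Uryasev form evaluated at the value-at-risk. It is not the IPDSR method from the abstract; the function name `cvar` and the toy loss values are assumptions chosen only to show how dropping one extreme scenario changes the risk measure.

```python
def cvar(losses, probs, alpha):
    """Empirical CVaR at level alpha for discrete scenarios.

    losses[i] occurs with probability probs[i] (probs sum to 1).
    CVaR = VaR + E[max(loss - VaR, 0)] / (1 - alpha).
    """
    order = sorted(range(len(losses)), key=lambda i: losses[i])
    cumulative = 0.0
    var = losses[order[-1]]
    for i in order:
        cumulative += probs[i]
        if cumulative >= alpha - 1e-12:  # small tolerance for float sums
            var = losses[i]  # smallest loss whose CDF reaches alpha
            break
    excess = sum(p * max(l - var, 0.0) for l, p in zip(losses, probs))
    return var + excess / (1.0 - alpha)


# Toy scenario set (hypothetical numbers): one extreme loss of 10.
full = cvar([1, 2, 3, 4, 10], [0.2] * 5, alpha=0.8)
# Same set with the extreme scenario dropped and probabilities renormalized.
reduced = cvar([1, 2, 3, 4], [0.25] * 4, alpha=0.8)
```

In this toy case the reduced set reports a much lower CVaR than the full set, which is the kind of weakened tail-risk awareness the abstract attributes to reductions that exclude extreme scenarios.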

12 Sep 2025

Human language is one of the most expressive tools for conveying intent, yet most artificial or biological systems lack mechanisms to interpret or respond meaningfully to it. Bridging this gap could enable more natural forms of control over complex, decentralized systems. In AI and artificial life, recent work explores how language can specify high-level goals, but most systems still depend on engineered rewards, task-specific supervision, or rigid command sets, limiting generalization to novel instructions. Similar constraints apply in synthetic biology and bioengineering, where the locus of control is often genomic rather than environmental perturbation.

A key open question is whether artificial or biological collectives can be guided by free-form natural language alone, without task-specific tuning or carefully designed evaluation metrics. We provide one possible answer here by showing, for the first time, that simple agents' collective behavior can be guided by free-form language prompts: one AI model transforms an imperative prompt into an intervention that is applied to simulated cells; a second AI model scores how well the prompt describes the resulting cellular dynamics; and the former AI model is evolved to improve the scores generated by the latter.

Unlike previous work, our method does not require engineered fitness functions or domain-specific prompt design. We show that the evolved system generalizes to unseen prompts without retraining. By treating natural language as a control layer, the system suggests a future in which spoken or written prompts could direct computational, robotic, or biological systems to desired behaviors. This work provides a concrete step toward this vision of AI-biology partnerships, in which language replaces mathematical objective functions, fixed rules, and domain-specific programming.

30 Aug 2019

The increasing pace of data collection has led to increasing awareness of privacy risks, resulting in new data privacy regulations like the General Data Protection Regulation (GDPR). Such regulations are an important step, but automatic compliance checking is challenging. In this work, we present a new paradigm, Data Capsule, for automatic compliance checking of data privacy regulations in heterogeneous data processing infrastructures. Our key insight is to pair up a data subject's data with a policy governing how the data is processed. Specified in our formal policy language, PrivPolicy, the policy is created and provided by the data subject alongside the data, and is associated with the data throughout the life-cycle of data processing (e.g., data transformation by data processing systems, data aggregation of multiple data subjects' data). We introduce a solution for static enforcement of privacy policies based on the concept of residual policies, and present a novel algorithm based on abstract interpretation for deriving residual policies in PrivPolicy. Our solution ensures compliance automatically, and is designed for deployment alongside existing infrastructure. We also design and develop PrivGuard, a reference data capsule manager that implements all the functionalities of the Data Capsule paradigm.

Continual lifelong learning requires an agent or model to learn many sequentially ordered tasks, building on previous knowledge without catastrophically forgetting it. Much work has gone towards preventing the default tendency of machine learning models to catastrophically forget, yet virtually all such work involves manually designed solutions to the problem. We instead advocate meta-learning a solution to catastrophic forgetting, allowing AI to learn to continually learn. Inspired by neuromodulatory processes in the brain, we propose A Neuromodulated Meta-Learning Algorithm (ANML). It differentiates through a sequential learning process to meta-learn an activation-gating function that enables context-dependent selective activation within a deep neural network. Specifically, a neuromodulatory (NM) neural network gates the forward pass of another (otherwise normal) neural network called the prediction learning network (PLN). The NM network thus also indirectly controls selective plasticity (i.e., the backward pass) of the PLN. ANML enables continual learning without catastrophic forgetting at scale: it produces state-of-the-art continual learning performance, sequentially learning as many as 600 classes (over 9,000 SGD updates).
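The gating idea described above can be sketched in a few lines. This is a simplified illustration only, assuming single dense layers, a sigmoid gate, and hypothetical names (`gated_forward`, `nm_w`, `pln_hidden_w`, `pln_out_w`); the actual ANML architecture and its meta-learning outer loop are not specified in the abstract and are not reproduced here.

```python
import math


def linear(weights, x):
    """Dense layer without bias: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def relu(v):
    return [max(0.0, a) for a in v]


def sigmoid(v):
    return [1.0 / (1.0 + math.exp(-a)) for a in v]


def gated_forward(x, nm_w, pln_hidden_w, pln_out_w):
    """Forward pass in which an NM network gates the PLN's hidden units."""
    gate = sigmoid(linear(nm_w, x))            # per-unit gate in (0, 1)
    hidden = relu(linear(pln_hidden_w, x))     # ordinary PLN activations
    gated = [g * h for g, h in zip(gate, hidden)]
    # The gate multiplies the activations, so gradients flowing back into a
    # hidden unit are scaled by the same gate value: units that are gated
    # off in the forward pass also receive (almost) no weight update.
    return linear(pln_out_w, gated), gate, gated


# Hypothetical weights: the NM network closes unit 0 and opens unit 1.
out, gate, gated = gated_forward(
    x=[1.0, 2.0],
    nm_w=[[-100.0, 0.0], [100.0, 0.0]],
    pln_hidden_w=[[1.0, 1.0], [1.0, 1.0]],
    pln_out_w=[[1.0, 1.0]],
)
```

The multiplicative form is what links selective activation to selective plasticity: the same gate value appears as a factor in the backward pass through each hidden unit.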

In this paper, we present a high-performing solution to the UAVM 2025 Challenge, which focuses on matching narrow-FOV street-level images to corresponding satellite imagery using the University-1652 dataset. As panoramic Cross-View Geo-Localisation nears peak performance, it becomes increasingly important to explore more practical problem formulations. Real-world scenarios rarely offer panoramic street-level queries; instead, queries typically consist of limited-FOV images captured with unknown camera parameters. Our work prioritises discovering the highest achievable performance under these constraints, pushing the limits of existing architectures. Our method begins by retrieving candidate satellite image embeddings for a given query, followed by a re-ranking stage that selectively enhances retrieval accuracy within the top candidates. This two-stage approach enables more precise matching, even under the significant viewpoint and scale variations inherent in the task. Through experimentation, we demonstrate that our approach achieves competitive results, specifically attaining R@1 and R@10 retrieval rates of \topone\% and \topten\% respectively. This underscores the potential of optimised retrieval and re-ranking strategies in advancing practical geo-localisation performance. Code is available at this https URL.
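The retrieve-then-re-rank pipeline and the R@k metric mentioned above can be sketched as follows. The abstract does not describe the embedding model or the re-ranking scorer, so cosine similarity for the first stage, the pluggable `score_fn` for the second stage, and all function names here are assumptions for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def retrieve(query, gallery, k):
    """Stage 1: indices of the k gallery embeddings most similar to the query."""
    ranked = sorted(range(len(gallery)), key=lambda i: cosine(query, gallery[i]), reverse=True)
    return ranked[:k]


def rerank(query, gallery, candidates, score_fn):
    """Stage 2: reorder only the shortlisted candidates with a second scorer."""
    return sorted(candidates, key=lambda i: score_fn(query, gallery[i]), reverse=True)


def recall_at_k(rankings, truths, k):
    """Fraction of queries whose true gallery index appears in the top k."""
    hits = sum(1 for ranking, truth in zip(rankings, truths) if truth in ranking[:k])
    return hits / len(truths)


# Toy 2-D embeddings (hypothetical values).
gallery = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
query = [1.0, 0.05]
shortlist = retrieve(query, gallery, k=2)
# Hypothetical second-stage scorer: negative Euclidean distance.
final = rerank(query, gallery, shortlist, lambda q, g: -math.dist(q, g))
```

Because the second stage only reorders the shortlist, it can improve R@1 but cannot recover a true match that the first stage failed to retrieve, which is why the shortlist size matters.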

Existing multi-label ranking (MLR) frameworks only exploit information deduced from the bipartition of labels into positive and negative sets. Therefore, they do not benefit from ranking among positive labels, which is the novel MLR approach we introduce in this paper. We propose UniMLR, a new MLR paradigm that models implicit class relevance/significance values as probability distributions using the ranking among positive labels, rather than treating them as equally important. This approach unifies ranking and classification tasks associated with MLR. Additionally, we address the challenges of scarcity and annotation bias in MLR datasets by introducing eight synthetic datasets (Ranked MNISTs) generated with varying significance-determining factors, providing an enriched and controllable experimental environment. We statistically demonstrate that our method accurately learns a representation of the positive rank order, which is consistent with the ground truth and proportional to the underlying significance values. Finally, we conduct comprehensive empirical experiments on both real-world and synthetic datasets, demonstrating the value of our proposed framework.

Spreading models capture key dynamics on networks, such as cascading failures in economic systems, (mis)information diffusion, and pathogen transmission. Here, we focus on design intervention problems -- for example, designing optimal vaccination rollouts or wastewater surveillance systems -- which can be solved by comparing outcomes under various counterfactuals. A leading approach to computing these outcomes is message passing, which allows for the rapid and direct computation of the marginal probabilities for each node. However, despite its efficiency, classical message passing tends to overestimate outbreak sizes on real-world networks, leading to incorrect predictions and, thus, interventions. Here, we improve these estimates by using the neighborhood message passing (NMP) framework for the epidemiological calculations. We evaluate the quality of the improved algorithm and demonstrate how it can be used to test possible solutions to three intervention design problems: influence maximization, optimal vaccination, and sentinel surveillance.
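As background for the comparison above, the following is a minimal sketch of classical (not neighborhood) message passing in its bond-percolation form, where each edge transmits independently with probability `p`. The function name, the uniform `p`, and the adjacency-dictionary input are assumptions for illustration; the NMP corrections discussed in the abstract are not implemented here.

```python
def message_passing(adj, p, iters=200, tol=1e-10):
    """Classical message passing for bond percolation on an undirected graph.

    adj maps each node to a list of its neighbors. u[(i, j)] is the
    probability that neighbor j does NOT connect i to the large cluster:
        u[(i, j)] = 1 - p + p * prod(u[(j, k)] for k in adj[j] if k != i)
    Returns, per node, 1 - prod over neighbors of u[(i, j)].
    """
    u = {(i, j): 0.5 for i in adj for j in adj[i]}
    if not u:
        return {i: 0.0 for i in adj}
    for _ in range(iters):
        new = {}
        for (i, j) in u:
            prod = 1.0
            for k in adj[j]:
                if k != i:
                    prod *= u[(j, k)]
            new[(i, j)] = 1.0 - p + p * prod
        delta = max(abs(new[e] - u[e]) for e in u)
        u = new
        if delta < tol:
            break
    marginals = {}
    for i in adj:
        prod = 1.0
        for j in adj[i]:
            prod *= u[(i, j)]
        marginals[i] = 1.0 - prod
    return marginals


# Toy graphs: a complete graph on four nodes and a three-node path.
k4 = {i: [j for j in range(4) if j != i] for i in range(4)}
path = {0: [1], 1: [0, 2], 2: [1]}
```

The update treats the neighbors of a node as independent, which is exact on trees but ignores short loops; that locally tree-like assumption is what neighborhood-based variants relax. A counterfactual such as a vaccination can be compared, under these assumptions, by removing nodes from `adj` and rerunning the function.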

01 Oct 2024

Generative AI tools are used to create art-like outputs and sometimes aid in the creative process. These tools have potential benefits for artists, but they also have the potential to harm the art workforce and infringe upon artistic and intellectual property rights. Without explicit consent from artists, Generative AI creators scrape artists' digital work to train Generative AI models and produce art-like outputs at scale. These outputs are now being used to compete with human artists in the marketplace as well as being used by some artists in their generative processes to create art. We surveyed 459 artists to investigate the tension between artists' opinions on Generative AI art's potential utility and harm. This study surveys artists' opinions on the utility and threat of Generative AI art models, fair practices in the disclosure of artistic works in AI art training models, ownership and rights of AI art derivatives, and fair compensation. Results show that a majority of artists believe creators should disclose what art is being used in AI training, that AI outputs should not belong to model creators, and express concerns about AI's impact on the art workforce and who profits from their art. We hope the results of this work will further meaningful collaboration and alignment between the art community and Generative AI researchers and developers.

Aerial imagery analysis is critical for many research fields. However, obtaining frequent high-quality aerial images is not always feasible because of the effort and cost required. One solution is to use the Ground-to-Aerial (G2A) technique to synthesize aerial images from easily collectible ground images. However, G2A is rarely studied because of its challenges, including but not limited to drastic view changes, occlusion, and range of visibility. In this paper, we present a novel Geometric Preserving Ground-to-Aerial (G2A) image synthesis (GPG2A) model that can generate realistic aerial images from ground images. GPG2A consists of two stages. The first stage predicts the Bird's Eye View (BEV) segmentation (referred to as the BEV layout map) from the ground image. The second stage synthesizes the aerial image from the predicted BEV layout map and text descriptions of the ground image. To train our model, we present a new multi-modal cross-view dataset, VIGORv2, which is built upon VIGOR with newly collected aerial images, maps, and text descriptions. Our extensive experiments illustrate that GPG2A synthesizes better geometry-preserved aerial images than existing models. We also present two applications, data augmentation for cross-view geo-localization and sketch-based region search, to further verify the effectiveness of GPG2A. The code and data will be publicly available.

Large-scale networks have been instrumental in shaping the way that we think about how individuals interact with one another, developing key insights in mathematical epidemiology, computational social science, and biology. However, many of the underlying social systems through which diseases spread, information disseminates, and individuals interact are inherently mediated through groups of arbitrary size, known as higher-order interactions. There is a gap between higher-order dynamics of group formation and fragmentation, contagion spread, and social influence and the data necessary to validate these higher-order mechanisms. Similarly, few datasets bridge the gap between these pairwise and higher-order network data. Because of its open API, the Bluesky social media platform provides a laboratory for observing social ties at scale. In addition to pairwise following relationships, unlike many other social networks, Bluesky features user-curated lists known as "starter packs" as a mechanism for social network growth. We introduce "A Blue Start", a large-scale network dataset comprising 26.7M users and their 1.6B pairwise following relationships and 301.3K groups representing starter packs. This dataset will be an essential resource for the study of higher-order network science.

Over the last million years, human language has emerged and evolved as a fundamental instrument of social communication and semiotic representation. People use language in part to convey emotional information, leading to the central and contingent questions: (1) What is the emotional spectrum of natural language? and (2) Are natural languages neutrally, positively, or negatively biased? Here, we report that the human-perceived positivity of over 10,000 of the most frequently used English words exhibits a clear positive bias. More deeply, we characterize and quantify distributions of word positivity for four large and distinct corpora, demonstrating that their form is broadly invariant with respect to frequency of word use.

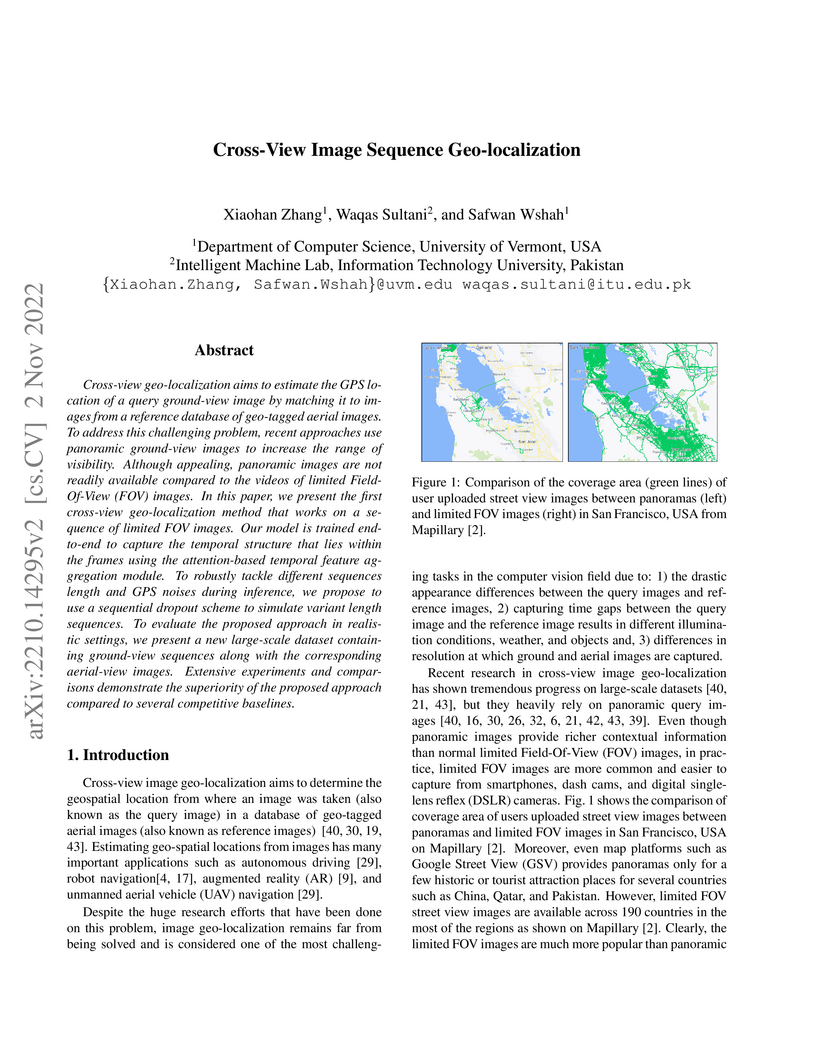

A method for cross-view geo-localization utilizes sequences of limited field-of-view ground images by introducing a transformer-based temporal feature aggregation module. The approach, also featuring sequential dropout for variable sequence length robustness, demonstrated improved retrieval accuracy (R@1 up to 2.07% with ResNet50) compared to adapted baselines and includes a newly collected large-scale dataset.

25 Aug 2025

University of Cambridge; University of California, Santa Barbara; New York University; Stanford University; University of Houston; University of Colorado Boulder; New Jersey Institute of Technology; University of Bath; University of Vermont; Carleton College; Middlebury College; Hamline University; Harvey Mudd College; Universidad Nacional Autonoma de Mexico; Denison University

Mathematical models of complex social systems can enrich social scientific theory, inform interventions, and shape policy. From voting behavior to economic inequality and urban development, such models influence decisions that affect millions of lives. Thus, it is especially important to formulate and present them with transparency, reproducibility, and humility. Modeling in social domains, however, is often uniquely challenging. Unlike in physics or engineering, researchers often lack controlled experiments or abundant, clean data. Observational data is sparse, noisy, partial, and missing in systematic ways. In such an environment, how can we build models that can inform science and decision-making in transparent and responsible ways?

The concept of geo-localization refers to the process of determining where on earth some 'entity' is located, typically using Global Positioning System (GPS) coordinates. The entity of interest may be an image, a sequence of images, a video, a satellite image, or even objects visible within the image. As massive datasets of GPS-tagged media have rapidly become available due to smartphones and the internet, and deep learning has risen to enhance the performance capabilities of machine learning models, the fields of visual and object geo-localization have emerged due to their significant impact on a wide range of applications such as augmented reality, robotics, self-driving vehicles, road maintenance, and 3D reconstruction. This paper provides a comprehensive survey of geo-localization involving images, which involves either determining from where an image has been captured (image geo-localization) or geo-locating objects within an image (object geo-localization). We will provide an in-depth study, including a summary of popular algorithms, a description of proposed datasets, and an analysis of performance results to illustrate the current state of each field.

Hydrological storm events are a primary driver for transporting water quality constituents such as turbidity, suspended sediments and nutrients. Analyzing the concentration (C) of these water quality constituents in response to increased streamflow discharge (Q), particularly when monitored at high temporal resolution during a hydrological event, helps to characterize the dynamics and flux of such constituents. A conventional approach to storm event analysis is to reduce the C-Q time series to two-dimensional (2-D) hysteresis loops and analyze these 2-D patterns. While effective and informative to some extent, this hysteresis loop approach has limitations because projecting the C-Q time series onto a 2-D plane obscures detail (e.g., temporal variation) associated with the C-Q relationships. In this paper, we address this issue using a multivariate time series clustering approach. Clustering is applied to sequences of river discharge and suspended sediment data (acquired through turbidity-based monitoring) from six watersheds located in the Lake Champlain Basin in the northeastern United States. While clusters of the hydrological storm events using the multivariate time series approach were found to be correlated to 2-D hysteresis loop classifications and watershed locations, the clusters differed from the 2-D hysteresis classifications. Additionally, using available meteorological data associated with storm events, we examine the characteristics of computational clusters of storm events in the study watersheds and identify the features driving the clustering approach.

10 Nov 2024

We use multi-regional input-output analysis to calculate the paid labour, energy, emissions, and material use required to provide basic needs for all people. We calculate two different low-consumption scenarios, using the UK as a case study: (1) a "decent living" scenario, which includes only the bare necessities, and (2) a "good life" scenario, which is based on the minimum living standards demanded by UK residents. We compare the resulting footprints to the current footprint of the UK, and to the footprints of the US, China, India, and a global average. Labour footprints are disaggregated by sector, skill level, and region of origin.

We find that both low-consumption scenarios would still require an unsustainable amount of labour and resources at the global scale. The decent living scenario would require a 26-hour working week, and on a per capita basis, 89 GJ of energy use, 5.9 tonnes of emissions, and 5.7 tonnes of used materials per year. The more socially sustainable good life scenario would require a 53-hour working week, 165 GJ of energy use, 9.9 tonnes of emissions, and 11.5 tonnes of used materials per capita. Both scenarios represent substantial reductions from the UK's current labour footprint of 68 hours per week, which the UK is only able to sustain by importing a substantial portion of its labour from other countries. We conclude that reducing consumption to the level of basic needs is not enough to achieve either social or environmental sustainability. Dramatic improvements in provisioning systems are also required.

12 Sep 2019

Molecular vibrations play a critical role in the charge transport properties of weakly van der Waals bonded organic semiconductors. To understand which specific phonon modes contribute most strongly to the electron-phonon coupling and ensuing thermal energetic disorder in some of the most widely studied high-mobility molecular semiconductors, state-of-the-art quantum mechanical simulations of the vibrational modes and the ensuing electron-phonon coupling constants are combined with experimental measurements of the low-frequency vibrations using inelastic neutron scattering and terahertz time-domain spectroscopy. In this way, the long-axis sliding motion is identified as a killer phonon mode, which in some molecules contributes more than 80% to the total thermal disorder. Based on this insight, a way to rationalize mobility trends between different materials and derive important molecular design guidelines for new high-mobility molecular semiconductors is suggested.

The applicability of computational models to the biological world is an active topic of debate. We argue that a useful path forward results from abandoning hard boundaries between categories and adopting an observer-dependent, pragmatic view. Such a view dissolves the contingent dichotomies driven by human cognitive biases (e.g., tendency to oversimplify) and prior technological limitations in favor of a more continuous, gradualist view necessitated by the study of evolution, developmental biology, and intelligent machines. Efforts to re-shape living systems for biomedical or bioengineering purposes require prediction and control of their function at multiple scales. This is challenging for many reasons, one of which is that living systems perform multiple functions in the same place at the same time. We refer to this as "polycomputing" - the ability of the same substrate to simultaneously compute different things. This ability is an important way in which living things are a kind of computer, but not the familiar, linear, deterministic kind; rather, living things are computers in the broad sense of computational materials as reported in the rapidly-growing physical computing literature. We argue that an observer-centered framework for the computations performed by evolved and designed systems will improve the understanding of meso-scale events, as it has already done at quantum and relativistic scales. Here, we review examples of biological and technological polycomputing, and develop the idea that overloading of different functions on the same hardware is an important design principle that helps understand and build both evolved and designed systems. Learning to hack existing polycomputing substrates, as well as evolve and design new ones, will have massive impacts on regenerative medicine, robotics, and computer engineering.