Huawei Cloud

06 Dec 2025

Monash UniversityCSIRO

Monash UniversityCSIRO Chinese Academy of SciencesSichuan University

Chinese Academy of SciencesSichuan University University of Manchester

University of Manchester Beihang University

Beihang University Nanjing University

Nanjing University Zhejiang University

Zhejiang University ByteDanceShanghai AI LabHarbin Institute of Technology

ByteDanceShanghai AI LabHarbin Institute of Technology Beijing Jiaotong University

Beijing Jiaotong University Huawei

Huawei Nanyang Technological UniversityNTUBeijing University of Posts and TelecommunicationsUniversity of Sheffield

Nanyang Technological UniversityNTUBeijing University of Posts and TelecommunicationsUniversity of Sheffield TencentAlibabaHuawei CloudStepFunTeleAIOPPOHong Kong University of Science and Technology (Guangzhou)KuaiShouM-A-PChinese Academy of Sciences, Institute of AutomationUOM

TencentAlibabaHuawei CloudStepFunTeleAIOPPOHong Kong University of Science and Technology (Guangzhou)KuaiShouM-A-PChinese Academy of Sciences, Institute of AutomationUOMA comprehensive synthesis of Large Language Models for automated software development covers the entire model lifecycle, from data curation to autonomous agents, and offers practical guidance derived from empirical experiments on pre-training, fine-tuning, and reinforcement learning, alongside a detailed analysis of challenges and future directions.

Huawei Technologies and Huawei Cloud researchers introduced Ego3D-Bench, a new benchmark for evaluating Vision-Language Models on ego-centric multi-view 3D spatial reasoning in dynamic outdoor scenes. They also developed Ego3D-VLM, a post-training framework that enhances VLM spatial understanding by generating a textual cognitive map from estimated 3D coordinates.

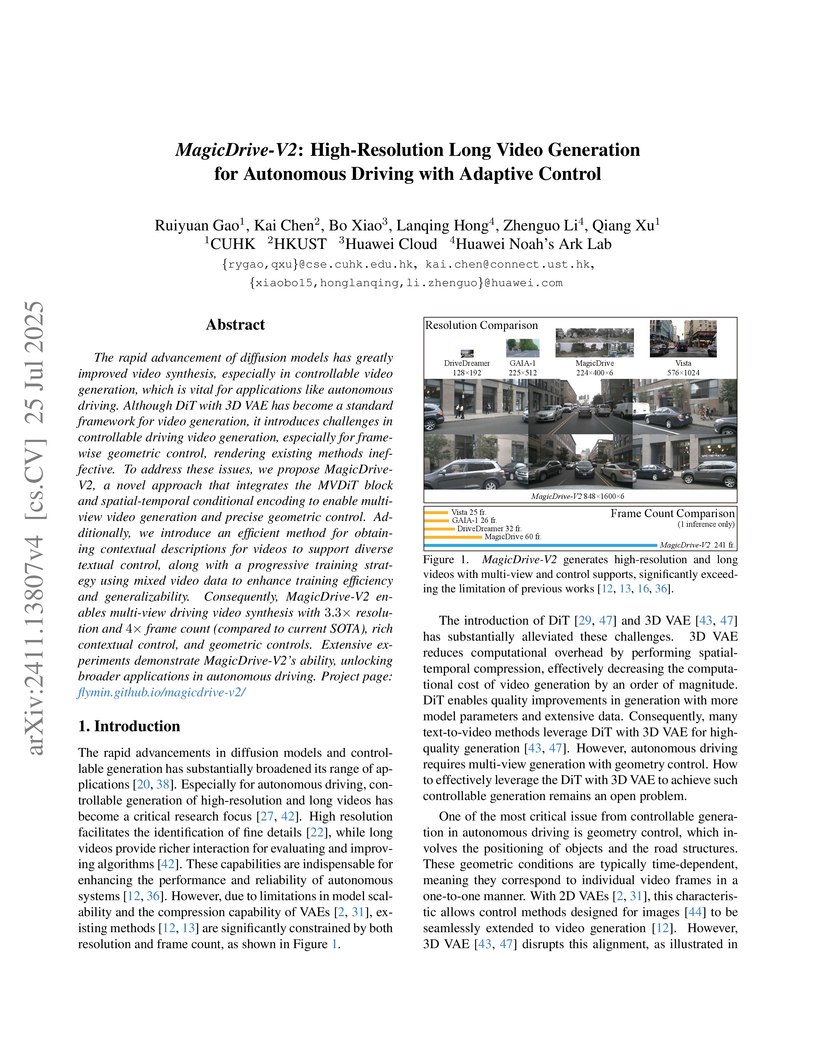

MagicDrive-V2, developed by researchers from CUHK, HKUST, and Huawei Noah's Ark Lab, generates high-resolution, long, multi-view street-view videos with precise adaptive control for autonomous driving applications. It leverages a DiT and 3D VAE with novel spatial-temporal conditional encoding, achieving 3.3x resolution and 4x frame count over prior methods while demonstrating superior realism and control fidelity.

This research provides an extensive investigation into the effectiveness of different visual encoders and multi-level features for Multi-modal Large Language Models (MLLMs). The paper introduces COMM, a model that fuses multi-level features from both CLIP and DINOv2, which leads to enhanced fine-grained perception, improved grounding abilities, and reduced object hallucination across various vision-language tasks.

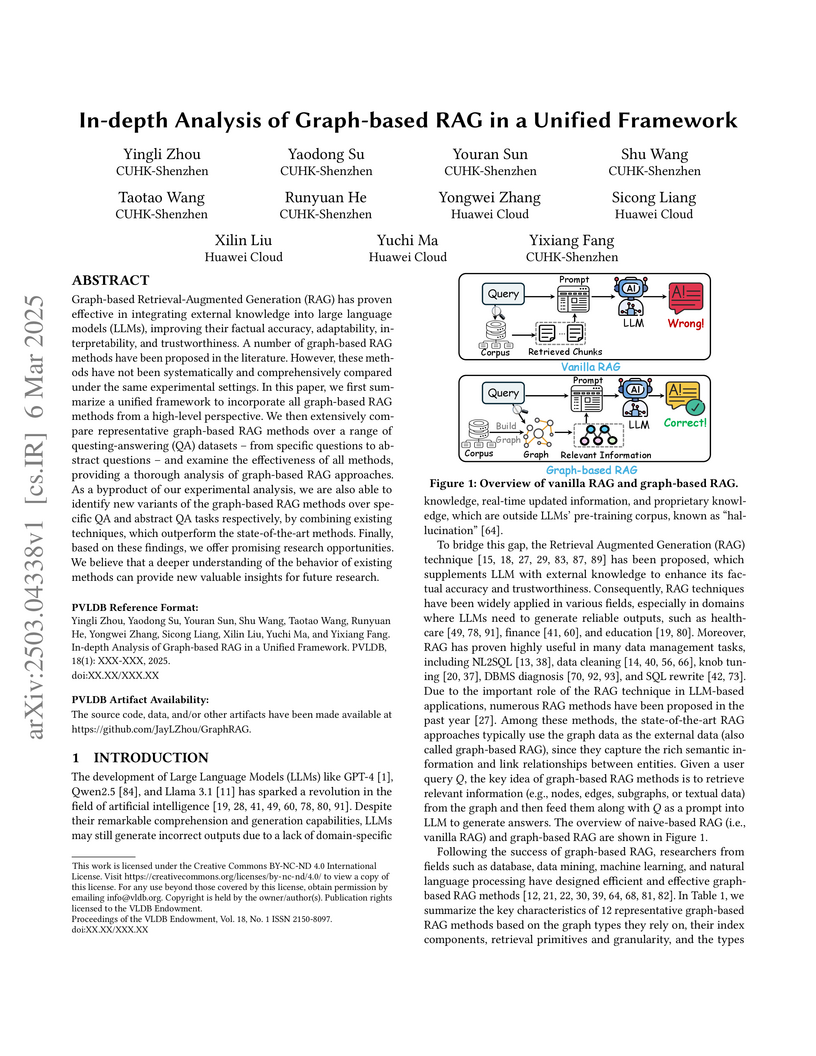

Researchers from CUHK-Shenzhen and Huawei Cloud present the first comprehensive analysis of graph-based RAG methods through a novel unified framework, conducting extensive experiments across 12 representative approaches while introducing two new variants (VGraphRAG and CheapRAG) that achieve state-of-the-art performance with improved efficiency.

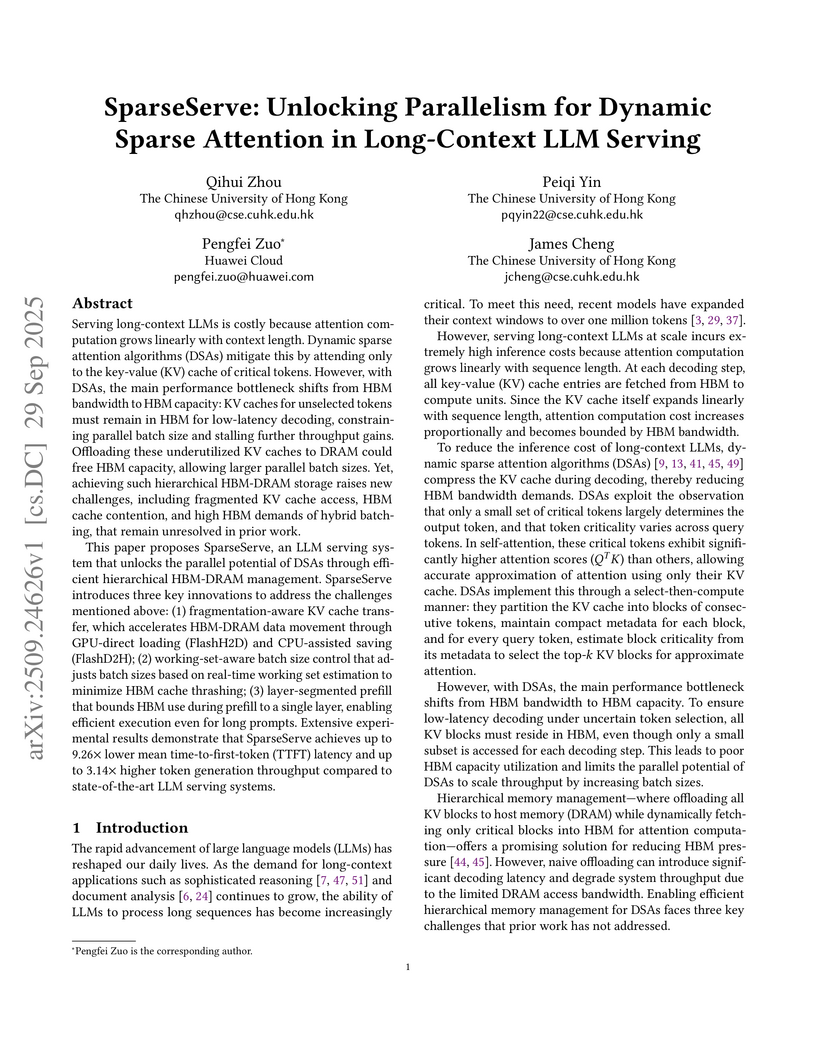

Serving long-context LLMs is costly because attention computation grows linearly with context length. Dynamic sparse attention algorithms (DSAs) mitigate this by attending only to the key-value (KV) cache of critical tokens. However, with DSAs, the main performance bottleneck shifts from HBM bandwidth to HBM capacity: KV caches for unselected tokens must remain in HBM for low-latency decoding, constraining parallel batch size and stalling further throughput gains. Offloading these underutilized KV caches to DRAM could free HBM capacity, allowing larger parallel batch sizes. Yet, achieving such hierarchical HBM-DRAM storage raises new challenges, including fragmented KV cache access, HBM cache contention, and high HBM demands of hybrid batching, that remain unresolved in prior work.

This paper proposes SparseServe, an LLM serving system that unlocks the parallel potential of DSAs through efficient hierarchical HBM-DRAM management. SparseServe introduces three key innovations to address the challenges mentioned above: (1) fragmentation-aware KV cache transfer, which accelerates HBM-DRAM data movement through GPU-direct loading (FlashH2D) and CPU-assisted saving (FlashD2H); (2) working-set-aware batch size control that adjusts batch sizes based on real-time working set estimation to minimize HBM cache thrashing; (3) layer-segmented prefill that bounds HBM use during prefill to a single layer, enabling efficient execution even for long prompts. Extensive experimental results demonstrate that SparseServe achieves up to 9.26x lower mean time-to-first-token (TTFT) latency and up to 3.14x higher token generation throughput compared to state-of-the-art LLM serving systems.

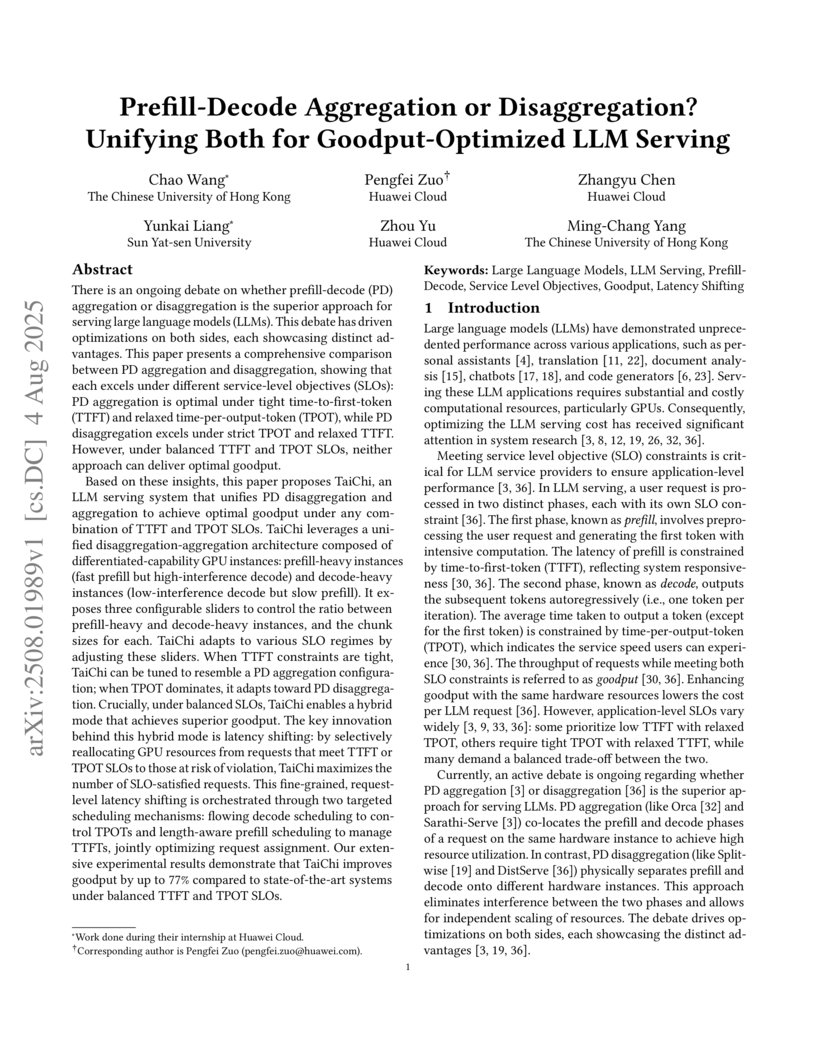

Researchers from The Chinese University of Hong Kong and Huawei Cloud developed TaiChi, an LLM serving system that unifies prefill-decode aggregation and disaggregation via a latency-shifting scheduling paradigm. TaiChi consistently delivered 9-47% higher goodput than aggregation and 29-77% higher than disaggregation, optimizing performance across a broad spectrum of Time-To-First-Token and Time-Per-Output-Token SLOs.

A comprehensive survey categorizes and analyzes LLM inference serving optimization techniques across instance-level, cluster-level, and emerging scenarios, providing a structured taxonomy of methods for improving deployment efficiency while highlighting key challenges and future research directions in areas like KV cache management, load balancing, and long-context processing.

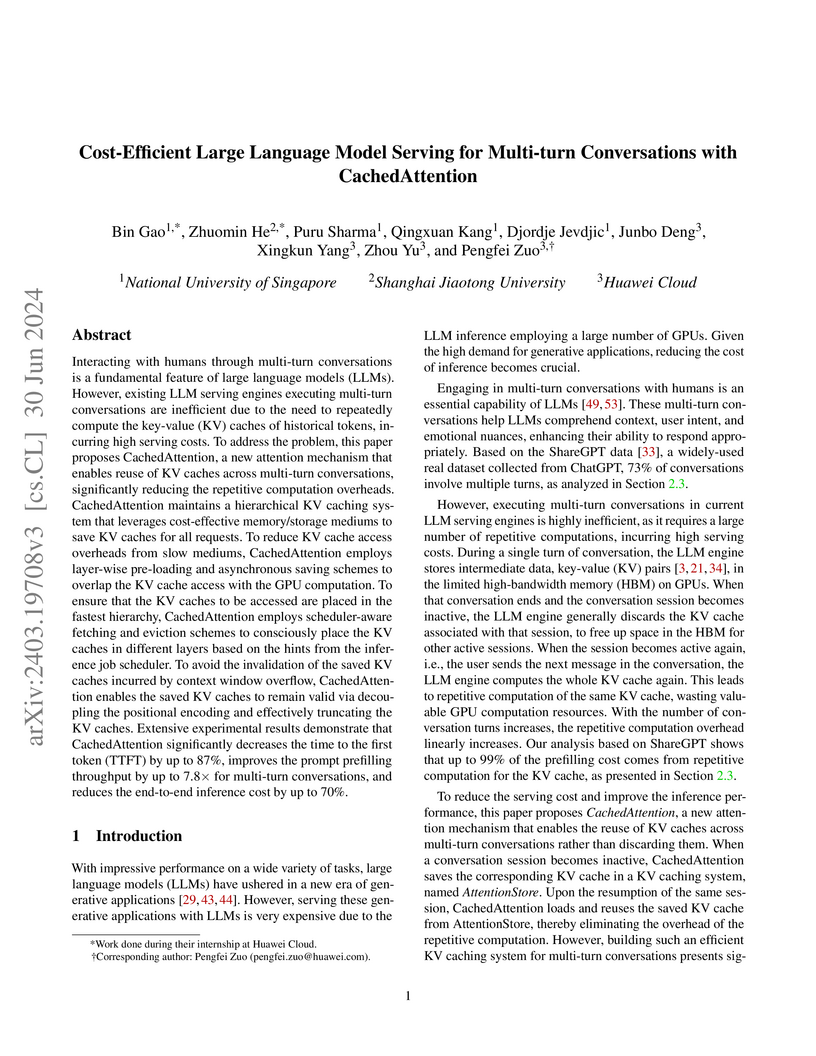

CachedAttention introduces a hierarchical caching system and a positional encoding decoupled truncation scheme to efficiently reuse Key-Value (KV) caches in multi-turn Large Language Model (LLM) conversations, reducing Time to First Token by up to 87% and inference costs by up to 70%. It achieves high cache hit rates (up to 90%) by proactively managing KV caches across HBM, host memory, and disks.

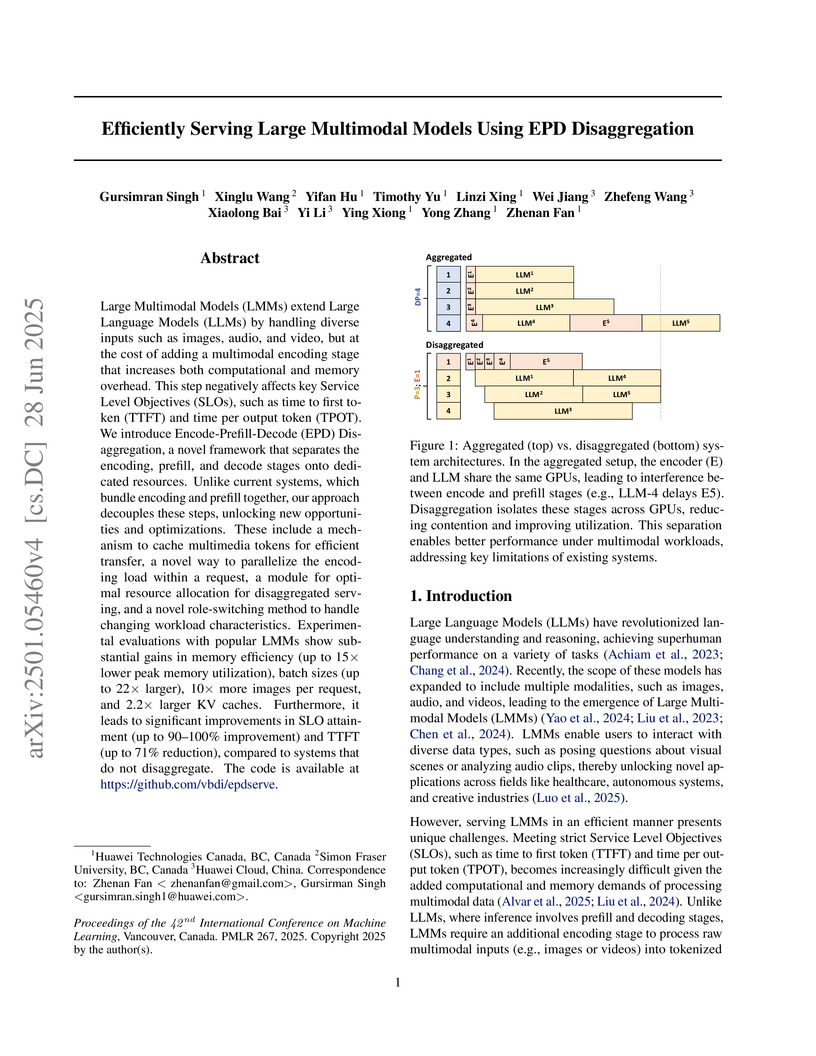

Researchers from Huawei Technologies Canada and Simon Fraser University developed Encode-Prefill-Decode (EPD) Disaggregation, a framework for serving Large Multimodal Models (LMMs) by separating the encoding, prefill, and decode stages onto dedicated resources. This approach improves resource utilization and reduces memory consumption by up to 15x, while significantly enhancing Time to First Token (TTFT) by up to 71.9% compared to existing methods.

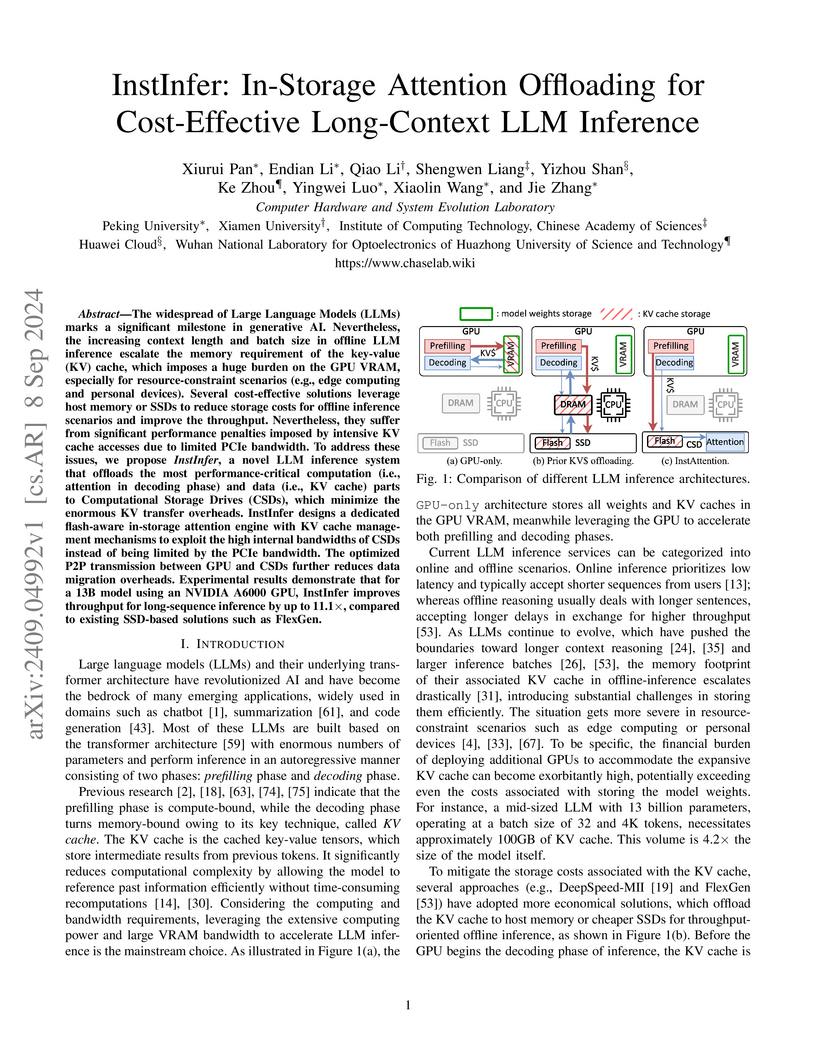

Pan and colleagues developed InstInfer, a system that offloads LLM attention computation to computational storage drives (CSDs) to reduce the key-value cache bottleneck. The system demonstrates up to 11.1x higher throughput and 94.0% lower data migration overheads compared to existing SSD-based solutions for long-context LLM inference.

Recent breakthroughs in reasoning language models have significantly advanced text-based reasoning. On the other hand, Multi-modal Large Language Models (MLLMs) still lag behind, hindered by their outdated internal LLMs. Upgrading these is often prohibitively expensive, as it requires complete vision-language alignment retraining which is costly. To address this issue, we introduce Perception-Reasoning Decoupling, which modularizes the MLLM's reasoning component and makes it easily replaceable. This approach redefines the MLLM's role to convert multi-modal inputs into detailed textual outputs that can be processed by any powerful, external, text-only LLM reasoners. To align the MLLM's perceptual output with the final reasoning task, we propose a novel reinforcement learning algorithm called Visual Perception Optimization (VPO). VPO rewards the MLLM based on the correctness of answers generated by the external reasoner to produce faithful and query-relevant captions. Together, this decoupling pipeline and VPO form our Reasoning-Aligned PerceptIon Decoupling (RAPID) approach. Empirical results show that RAPID achieves significant performance gains on multi-modal reasoning benchmarks. Crucially, RAPID enables a novel inference-time scaling paradigm: Once trained with VPO, the MLLM can be paired with any state-of-the-art LLM reasoner for consistent performance improvement without retraining.

Researchers from Peking University and Huawei Technologies introduced MotionPercept, a large-scale human perceptual evaluation dataset, and MotionCritic, a human motion critic model trained to align with human judgments. The work demonstrates that MotionCritic provides a superior motion quality metric compared to existing methods and can efficiently improve the perceptual quality of generated motions through lightweight fine-tuning of generative models.

3D-MoLM introduces the first framework enabling large language models to directly interpret 3D molecular structures, outperforming prior 1D/2D molecule-text models in tasks like retrieval, captioning, and question answering, especially for 3D-dependent properties. This system integrates a 3D molecular encoder with a language model, fine-tuned on a bespoke 3D-centric instruction dataset.

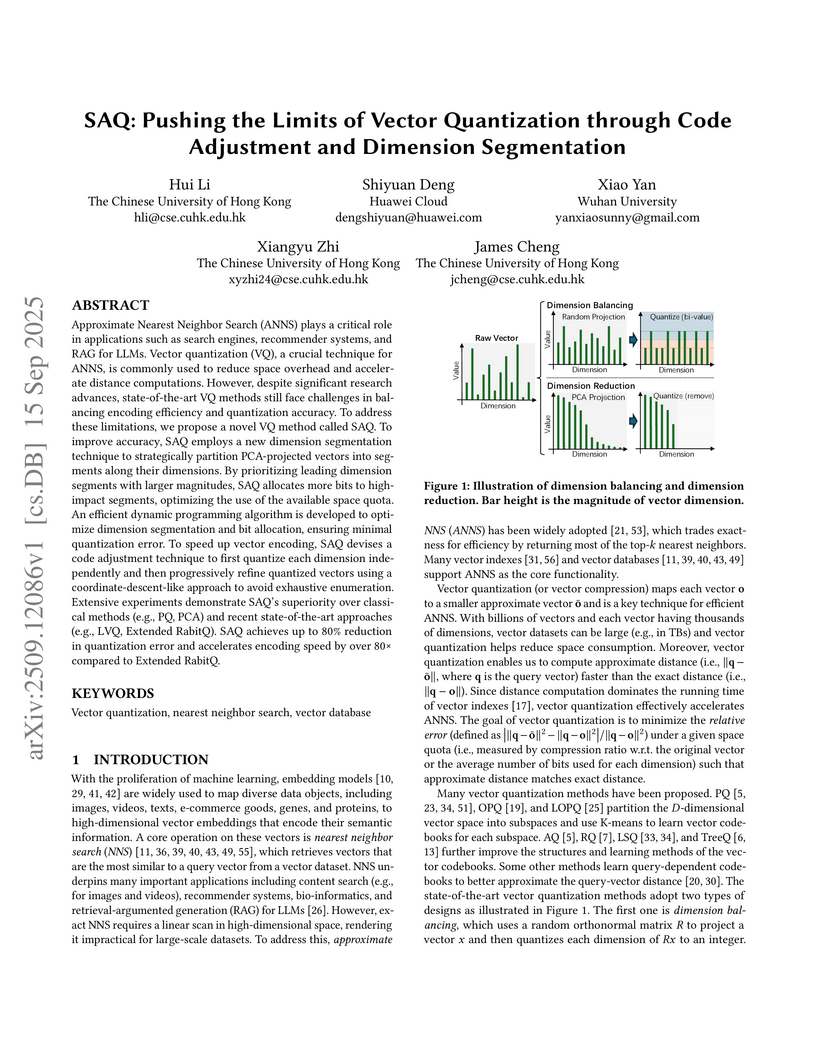

SAQ (Segmented CAQ) advances vector quantization by combining Code Adjustment Quantization for linear-time encoding with Dimension Segmentation for variance-aware bit allocation. This approach significantly reduces approximation error and quantization time, achieving up to 14.6x higher query throughput for Approximate Nearest Neighbor Search while maintaining high recall.

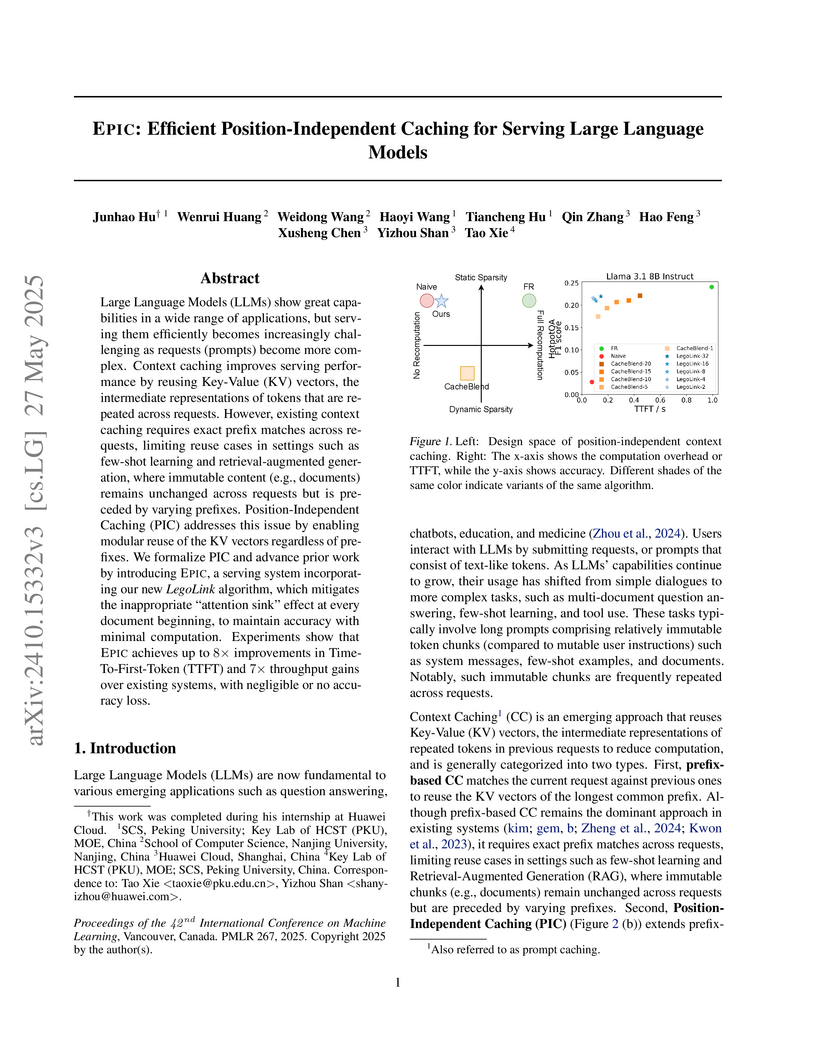

Large Language Models (LLMs) show great capabilities in a wide range of

applications, but serving them efficiently becomes increasingly challenging as

requests (prompts) become more complex. Context caching improves serving

performance by reusing Key-Value (KV) vectors, the intermediate representations

of tokens that are repeated across requests. However, existing context caching

requires exact prefix matches across requests, limiting reuse cases in settings

such as few-shot learning and retrieval-augmented generation, where immutable

content (e.g., documents) remains unchanged across requests but is preceded by

varying prefixes. Position-Independent Caching (PIC) addresses this issue by

enabling modular reuse of the KV vectors regardless of prefixes. We formalize

PIC and advance prior work by introducing EPIC, a serving system incorporating

our new LegoLink algorithm, which mitigates the inappropriate "attention sink"

effect at every document beginning, to maintain accuracy with minimal

computation. Experiments show that EPIC achieves up to 8x improvements in

Time-To-First-Token (TTFT) and 7x throughput gains over existing systems, with

negligible or no accuracy loss.

Recent advancements in multimodal slow-thinking systems have demonstrated

remarkable performance across diverse visual reasoning tasks. However, their

capabilities in text-rich image reasoning tasks remain understudied due to the

lack of a systematic benchmark. To address this gap, we propose OCR-Reasoning,

a comprehensive benchmark designed to systematically assess Multimodal Large

Language Models on text-rich image reasoning tasks. The benchmark comprises

1,069 human-annotated examples spanning 6 core reasoning abilities and 18

practical reasoning tasks in text-rich visual scenarios. Furthermore, unlike

other text-rich image understanding benchmarks that only annotate the final

answers, OCR-Reasoning also annotates the reasoning process simultaneously.

With the annotated reasoning process and the final answers, OCR-Reasoning

evaluates not only the final answers generated by models but also their

reasoning processes, enabling a holistic analysis of their problem-solving

abilities. Leveraging this benchmark, we conducted a comprehensive evaluation

of state-of-the-art MLLMs. Our results demonstrate the limitations of existing

methodologies. Notably, even state-of-the-art MLLMs exhibit substantial

difficulties, with none achieving accuracy surpassing 50\% across

OCR-Reasoning, indicating that the challenges of text-rich image reasoning are

an urgent issue to be addressed. The benchmark and evaluation scripts are

available at this https URL

Text-driven diffusion models have unlocked unprecedented abilities in image generation, whereas their video counterpart still lags behind due to the excessive training cost of temporal modeling. Besides the training burden, the generated videos also suffer from appearance inconsistency and structural flickers, especially in long video synthesis. To address these challenges, we design a \emph{training-free} framework called \textbf{ControlVideo} to enable natural and efficient text-to-video generation. ControlVideo, adapted from ControlNet, leverages coarsely structural consistency from input motion sequences, and introduces three modules to improve video generation. Firstly, to ensure appearance coherence between frames, ControlVideo adds fully cross-frame interaction in self-attention modules. Secondly, to mitigate the flicker effect, it introduces an interleaved-frame smoother that employs frame interpolation on alternated frames. Finally, to produce long videos efficiently, it utilizes a hierarchical sampler that separately synthesizes each short clip with holistic coherency. Empowered with these modules, ControlVideo outperforms the state-of-the-arts on extensive motion-prompt pairs quantitatively and qualitatively. Notably, thanks to the efficient designs, it generates both short and long videos within several minutes using one NVIDIA 2080Ti. Code is available at this https URL.

MemServe proposes a unified system architecture for Large Language Model serving that integrates both inter-request context caching and intra-request disaggregated inference optimizations. The system utilizes an elastic memory pool (MemPool) and a global scheduler, achieving reductions in job completion time and time-to-first-token across various LLM workloads.

Researchers from Soochow University and Huawei Cloud introduce Outlier Tokens Tracing (OTT), a KV cache quantization method that identifies and handles outlier tokens separately during LLM inference, achieving 6.4x memory reduction and 2.3x throughput improvement while maintaining model accuracy through specialized handling of statistical anomalies.

There are no more papers matching your filters at the moment.