Researchers from Monash University and collaborating institutions introduce LongVLM, a VideoLLM that achieves fine-grained understanding of long videos by efficiently integrating local, temporally ordered segment features with global semantic context. The model outperforms previous state-of-the-art methods on the VideoChatGPT benchmark, showing improvements in Detail Orientation (+0.17) and Consistency (+0.65), and achieves higher accuracy on zero-shot video QA datasets like ANET-QA, MSRVTT-QA, and MSVD-QA.

Researchers from Australian National University, University of Sydney, Tencent, and other institutions developed "Motion Anything," a framework for human motion generation that adaptively integrates multimodal conditions like text and music. It employs an attention-based masking strategy to dynamically prioritize motion segments, outperforming prior models in text-to-motion (e.g., 15% lower FID on HumanML3D) and music-to-dance tasks, and introduces a new Text-Music-Dance dataset.

DictAS introduces a self-supervised framework for class-generalizable few-shot anomaly segmentation by modeling anomaly detection as a dictionary lookup task, inspired by human cognition. The approach leverages a frozen CLIP backbone and trains on normal data only, achieving new state-of-the-art performance across diverse industrial and medical datasets while maintaining high efficiency and robustness.

Vision-and-Language Navigation (VLN) suffers from the limited diversity and

scale of training data, primarily constrained by the manual curation of

existing simulators. To address this, we introduce RoomTour3D, a

video-instruction dataset derived from web-based room tour videos that capture

real-world indoor spaces and human walking demonstrations. Unlike existing VLN

datasets, RoomTour3D leverages the scale and diversity of online videos to

generate open-ended human walking trajectories and open-world navigable

instructions. To compensate for the lack of navigation data in online videos,

we perform 3D reconstruction and obtain 3D trajectories of walking paths

augmented with additional information on the room types, object locations and

3D shape of surrounding scenes. Our dataset includes ∼100K open-ended

description-enriched trajectories with ∼200K instructions, and 17K

action-enriched trajectories from 1847 room tour environments. We demonstrate

experimentally that RoomTour3D enables significant improvements across multiple

VLN tasks including CVDN, SOON, R2R, and REVERIE. Moreover, RoomTour3D

facilitates the development of trainable zero-shot VLN agents, showcasing the

potential and challenges of advancing towards open-world navigation.

Shot2Story introduces a new multi-modal benchmark for comprehensive understanding of multi-shot videos, providing detailed shot-level visual and narration captions, high-quality video summaries, and challenging question-answering pairs. The benchmark demonstrates that models leveraging explicit shot structure and audio cues achieve superior performance in complex multi-shot video understanding tasks compared to existing approaches.

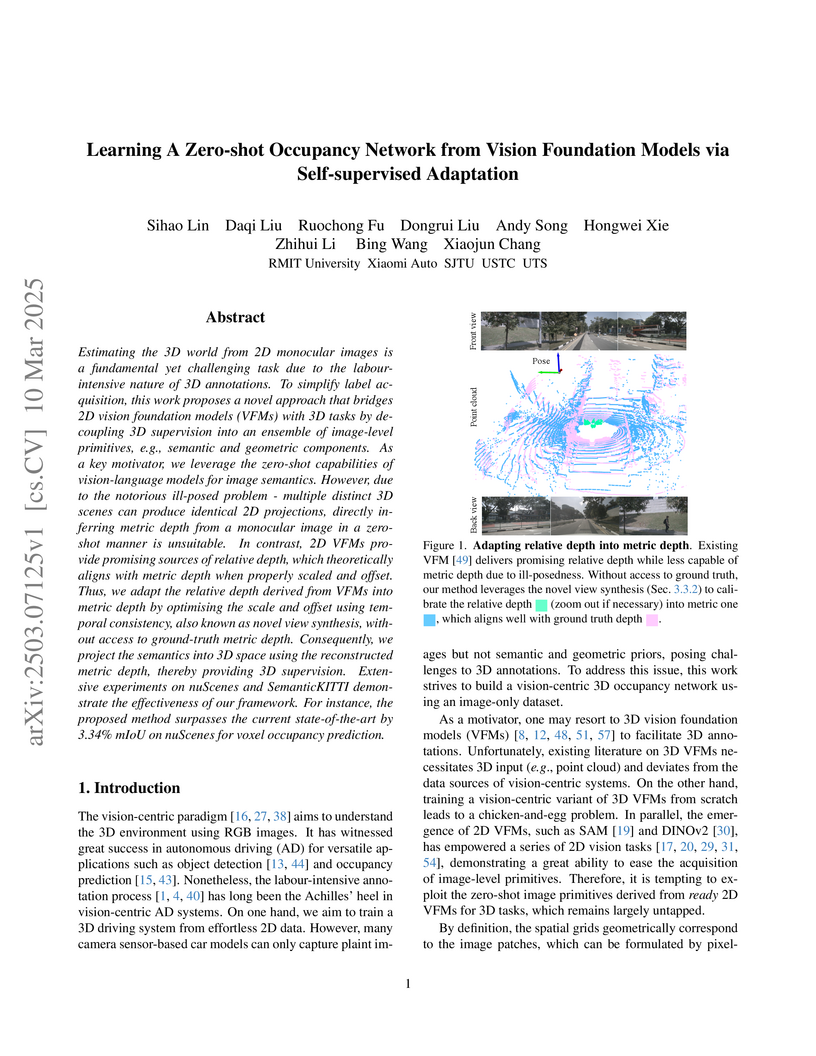

Researchers developed a self-supervised framework for zero-shot 3D occupancy prediction that leverages unlabeled 2D images and Vision Foundation Models. This method converts relative depth from VFMs into metric depth using novel view synthesis, enabling the generation of pseudo-3D supervision to train a 3D occupancy network without 3D annotations.

This paper provides a systematic overview of leveraging Large Language Models for Natural Language Generation evaluation, introducing a coherent taxonomy to categorize existing methods. It details the capabilities and limitations of current LLM-based evaluation techniques, finding them to correlate better with human judgment but incur higher computational costs and exhibit specific biases.

Ensuring the reliability and safety of machine learning models in open-world

deployment is a central challenge in AI safety. This thesis develops both

algorithmic and theoretical foundations to address key reliability issues

arising from distributional uncertainty and unknown classes, from standard

neural networks to modern foundation models like large language models (LLMs).

Traditional learning paradigms, such as empirical risk minimization (ERM),

assume no distribution shift between training and inference, often leading to

overconfident predictions on out-of-distribution (OOD) inputs. This thesis

introduces novel frameworks that jointly optimize for in-distribution accuracy

and reliability to unseen data. A core contribution is the development of an

unknown-aware learning framework that enables models to recognize and handle

novel inputs without labeled OOD data.

We propose new outlier synthesis methods, VOS, NPOS, and DREAM-OOD, to

generate informative unknowns during training. Building on this, we present

SAL, a theoretical and algorithmic framework that leverages unlabeled

in-the-wild data to enhance OOD detection under realistic deployment

conditions. These methods demonstrate that abundant unlabeled data can be

harnessed to recognize and adapt to unforeseen inputs, providing formal

reliability guarantees.

The thesis also extends reliable learning to foundation models. We develop

HaloScope for hallucination detection in LLMs, MLLMGuard for defending against

malicious prompts in multimodal models, and data cleaning methods to denoise

human feedback used for better alignment. These tools target failure modes that

threaten the safety of large-scale models in deployment.

Overall, these contributions promote unknown-aware learning as a new

paradigm, and we hope it can advance the reliability of AI systems with minimal

human efforts.

The integration of conversational agents into our daily lives has become

increasingly common, yet many of these agents cannot engage in deep

interactions with humans. Despite this, there is a noticeable shortage of

datasets that capture multimodal information from human-robot interaction

dialogues. To address this gap, we have recorded a novel multimodal dataset

(MERCI) that encompasses rich embodied interaction data. The process involved

asking participants to complete a questionnaire and gathering their profiles on

ten topics, such as hobbies and favorite music. Subsequently, we initiated

conversations between the robot and the participants, leveraging GPT-4 to

generate contextually appropriate responses based on the participant's profile

and emotional state, as determined by facial expression recognition and

sentiment analysis. Automatic and user evaluations were conducted to assess the

overall quality of the collected data. The results of both evaluations

indicated a high level of naturalness, engagement, fluency, consistency, and

relevance in the conversation, as well as the robot's ability to provide

empathetic responses. It is worth noting that the dataset is derived from

genuine interactions with the robot, involving participants who provided

personal information and conveyed actual emotions.

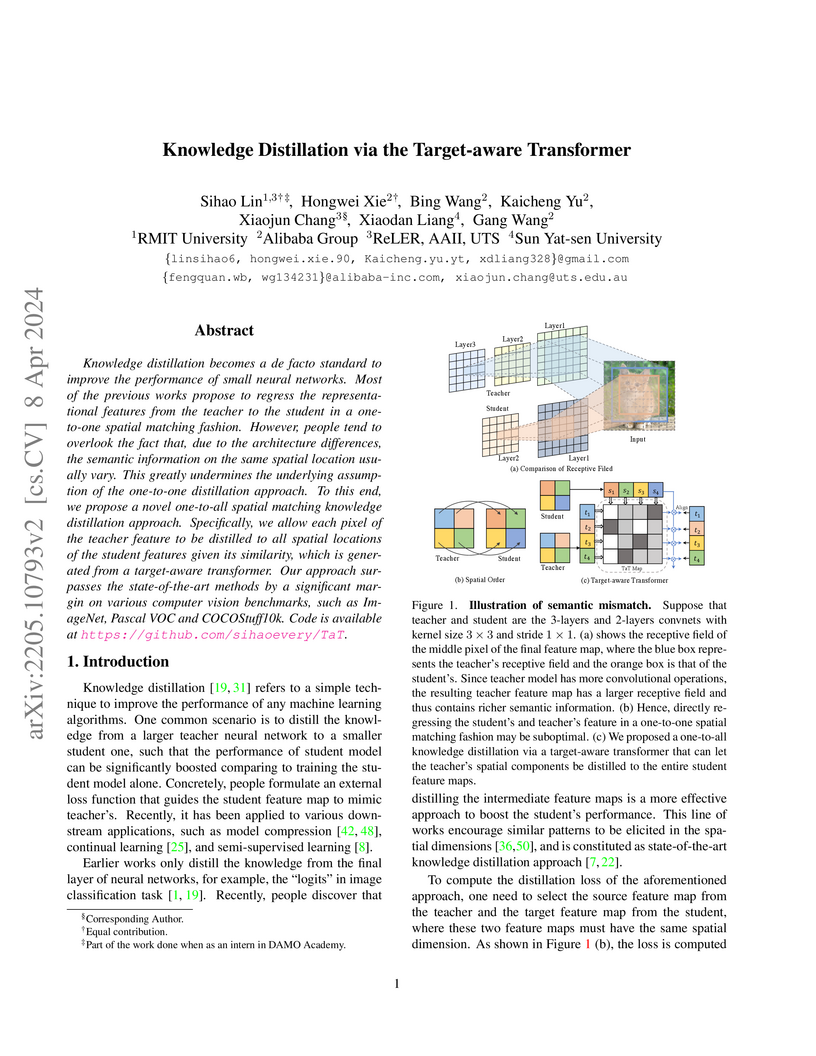

Knowledge distillation becomes a de facto standard to improve the performance of small neural networks. Most of the previous works propose to regress the representational features from the teacher to the student in a one-to-one spatial matching fashion. However, people tend to overlook the fact that, due to the architecture differences, the semantic information on the same spatial location usually vary. This greatly undermines the underlying assumption of the one-to-one distillation approach. To this end, we propose a novel one-to-all spatial matching knowledge distillation approach. Specifically, we allow each pixel of the teacher feature to be distilled to all spatial locations of the student features given its similarity, which is generated from a target-aware transformer. Our approach surpasses the state-of-the-art methods by a significant margin on various computer vision benchmarks, such as ImageNet, Pascal VOC and COCOStuff10k. Code is available at this https URL.

Deep point cloud registration methods face challenges to partial overlaps and

rely on labeled data. To address these issues, we propose UDPReg, an

unsupervised deep probabilistic registration framework for point clouds with

partial overlaps. Specifically, we first adopt a network to learn posterior

probability distributions of Gaussian mixture models (GMMs) from point clouds.

To handle partial point cloud registration, we apply the Sinkhorn algorithm to

predict the distribution-level correspondences under the constraint of the

mixing weights of GMMs. To enable unsupervised learning, we design three

distribution consistency-based losses: self-consistency, cross-consistency, and

local contrastive. The self-consistency loss is formulated by encouraging GMMs

in Euclidean and feature spaces to share identical posterior distributions. The

cross-consistency loss derives from the fact that the points of two partially

overlapping point clouds belonging to the same clusters share the cluster

centroids. The cross-consistency loss allows the network to flexibly learn a

transformation-invariant posterior distribution of two aligned point clouds.

The local contrastive loss facilitates the network to extract discriminative

local features. Our UDPReg achieves competitive performance on the

3DMatch/3DLoMatch and ModelNet/ModelLoNet benchmarks.

The InteR framework introduces an iterative information refinement process that synergistically combines Retrieval Models and Large Language Models for information retrieval. This approach achieved state-of-the-art zero-shot retrieval performance, outperforming previous zero-shot baselines by over 8% absolute MAP gain and often surpassing supervised fine-tuned methods on web search and low-resource benchmarks.

Audio-driven simultaneous gesture generation is vital for human-computer

communication, AI games, and film production. While previous research has shown

promise, there are still limitations. Methods based on VAEs are accompanied by

issues of local jitter and global instability, whereas methods based on

diffusion models are hampered by low generation efficiency. This is because the

denoising process of DDPM in the latter relies on the assumption that the noise

added at each step is sampled from a unimodal distribution, and the noise

values are small. DDIM borrows the idea from the Euler method for solving

differential equations, disrupts the Markov chain process, and increases the

noise step size to reduce the number of denoising steps, thereby accelerating

generation. However, simply increasing the step size during the step-by-step

denoising process causes the results to gradually deviate from the original

data distribution, leading to a significant drop in the quality of the

generated actions and the emergence of unnatural artifacts. In this paper, we

break the assumptions of DDPM and achieves breakthrough progress in denoising

speed and fidelity. Specifically, we introduce a conditional GAN to capture

audio control signals and implicitly match the multimodal denoising

distribution between the diffusion and denoising steps within the same sampling

step, aiming to sample larger noise values and apply fewer denoising steps for

high-speed generation.

20 Jul 2020

Reducing cost and power consumption while maintaining high network access

capability is a key physical-layer requirement of massive Internet of Things

(mIoT) networks. Deploying a hybrid array is a cost- and energy-efficient way

to meet the requirement, but would penalize system degree of freedom (DoF) and

channel estimation accuracy. This is because signals from multiple antennas are

combined by a radio frequency (RF) network of the hybrid array. This paper

presents a novel hybrid uniform circular cylindrical array (UCyA) for mIoT

networks. We design a nested hybrid beamforming structure based on sparse array

techniques and propose the corresponding channel estimation method based on the

second-order channel statistics. As a result, only a small number of RF chains

are required to preserve the DoF of the UCyA. We also propose a new

tensor-based two-dimensional (2-D) direction-of-arrival (DoA) estimation

algorithm tailored for the proposed hybrid array. The algorithm suppresses the

noise components in all tensor modes and operates on the signal data model

directly, hence improving estimation accuracy with an affordable computational

complexity. Corroborated by a Cramer-Rao lower bound (CRLB) analysis,

simulation results show that the proposed hybrid UCyA array and the DoA

estimation algorithm can accurately estimate the 2-D DoAs of a large number of

IoT devices.

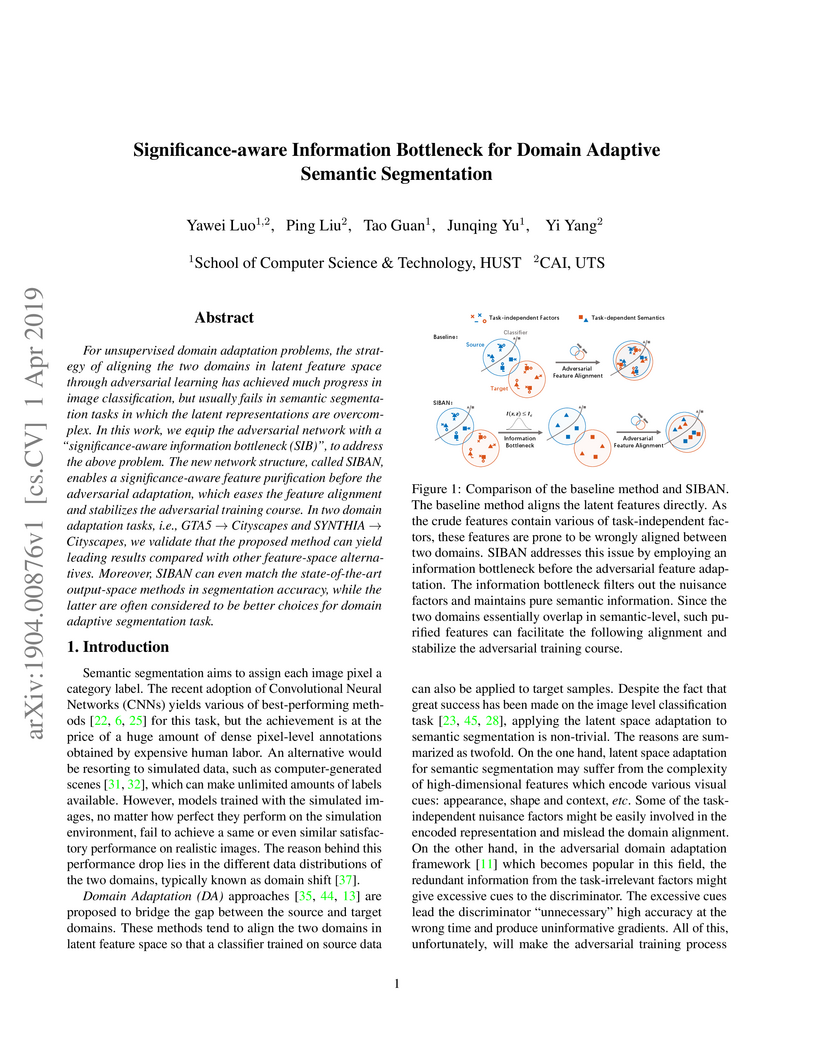

For unsupervised domain adaptation problems, the strategy of aligning the two domains in latent feature space through adversarial learning has achieved much progress in image classification, but usually fails in semantic segmentation tasks in which the latent representations are overcomplex. In this work, we equip the adversarial network with a "significance-aware information bottleneck (SIB)", to address the above problem. The new network structure, called SIBAN, enables a significance-aware feature purification before the adversarial adaptation, which eases the feature alignment and stabilizes the adversarial training course. In two domain adaptation tasks, i.e., GTA5 -> Cityscapes and SYNTHIA -> Cityscapes, we validate that the proposed method can yield leading results compared with other feature-space alternatives. Moreover, SIBAN can even match the state-of-the-art output-space methods in segmentation accuracy, while the latter are often considered to be better choices for domain adaptive segmentation task.

Accurate channel parameter estimation is challenging for wideband

millimeter-wave (mmWave) large-scale hybrid arrays, due to beam squint and much

fewer radio frequency (RF) chains than antennas. This paper presents a novel

joint delay and angle estimation approach for wideband mmWave fully-connected

hybrid uniform cylindrical arrays. We first design a new hybrid beamformer to

reduce the dimension of received signals on the horizontal plane by exploiting

the convergence of the Bessel function, and to reduce the active beams in the

vertical direction through preselection. The important recurrence relationship

of the received signals needed for subspace-based angle and delay estimation is

preserved, even with substantially fewer RF chains than antennas. Then, linear

interpolation is generalized to reconstruct the received signals of the hybrid

beamformer, so that the signals can be coherently combined across the whole

band to suppress the beam squint. As a result, efficient subspace-based

algorithm algorithms can be developed to estimate the angles and delays of

multipath components. The estimated delays and angles are further matched and

correctly associated with different paths in the presence of non-negligible

noises, by putting forth perturbation operations. Simulations show that the

proposed approach can approach the Cram\'{e}r-Rao lower bound (CRLB) of the

estimation with a significantly lower computational complexity than existing

techniques.

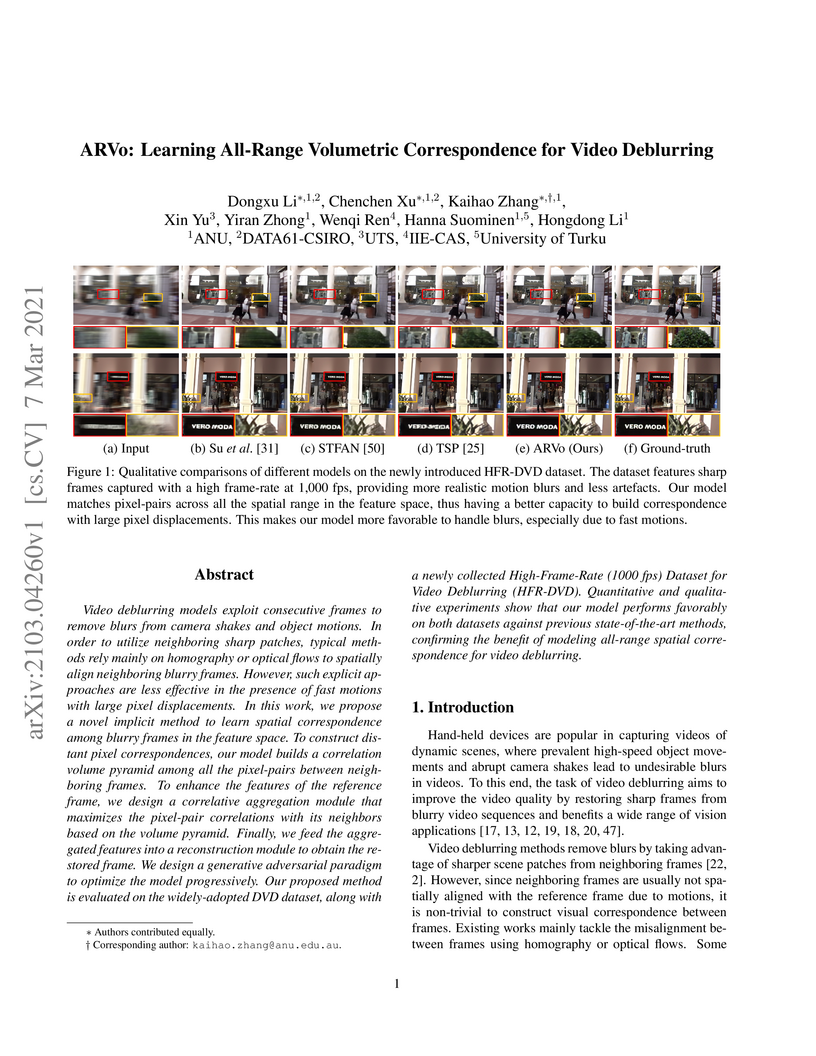

Video deblurring models exploit consecutive frames to remove blurs from

camera shakes and object motions. In order to utilize neighboring sharp

patches, typical methods rely mainly on homography or optical flows to

spatially align neighboring blurry frames. However, such explicit approaches

are less effective in the presence of fast motions with large pixel

displacements. In this work, we propose a novel implicit method to learn

spatial correspondence among blurry frames in the feature space. To construct

distant pixel correspondences, our model builds a correlation volume pyramid

among all the pixel-pairs between neighboring frames. To enhance the features

of the reference frame, we design a correlative aggregation module that

maximizes the pixel-pair correlations with its neighbors based on the volume

pyramid. Finally, we feed the aggregated features into a reconstruction module

to obtain the restored frame. We design a generative adversarial paradigm to

optimize the model progressively. Our proposed method is evaluated on the

widely-adopted DVD dataset, along with a newly collected High-Frame-Rate (1000

fps) Dataset for Video Deblurring (HFR-DVD). Quantitative and qualitative

experiments show that our model performs favorably on both datasets against

previous state-of-the-art methods, confirming the benefit of modeling all-range

spatial correspondence for video deblurring.

Differentiable Architecture Search (DARTS) has received massive attention in

recent years, mainly because it significantly reduces the computational cost

through weight sharing and continuous relaxation. However, more recent works

find that existing differentiable NAS techniques struggle to outperform naive

baselines, yielding deteriorative architectures as the search proceeds. Rather

than directly optimizing the architecture parameters, this paper formulates the

neural architecture search as a distribution learning problem through relaxing

the architecture weights into Gaussian distributions. By leveraging the

natural-gradient variational inference (NGVI), the architecture distribution

can be easily optimized based on existing codebases without incurring more

memory and computational consumption. We demonstrate how the differentiable NAS

benefits from Bayesian principles, enhancing exploration and improving

stability. The experimental results on NAS-Bench-201 and NAS-Bench-1shot1

benchmark datasets confirm the significant improvements the proposed framework

can make. In addition, instead of simply applying the argmax on the learned

parameters, we further leverage the recently-proposed training-free proxies in

NAS to select the optimal architecture from a group architectures drawn from

the optimized distribution, where we achieve state-of-the-art results on the

NAS-Bench-201 and NAS-Bench-1shot1 benchmarks. Our best architecture in the

DARTS search space also obtains competitive test errors with 2.37\%, 15.72\%,

and 24.2\% on CIFAR-10, CIFAR-100, and ImageNet datasets, respectively.

In a model inversion attack, an adversary attempts to reconstruct the data records, used to train a target model, using only the model's output. In launching a contemporary model inversion attack, the strategies discussed are generally based on either predicted confidence score vectors, i.e., black-box attacks, or the parameters of a target model, i.e., white-box attacks. However, in the real world, model owners usually only give out the predicted labels; the confidence score vectors and model parameters are hidden as a defense mechanism to prevent such attacks. Unfortunately, we have found a model inversion method that can reconstruct the input data records based only on the output labels. We believe this is the attack that requires the least information to succeed and, therefore, has the best applicability. The key idea is to exploit the error rate of the target model to compute the median distance from a set of data records to the decision boundary of the target model. The distance, then, is used to generate confidence score vectors which are adopted to train an attack model to reconstruct the data records. The experimental results show that highly recognizable data records can be reconstructed with far less information than existing methods.

Arch-Graph: Acyclic Architecture Relation Predictor for Task-Transferable Neural Architecture Search

Arch-Graph: Acyclic Architecture Relation Predictor for Task-Transferable Neural Architecture Search

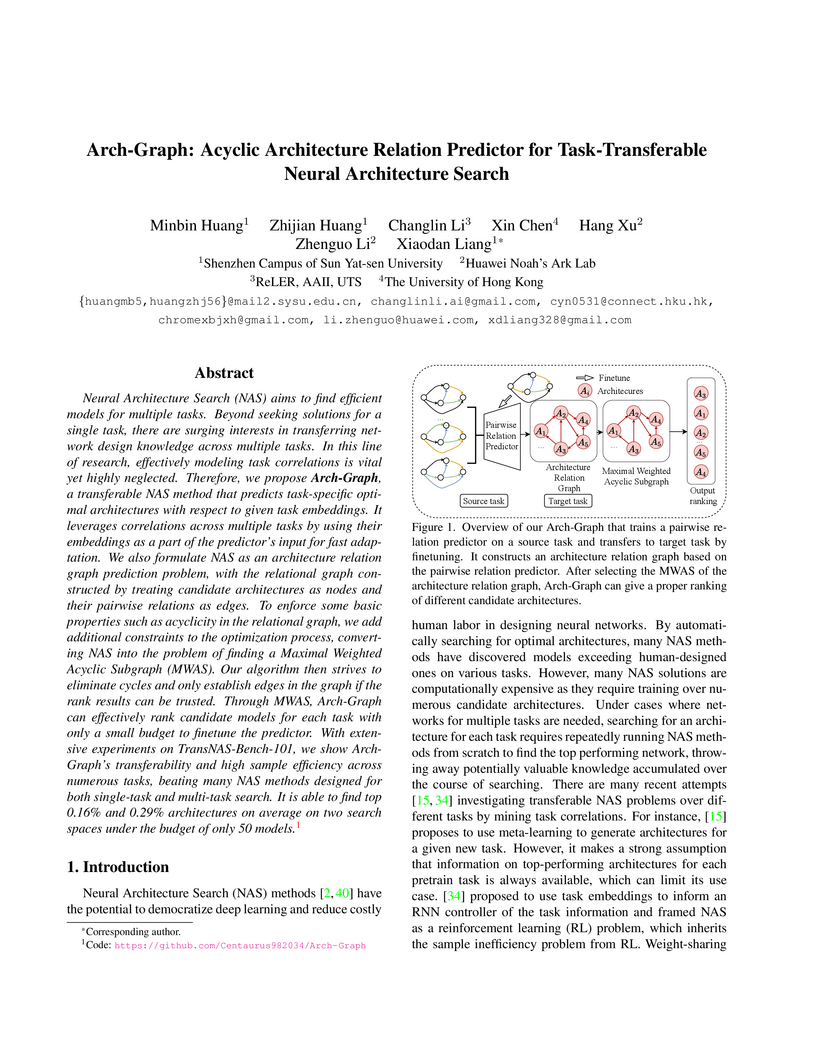

Neural Architecture Search (NAS) aims to find efficient models for multiple

tasks. Beyond seeking solutions for a single task, there are surging interests

in transferring network design knowledge across multiple tasks. In this line of

research, effectively modeling task correlations is vital yet highly neglected.

Therefore, we propose \textbf{Arch-Graph}, a transferable NAS method that

predicts task-specific optimal architectures with respect to given task

embeddings. It leverages correlations across multiple tasks by using their

embeddings as a part of the predictor's input for fast adaptation. We also

formulate NAS as an architecture relation graph prediction problem, with the

relational graph constructed by treating candidate architectures as nodes and

their pairwise relations as edges. To enforce some basic properties such as

acyclicity in the relational graph, we add additional constraints to the

optimization process, converting NAS into the problem of finding a Maximal

Weighted Acyclic Subgraph (MWAS). Our algorithm then strives to eliminate

cycles and only establish edges in the graph if the rank results can be

trusted. Through MWAS, Arch-Graph can effectively rank candidate models for

each task with only a small budget to finetune the predictor. With extensive

experiments on TransNAS-Bench-101, we show Arch-Graph's transferability and

high sample efficiency across numerous tasks, beating many NAS methods designed

for both single-task and multi-task search. It is able to find top 0.16\% and

0.29\% architectures on average on two search spaces under the budget of only

50 models.

There are no more papers matching your filters at the moment.