Ulsan National Institute of Science and Technology

The dynamics of numerous physical systems, such as spins and qubits, can be described as a series of rotation operations, i.e., walks in the manifold of the rotation group. A basic question with practical applications is how likely and under what conditions such walks return to the origin (the identity rotation), which means that the physical system returns to its initial state. In three dimensions, we show that almost every walk in SO(3) or SU(2), even a very complicated one, will preferentially return to the origin simply by traversing the walk twice in a row and uniformly scaling all rotation angles. We explain why traversing the walk only once almost never suffices to return, and comment on the problem in higher dimensions.
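The counting argument behind this result can be checked numerically for SO(3). A single traversal returns to the identity only if the net rotation angle vanishes, which imposes three conditions at once. A doubled traversal also returns whenever the net rotation is a half turn about any axis, which imposes just one condition that a single scale factor can satisfy. The sketch below is an illustration under stated assumptions, not the authors' proof: the three-step walk, the helper names (`quat_mul`, `walk`, `find_scale`) and the bracketing interval are invented for the example, and rotations are represented as unit quaternions, where a half turn has zero scalar part.

```python
import math

def quat_mul(p, q):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    )

def walk(steps, scale):
    """Compose the rotations in `steps`, with every angle multiplied by `scale`."""
    q = (1.0, 0.0, 0.0, 0.0)
    for axis, angle in steps:
        half = 0.5 * scale * angle
        s = math.sin(half)
        q = quat_mul(q, (math.cos(half), s * axis[0], s * axis[1], s * axis[2]))
    return q

def find_scale(steps, lo, hi, iters=80):
    """Bisect on the scalar part of the walk until the net rotation is a half turn."""
    f_lo = walk(steps, lo)[0]
    if f_lo * walk(steps, hi)[0] > 0:
        raise ValueError("scalar part does not change sign on [lo, hi]")
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        f_mid = walk(steps, mid)[0]
        if f_lo * f_mid <= 0:
            hi = mid
        else:
            lo, f_lo = mid, f_mid
    return 0.5 * (lo + hi)

# Hypothetical walk: three rotations about orthogonal unit axes (angles in radians).
steps = [((1.0, 0.0, 0.0), 1.0), ((0.0, 1.0, 0.0), 0.7), ((0.0, 0.0, 1.0), 1.3)]
scale = find_scale(steps, 0.0, 2.0)
single = walk(steps, scale)          # a half turn, far from the identity
doubled = quat_mul(single, single)   # the same scaled walk traversed twice
```

Here `doubled` comes out as the quaternion -1 up to rounding, which is the identity rotation in SO(3), while `single` is a half turn and therefore as far from the identity as a rotation can be.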

Researchers from the University of Oxford and UNIST introduce Decision-informed Neural Networks (DINN), a framework integrating Large Language Models (LLMs) and Decision-Focused Learning for portfolio optimization. DINN achieves higher risk-adjusted returns and improved robustness compared to deep learning baselines, notably yielding a 43.53% annualized return and a 1.04 Sharpe Ratio on the S&P 100 dataset.

Despite their ability to understand chemical knowledge, large language models (LLMs) remain limited in their capacity to propose novel molecules with desired functions (e.g., drug-like properties). In addition, the molecules that LLMs propose can often be challenging to make, and are almost never compatible with automated synthesis approaches. To better enable the discovery of functional small molecules, LLMs need to learn a new molecular language that is more effective in predicting properties and inherently synced with automated synthesis technology. Current molecule LLMs are limited by representing molecules based on atoms. In this paper, we argue that just like tokenizing texts into meaning-bearing (sub-)word tokens instead of characters, molecules should be tokenized at the level of functional building blocks, i.e., parts of molecules that bring unique functions and serve as effective building blocks for real-world automated laboratory synthesis. This motivates us to propose mCLM, a modular Chemical-Language Model that comprises a bilingual language model that understands both natural language descriptions of functions and molecular blocks. mCLM front-loads synthesizability considerations while improving the predicted functions of molecules in a principled manner. mCLM, with only 3B parameters, achieves improvements in synthetic accessibility relative to 7 other leading generative AI methods including GPT-5. When tested on 122 out-of-distribution medicines using only building blocks/tokens that are compatible with automated modular synthesis, mCLM outperforms all baselines in property scores and synthetic accessibility. mCLM can also reason on multiple functions and iteratively self-improve to rescue drug candidates that failed late in clinical trials ("fallen angels").

The PURE framework leverages Large Language Models to build and maintain evolving user profiles by systematically extracting and summarizing information from user reviews. This approach enables train-free personalized recommendations that mitigate LLM token limitations and demonstrates improved performance over other LLM-based methods in continuous sequential recommendation tasks.

Generalization in deep learning is closely tied to the pursuit of flat minima in the loss landscape, yet classical Stochastic Gradient Langevin Dynamics (SGLD) offers no mechanism to bias its dynamics toward such low-curvature solutions. This work introduces Flatness-Aware Stochastic Gradient Langevin Dynamics (fSGLD), designed to efficiently and provably seek flat minima in high-dimensional nonconvex optimization problems. At each iteration, fSGLD uses the stochastic gradient evaluated at parameters perturbed by isotropic Gaussian noise, commonly referred to as Random Weight Perturbation (RWP), thereby optimizing a randomized-smoothing objective that implicitly captures curvature information. Leveraging these properties, we prove that the invariant measure of fSGLD stays close to a stationary measure concentrated on the global minimizers of a loss function regularized by the Hessian trace whenever the inverse temperature and the scale of random weight perturbation are properly coupled. This result provides a rigorous theoretical explanation for the benefits of random weight perturbation. In particular, we establish non-asymptotic convergence guarantees in Wasserstein distance with the best known rate and derive an excess-risk bound for the Hessian-trace regularized objective. Extensive experiments on noisy-label and large-scale vision tasks, in both training-from-scratch and fine-tuning settings, demonstrate that fSGLD achieves superior or comparable generalization and robustness to baseline algorithms while maintaining the computational cost of SGD, about half that of SAM. Hessian-spectrum analysis further confirms that fSGLD converges to significantly flatter minima.
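The update described above can be sketched in a few lines: perturb the parameters with isotropic Gaussian noise, evaluate the gradient at the perturbed point, then take a Langevin step from the unperturbed parameters. This is a minimal sketch under assumptions, not the authors' implementation: the function name `fsgld_step`, the toy quadratic loss, the use of a full rather than minibatch gradient, and the values of `step`, `beta` and `sigma` are all illustrative, and the specific coupling between inverse temperature and perturbation scale required by the theory is not reproduced here.

```python
import math
import random

def fsgld_step(theta, grad_fn, step, beta, sigma, rng):
    """One illustrative update: gradient at perturbed weights plus Langevin noise."""
    # Random weight perturbation: isotropic Gaussian noise of scale sigma.
    perturbed = [t + sigma * rng.gauss(0.0, 1.0) for t in theta]
    grad = grad_fn(perturbed)
    # Langevin noise scale for step size `step` and inverse temperature `beta`.
    noise_scale = math.sqrt(2.0 * step / beta)
    return [t - step * g + noise_scale * rng.gauss(0.0, 1.0)
            for t, g in zip(theta, grad)]

def quadratic_grad(theta):
    """Gradient of the toy loss 0.5 * ||theta||^2 (an assumption for the demo)."""
    return list(theta)

rng = random.Random(0)
theta = [2.0, -3.0]
for _ in range(500):
    theta = fsgld_step(theta, quadratic_grad, step=0.1, beta=1e4, sigma=0.01, rng=rng)
```

Each step needs only one gradient evaluation, which is consistent with the stated cost comparison to SGD and SAM.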

Researchers from UNIST developed three stable classes of Neural Stochastic Differential Equations (Neural SDEs), including Langevin-type, Linear Noise, and Geometric SDEs, designed to overcome training instability and provide theoretical robustness against distribution shifts in irregular time series data. These models consistently achieve superior performance in interpolation, forecasting, and classification tasks, maintaining high accuracy even with significant missing data.

Researchers from Ulsan National Institute of Science and Technology (UNIST) and LG AI Research developed "THEME," a hierarchical contrastive learning framework that integrates semantic stock representations from textual data with temporal market dynamics. This system achieves significant improvements in thematic stock retrieval precision and enhances investment portfolio performance, outperforming real-world thematic ETFs and generic large language models for this specialized financial application.

Forecasting complex time series is an important yet challenging problem that involves various industrial applications. Recently, masked time-series modeling has been proposed to effectively model temporal dependencies for forecasting by reconstructing masked segments from unmasked ones. However, since the semantic information in time series is involved in intricate temporal variations generated by multiple time series components, simply masking a raw time series ignores the inherent semantic structure, which may cause MTM to learn spurious temporal patterns present in the raw data. To capture distinct temporal semantics, we show that masked modeling techniques should address entangled patterns through a decomposition approach. Specifically, we propose ST-MTM, a masked time-series modeling framework with seasonal-trend decomposition, which includes a novel masking method for the seasonal-trend components that incorporates different temporal variations from each component. ST-MTM uses a period masking strategy for seasonal components to produce multiple masked seasonal series based on inherent multi-periodicity and a sub-series masking strategy for trend components to mask temporal regions that share similar variations. The proposed masking method presents an effective pre-training task for learning intricate temporal variations and dependencies. Additionally, ST-MTM introduces a contrastive learning task to support masked modeling by enhancing contextual consistency among multiple masked seasonal representations. Experimental results show that our proposed ST-MTM achieves consistently superior forecasting performance compared to existing masked modeling, contrastive learning, and supervised forecasting methods.
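To make the decomposition-then-masking idea concrete, the sketch below splits a series into trend and seasonal parts with a moving average and then masks whole cycles of the seasonal part. It is only one plausible reading of the description, not ST-MTM itself: the moving-average decomposition, the zero-fill masking, and the names `moving_average`, `decompose` and `mask_periods` are assumptions made for illustration, and the sub-series masking of the trend and the contrastive task are not shown.

```python
def moving_average(x, window):
    """Centered moving average with edge padding; `window` must be odd."""
    if window % 2 == 0:
        raise ValueError("window must be odd")
    half = window // 2
    padded = [x[0]] * half + list(x) + [x[-1]] * half
    return [sum(padded[i:i + window]) / window for i in range(len(x))]

def decompose(x, window):
    """Split a series into (trend, seasonal) with seasonal = x - trend."""
    trend = moving_average(x, window)
    seasonal = [a - b for a, b in zip(x, trend)]
    return trend, seasonal

def mask_periods(seasonal, period, masked_cycles):
    """Zero out every full cycle of length `period` whose index is in `masked_cycles`."""
    return [0.0 if (i // period) in masked_cycles else v
            for i, v in enumerate(seasonal)]

# Hypothetical usage: several masked views of one seasonal component,
# each built from a different candidate period.
series = [float(i % 4) + 0.1 * i for i in range(16)]
trend, seasonal = decompose(series, window=5)
views = [mask_periods(seasonal, period, {1}) for period in (4, 8)]
```

Producing one masked view per candidate period mirrors the idea of generating multiple masked seasonal series from multi-periodicity.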

Markowitz laid the foundation of portfolio theory through the mean-variance optimization (MVO) framework. However, the effectiveness of MVO is contingent on the precise estimation of expected returns, variances, and covariances of asset returns, which are typically uncertain. Machine learning models are becoming useful in estimating uncertain parameters, and such models are trained to minimize prediction errors, such as mean squared errors (MSE), which treat prediction errors uniformly across assets. Recent studies have pointed out that this approach would lead to suboptimal decisions and proposed Decision-Focused Learning (DFL) as a solution, integrating prediction and optimization to improve decision-making outcomes. While studies have shown DFL's potential to enhance portfolio performance, the detailed mechanisms of how DFL modifies prediction models for MVO remain unexplored. This study investigates how DFL adjusts stock return prediction models to optimize decisions in MVO. Theoretically, we show that DFL's gradient can be interpreted as tilting the MSE-based prediction errors by the inverse covariance matrix, effectively incorporating inter-asset correlations into the learning process, while MSE treats each asset's error independently. This tilting mechanism leads to systematic prediction biases where DFL overestimates returns for assets included in portfolios while underestimating excluded assets. Our findings reveal why DFL achieves superior portfolio performance despite higher prediction errors. The strategic biases are features, not flaws.
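The tilting can be seen in the simplest setting. Assume an unconstrained mean-variance problem with known covariance Σ and risk aversion γ, so the optimal weights for a prediction ŷ are Σ⁻¹ŷ/γ. Evaluating those weights against the realized returns y and differentiating the negative realized utility with respect to ŷ gives Σ⁻¹(ŷ − y)/γ, whereas the gradient of the squared error 0.5‖ŷ − y‖² is just ŷ − y. The two-asset sketch below compares the two. The unconstrained setting, the numbers, and the function names are assumptions for illustration; the study's own setup may include constraints that change the exact form.

```python
def inverse_2x2(m):
    """Inverse of a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def mse_gradient(pred, actual):
    """Gradient of 0.5 * ||pred - actual||^2 with respect to pred."""
    return [p - y for p, y in zip(pred, actual)]

def dfl_gradient(pred, actual, cov, risk_aversion=1.0):
    """Decision-loss gradient for unconstrained MVO: inv(cov) @ (pred - actual) / gamma."""
    inv = inverse_2x2(cov)
    err = mse_gradient(pred, actual)
    return [sum(inv[i][j] * err[j] for j in range(2)) / risk_aversion
            for i in range(2)]

# Hypothetical numbers: asset 0 is over-predicted, asset 1 is predicted exactly.
cov = [[0.04, 0.03], [0.03, 0.09]]
pred, actual = [0.06, 0.03], [0.05, 0.03]
mse = mse_gradient(pred, actual)
dfl = dfl_gradient(pred, actual, cov)
```

In this example the squared-error gradient for asset 1 is exactly zero, while the decision-loss gradient for asset 1 is nonzero because the error on asset 0 is propagated through the off-diagonal terms of the inverse covariance.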

PosterLlama introduces an LLM-based multi-modal model that reformulates layout generation as HTML sequence generation, integrating an LLM's design knowledge with fine-grained visual understanding. The approach achieves state-of-the-art performance in content-aware layout generation and demonstrates robustness against dataset artifacts, supporting diverse conditional generation tasks.

Northwestern Polytechnical University, Northeastern University, Sun Yat-Sen University, Ghent University, Korea University, Nanjing University, Zhejiang University, University of Michigan, Xidian University, University of Electronic Science and Technology of China, Central South University, University of Hong Kong, Technology Innovation Institute, Yale University, Universitat Pompeu Fabra, NVIDIA, Huawei, Nanyang Technological University, University of Granada, China Telecom, Ulsan National Institute of Science and Technology, King’s College London, Singapore University of Technology and Design, Aalto University, Virginia Tech, University of Houston, East China Normal University, KTH Royal Institute of Technology, University of Oulu, Khalifa University, LightOn, CentraleSupélec, University of Leeds, IMEC, Nokia Bell Labs, CEA-Leti, University of York, Orange, Ericsson, Brunel University London, Qualcomm, China Unicom, BubbleRAN, ITU, EMIRATES INTEGRATED TELECOMMUNICATIONS COMPANY, FENTECH, GSMA, RIMEDO LABS, KATIM, CHINA MOBILE COMMUNICATIONS CORPORATION, Beijing Institute of Technology, Eurécom

A comprehensive white paper from the GenAINet Initiative introduces Large Telecom Models (LTMs) as a novel framework for integrating AI into telecommunications infrastructure, providing a detailed roadmap for innovation while addressing critical challenges in scalability, hardware requirements, and regulatory compliance through insights from a diverse coalition of academic, industry and regulatory experts.

Chinese Academy of Sciences, University of Science and Technology of China, Shanghai Jiao Tong University, Nagoya University, Institut de Robòtica i Informàtica Industrial, Aalborg University, EPFL, University of Tokyo, Huazhong University of Science and Technology, Ulsan National Institute of Science and Technology, Keio University, Southeast University, Beijing University of Posts and Telecommunications, King Abdullah University of Science and Technology, Universitat de Barcelona, University of Tsukuba, UCLouvain, Michigan Technological University, University of Liège, University of Science and Technology, Korea Institute of Science and Technology, University of the Bundeswehr Munich, Shenzhen Institutes of Advanced Technology, Max-Planck Institute for Informatics, Computer Vision Center, Universidad Industrial de Santander, Suzhou Institute for Advanced Research, State Key Laboratory of Networking and Switching Technology, Leipzig University of Applied Sciences, Escuela Superior Politecnica del Litoral, EVS Broadcast Equipment, Intellindust AI Lab, Opus AI Research, Sportradar, TAHAKOM, Eidos.ai, KIST School, int8.io, MIXI Inc., Intelligent Perception and Image Understanding Lab, Playbox Inc., Laboratory for Biosignal Processing

The SoccerNet 2025 Challenges mark the fifth annual edition of the SoccerNet open benchmarking effort, dedicated to advancing computer vision research in football video understanding. This year's challenges span four vision-based tasks: (1) Team Ball Action Spotting, focused on detecting ball-related actions in football broadcasts and assigning actions to teams; (2) Monocular Depth Estimation, targeting the recovery of scene geometry from single-camera broadcast clips through relative depth estimation for each pixel; (3) Multi-View Foul Recognition, requiring the analysis of multiple synchronized camera views to classify fouls and their severity; and (4) Game State Reconstruction, aimed at localizing and identifying all players from a broadcast video to reconstruct the game state on a 2D top-view of the field. Across all tasks, participants were provided with large-scale annotated datasets, unified evaluation protocols, and strong baselines as starting points.
This report presents the results of each challenge, highlights the top-performing solutions, and provides insights into the progress made by the community. The SoccerNet Challenges continue to serve as a driving force for reproducible, open research at the intersection of computer vision, artificial intelligence, and sports. Detailed information about the tasks, challenges, and leaderboards can be found at this https URL, with baselines and development kits available at this https URL.

07 Oct 2025

This research extends the understanding of relevant deformations in 2d (0,2) supersymmetric gauge theories beyond mass terms to include non-mass holomorphic couplings. The paper establishes a geometric criterion for renormalization group (RG) flow direction using the Sasaki-Einstein 7-manifold volume and derives operator scaling dimensions directly from divisor volumes in the dual Calabi-Yau geometry. The work also demonstrates the interplay of these deformations with triality and birational transformations, showing the invariance of the R-symmetry refined Hilbert series.

30 Sep 2025

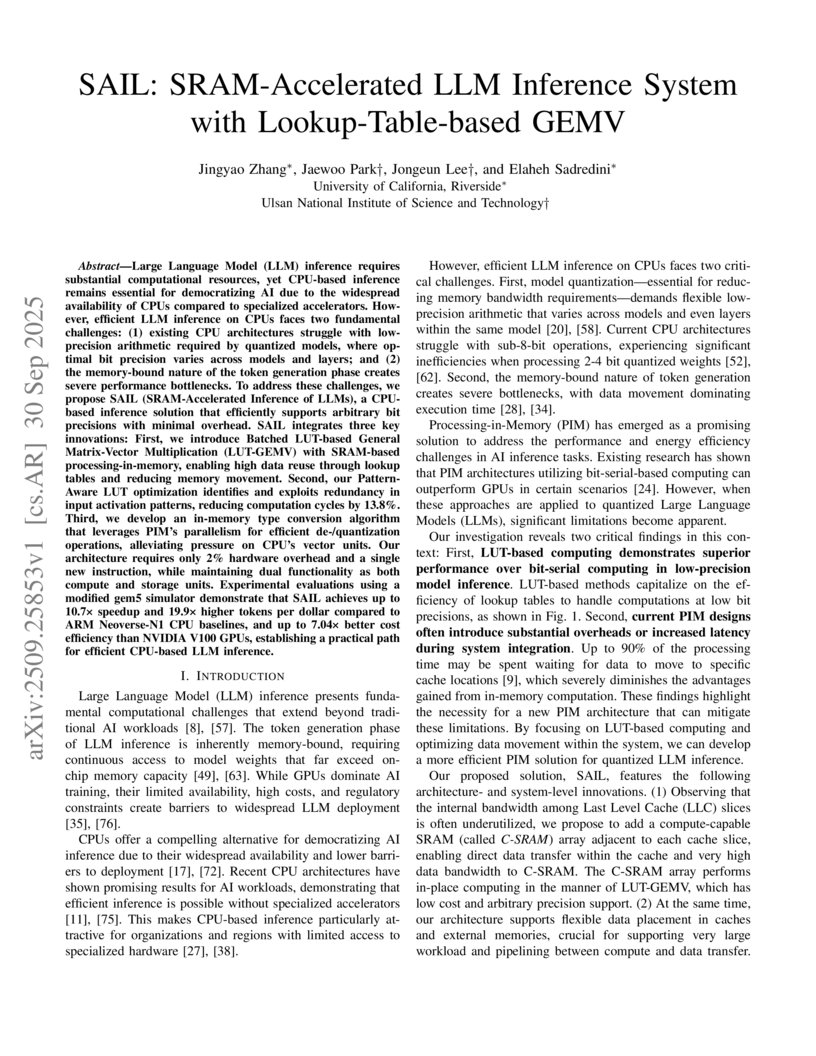

Large Language Model (LLM) inference requires substantial computational resources, yet CPU-based inference remains essential for democratizing AI due to the widespread availability of CPUs compared to specialized accelerators. However, efficient LLM inference on CPUs faces two fundamental challenges: (1) existing CPU architectures struggle with low-precision arithmetic required by quantized models, where optimal bit precision varies across models and layers; and (2) the memory-bound nature of the token generation phase creates severe performance bottlenecks. To address these challenges, we propose SAIL (SRAM-Accelerated Inference of LLMs), a CPU-based inference solution that efficiently supports arbitrary bit precisions with minimal overhead. SAIL integrates three key innovations: First, we introduce Batched LUT-based General Matrix-Vector Multiplication (LUT-GEMV) with SRAM-based processing-in-memory, enabling high data reuse through lookup tables and reducing memory movement. Second, our Pattern-Aware LUT optimization identifies and exploits redundancy in input activation patterns, reducing computation cycles by 13.8%. Third, we develop an in-memory type conversion algorithm that leverages PIM's parallelism for efficient de-/quantization operations, alleviating pressure on CPU's vector units. Our architecture requires only 2% hardware overhead and a single new instruction, while maintaining dual functionality as both compute and storage units. Experimental evaluations using a modified gem5 simulator demonstrate that SAIL achieves up to 10.7x speedup and 19.9x higher tokens per dollar compared to ARM Neoverse-N1 CPU baselines, and up to 7.04x better cost efficiency than NVIDIA V100 GPUs, establishing a practical path for efficient CPU-based LLM inference.
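The general idea of a lookup-table matrix-vector product with arbitrary weight precision can be sketched in software. Activations are split into small groups, every possible subset sum of a group is precomputed once, and each weight bit-plane then selects a table entry instead of performing multiplications; the per-plane results are combined with powers of two. This is a generic sketch under assumptions, not SAIL's in-SRAM design: the function names, the group size of 4, unsigned integer weights and integer activations are all illustrative choices.

```python
def build_lut(acts):
    """Table of all subset sums of one activation group (2**len(acts) entries)."""
    lut = [0] * (1 << len(acts))
    for idx in range(1, len(lut)):
        low = idx & -idx                      # lowest set bit of idx
        lut[idx] = lut[idx ^ low] + acts[low.bit_length() - 1]
    return lut

def lut_gemv(weights, acts, bits, group=4):
    """Compute weights @ acts for unsigned `bits`-bit weights using table lookups."""
    luts = [build_lut(acts[s:s + group]) for s in range(0, len(acts), group)]
    out = []
    for row in weights:
        total = 0
        for b in range(bits):                 # one pass per weight bit-plane
            plane_sum = 0
            for k, lut in enumerate(luts):
                idx = 0
                for j, w in enumerate(row[k * group:(k + 1) * group]):
                    idx |= ((w >> b) & 1) << j
                plane_sum += lut[idx]         # lookup replaces multiply-accumulate
            total += plane_sum * (1 << b)
        out.append(total)
    return out

# Hypothetical 2-bit weights and integer activations.
weights = [[3, 1, 0, 2, 1], [2, 2, 3, 0, 1]]
acts = [1, -2, 3, 4, 5]
result = lut_gemv(weights, acts, bits=2)
```

The tables depend only on the activations, so they are shared across all rows and all bit-planes, which is where the data reuse comes from; changing `bits` changes only the number of passes.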

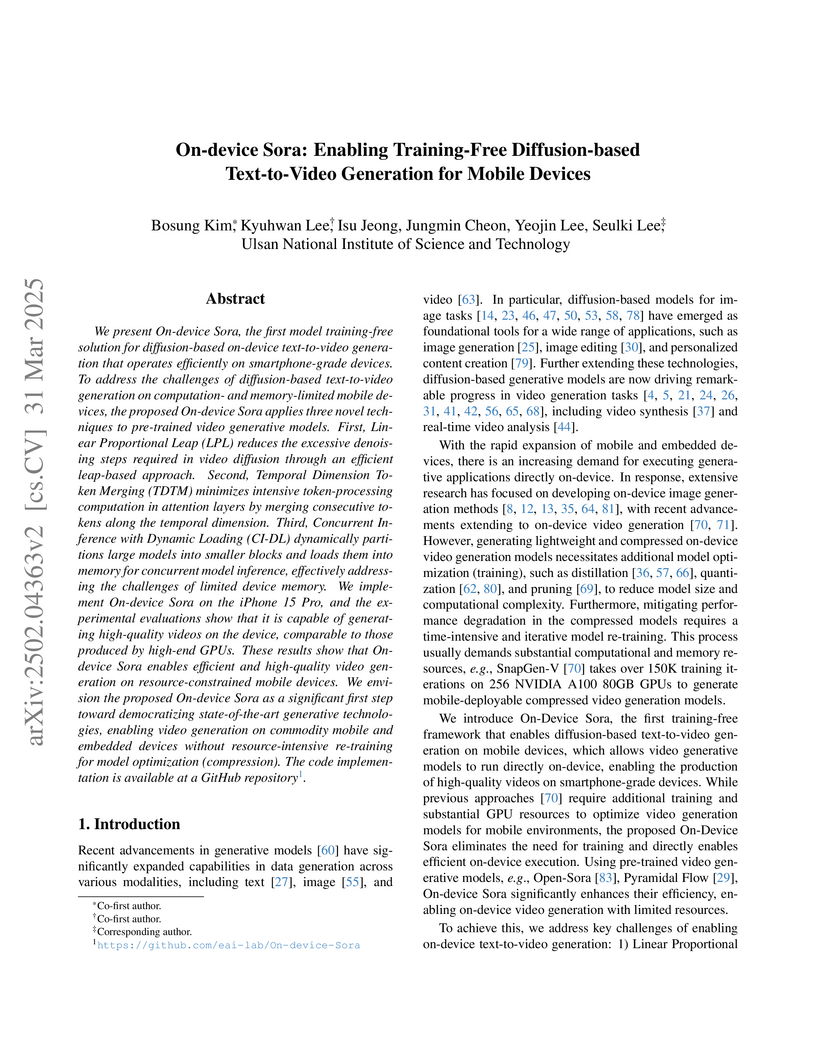

We present On-device Sora, the first model training-free solution for diffusion-based on-device text-to-video generation that operates efficiently on smartphone-grade devices. To address the challenges of diffusion-based text-to-video generation on computation- and memory-limited mobile devices, the proposed On-device Sora applies three novel techniques to pre-trained video generative models. First, Linear Proportional Leap (LPL) reduces the excessive denoising steps required in video diffusion through an efficient leap-based approach. Second, Temporal Dimension Token Merging (TDTM) minimizes intensive token-processing computation in attention layers by merging consecutive tokens along the temporal dimension. Third, Concurrent Inference with Dynamic Loading (CI-DL) dynamically partitions large models into smaller blocks and loads them into memory for concurrent model inference, effectively addressing the challenges of limited device memory. We implement On-device Sora on the iPhone 15 Pro, and the experimental evaluations show that it is capable of generating high-quality videos on the device, comparable to those produced by high-end GPUs. These results show that On-device Sora enables efficient and high-quality video generation on resource-constrained mobile devices. We envision the proposed On-device Sora as a significant first step toward democratizing state-of-the-art generative technologies, enabling video generation on commodity mobile and embedded devices without resource-intensive re-training for model optimization (compression). The code implementation is available at a GitHub repository (this https URL).
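Merging consecutive tokens along the temporal dimension can be pictured with a small sketch: tokens at the same spatial position in two adjacent frames are combined into one, halving the number of tokens the attention layer has to process, and the result is expanded back to the original frame count afterwards. The sketch below is a guess at the general shape of such a step, not the On-device Sora implementation: averaging as the merge rule, duplication as the unmerge rule, and the names `merge_temporal` and `unmerge_temporal` are all assumptions.

```python
def merge_temporal(tokens):
    """Average each pair of adjacent frames; tokens[t][n] is a feature vector."""
    merged = []
    for t in range(0, len(tokens) - 1, 2):
        merged.append([[0.5 * (a + b) for a, b in zip(u, v)]
                       for u, v in zip(tokens[t], tokens[t + 1])])
    if len(tokens) % 2:                 # an odd trailing frame is kept as is
        merged.append(tokens[-1])
    return merged

def unmerge_temporal(merged, num_frames):
    """Restore the original frame count by repeating each merged frame."""
    out = []
    for frame in merged:
        out.extend([frame, frame])
    return out[:num_frames]

# Hypothetical input: 3 frames, 1 spatial token per frame, 2 features per token.
frames = [[[0.0, 2.0]], [[2.0, 4.0]], [[10.0, 10.0]]]
merged = merge_temporal(frames)
restored = unmerge_temporal(merged, len(frames))
```

Because attention cost grows faster than linearly in the number of tokens, halving the temporal token count reduces the work in those layers by more than half.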

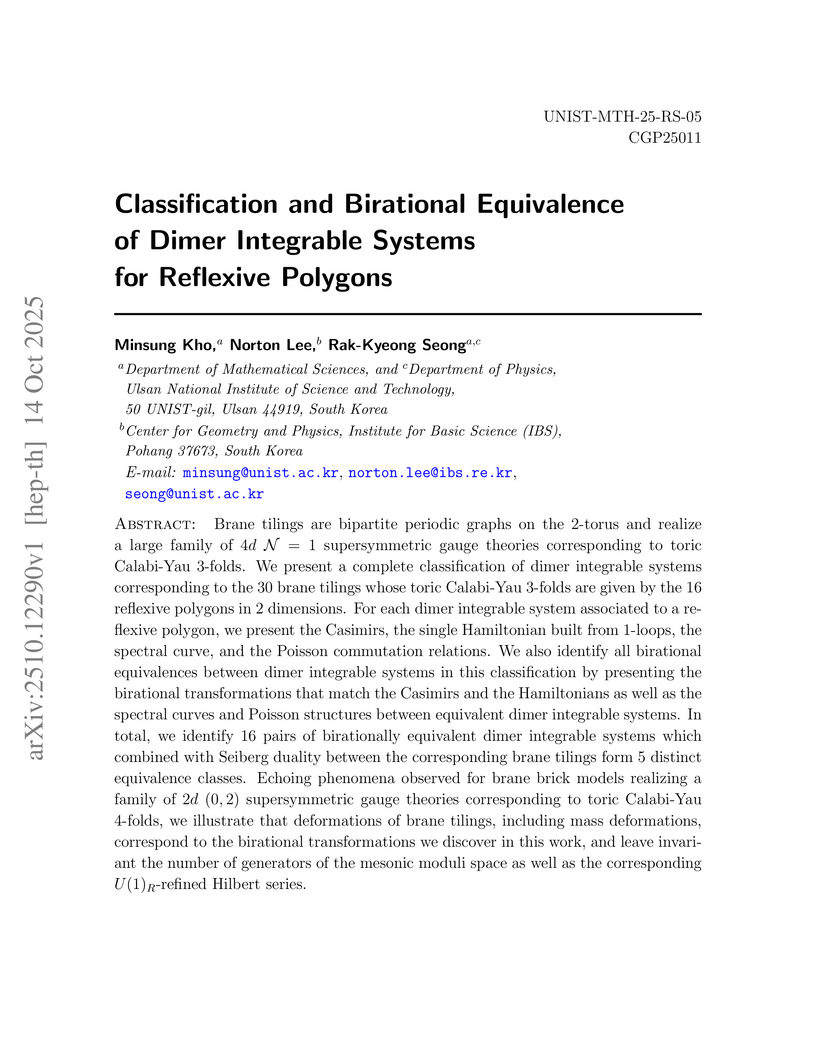

Brane tilings are bipartite periodic graphs on the 2-torus and realize a large family of 4d N=1 supersymmetric gauge theories corresponding to toric Calabi-Yau 3-folds. We present a complete classification of dimer integrable systems corresponding to the 30 brane tilings whose toric Calabi-Yau 3-folds are given by the 16 reflexive polygons in 2 dimensions. For each dimer integrable system associated to a reflexive polygon, we present the Casimirs, the single Hamiltonian built from 1-loops, the spectral curve, and the Poisson commutation relations. We also identify all birational equivalences between dimer integrable systems in this classification by presenting the birational transformations that match the Casimirs and the Hamiltonians as well as the spectral curves and Poisson structures between equivalent dimer integrable systems. In total, we identify 16 pairs of birationally equivalent dimer integrable systems which combined with Seiberg duality between the corresponding brane tilings form 5 distinct equivalence classes. Echoing phenomena observed for brane brick models realizing a family of 2d (0,2) supersymmetric gauge theories corresponding to toric Calabi-Yau 4-folds, we illustrate that deformations of brane tilings, including mass deformations, correspond to the birational transformations we discover in this work, and leave invariant the number of generators of the mesonic moduli space as well as the corresponding U(1)R-refined Hilbert series.

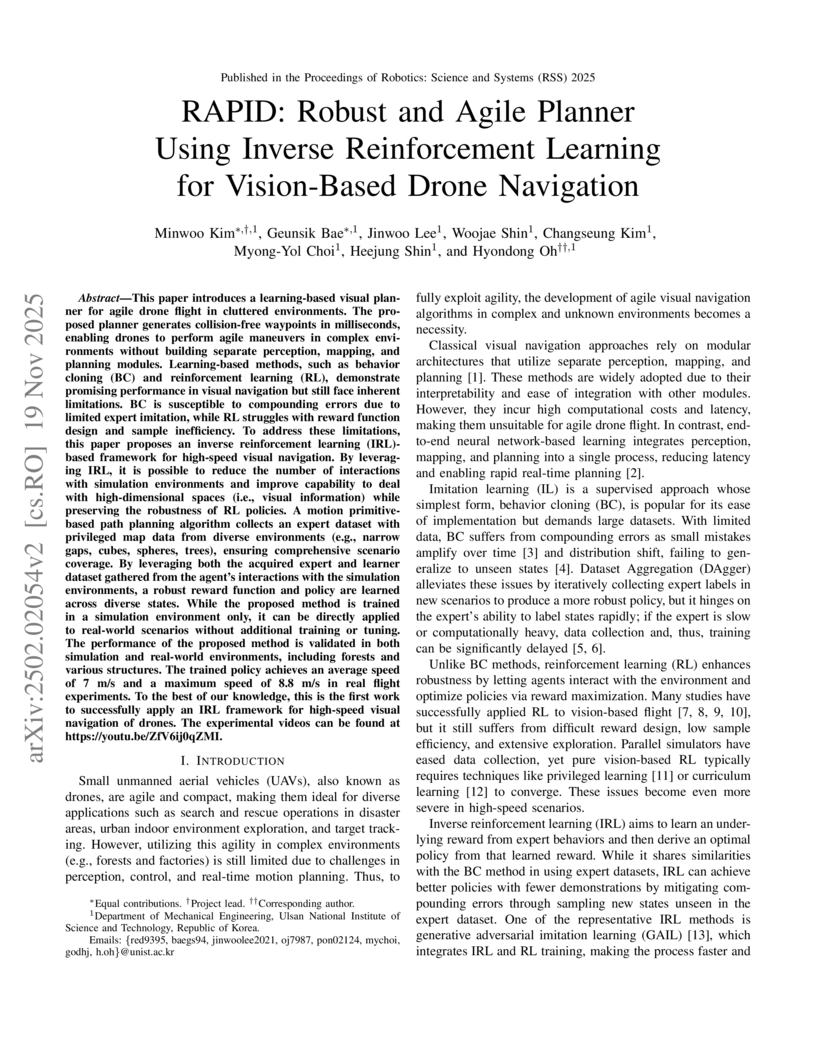

This paper introduces a learning-based visual planner for agile drone flight in cluttered environments. The proposed planner generates collision-free waypoints in milliseconds, enabling drones to perform agile maneuvers in complex environments without building separate perception, mapping, and planning modules. Learning-based methods, such as behavior cloning (BC) and reinforcement learning (RL), demonstrate promising performance in visual navigation but still face inherent limitations. BC is susceptible to compounding errors due to limited expert imitation, while RL struggles with reward function design and sample inefficiency. To address these limitations, this paper proposes an inverse reinforcement learning (IRL)-based framework for high-speed visual navigation. By leveraging IRL, it is possible to reduce the number of interactions with simulation environments and improve capability to deal with high-dimensional spaces while preserving the robustness of RL policies. A motion primitive-based path planning algorithm collects an expert dataset with privileged map data from diverse environments, ensuring comprehensive scenario coverage. By leveraging both the acquired expert and learner dataset gathered from the agent's interactions with the simulation environments, a robust reward function and policy are learned across diverse states. While the proposed method is trained in a simulation environment only, it can be directly applied to real-world scenarios without additional training or tuning. The performance of the proposed method is validated in both simulation and real-world environments, including forests and various structures. The trained policy achieves an average speed of 7 m/s and a maximum speed of 8.8 m/s in real flight experiments. To the best of our knowledge, this is the first work to successfully apply an IRL framework for high-speed visual navigation of drones.

Twisted bilayer MoTe2 near two-degree twists has emerged as a platform for exotic correlated topological phases, including ferromagnetism and a non-Abelian fractional spin Hall insulator. Here we reveal the unexpected emergence of an intervalley superconducting phase that intervenes between these two states in the half-filled second moiré bands. Using a continuum model and exact diagonalization, we identify superconductivity through multiple signatures: negative binding energy, a dominant pair-density eigenvalue, finite superfluid stiffness, and pairing symmetry consistent with a time-reversal-symmetric nodal extended s-wave state. Remarkably, our numerical calculation suggests a continuous transition between superconductivity and the non-Abelian fractional spin Hall insulator, in which topology and symmetry evolve simultaneously, supported by an effective field-theory description. Our results establish higher moiré bands as fertile ground for intertwined superconductivity and topological order, and point to experimentally accessible routes for realizing superconductivity in twisted bilayer MoTe2.

Handling missing data in time series classification remains a significant challenge in various domains. Traditional methods often rely on imputation, which may introduce bias or fail to capture the underlying temporal dynamics. In this paper, we propose TANDEM (Temporal Attention-guided Neural Differential Equations for Missingness), an attention-guided neural differential equation framework that effectively classifies time series data with missing values. Our approach integrates raw observation, interpolated control path, and continuous latent dynamics through a novel attention mechanism, allowing the model to focus on the most informative aspects of the data. We evaluate TANDEM on 30 benchmark datasets and a real-world medical dataset, demonstrating its superiority over existing state-of-the-art methods. Our framework not only improves classification accuracy but also provides insights into the handling of missing data, making it a valuable tool in practice.

12 Oct 2025

Hybrid oscillator-qubit processors have recently demonstrated high-fidelity control of both continuous- and discrete-variable information processing. However, most of the quantum algorithms remain limited to homogeneous quantum architectures. Here, we present a compiler for hybrid oscillator-qubit processors, implementing state preparation and time evolution. In hybrid oscillator-qubit processors, this compiler invokes generalized quantum signal processing (GQSP) to constructively synthesize arbitrary bosonic phase gates with moderate circuit depth O(log(1/ε)). The approximation cost is scaled by the Fourier bandwidth of the target bosonic phase, rather than by the degree of nonlinearity. Armed with GQSP, nonadiabatic molecular dynamics can be decomposed with arbitrary-phase potential propagators. Compared to fully discrete encodings, our approach avoids the overhead of truncating continuous variables, showing linear dependence on the number of vibration modes while trading success probability for circuit depth. We validate our method on the uracil cation, a canonical system whose accurate modeling requires anharmonic vibronic models, estimating the cost for state preparation and time evolution.
