KVFlow introduces a workflow-aware KV cache management framework that leverages an "Agent Step Graph" and overlapped prefetching to enhance serving efficiency for LLM-based multi-agent workflows. It achieved up to 1.83x speedup for single workflows and 2.19x for concurrent workflows compared to reactive caching methods.

Benchmark quality is critical for meaningful evaluation and sustained progress in time series forecasting, particularly given the recent rise of pretrained models. Existing benchmarks often have narrow domain coverage or overlook important real-world settings, such as tasks with covariates. Additionally, their aggregation procedures often lack statistical rigor, making it unclear whether observed performance differences reflect true improvements or random variation. Many benchmarks also fail to provide infrastructure for consistent evaluation or are too rigid to integrate into existing pipelines. To address these gaps, we propose fev-bench, a benchmark comprising 100 forecasting tasks across seven domains, including 46 tasks with covariates. Supporting the benchmark, we introduce fev, a lightweight Python library for benchmarking forecasting models that emphasizes reproducibility and seamless integration with existing workflows. Usingfev, fev-bench employs principled aggregation methods with bootstrapped confidence intervals to report model performance along two complementary dimensions: win rates and skill scores. We report results on fev-bench for various pretrained, statistical and baseline models, and identify promising directions for future research.

This survey paper from Amazon and AWS provides a comprehensive review of the current landscape for applying Large Language Models to tabular data, covering tasks such as prediction, data generation, and table understanding. It systematically categorizes key techniques, datasets, and metrics, finding that LLMs excel in table understanding and generation when appropriately serialized and prompted, though they often do not yet surpass traditional methods for prediction tasks.

Marconi, developed by researchers at Princeton University and Amazon Web Services, is the first system to implement efficient prefix caching for Hybrid Large Language Models, which combine Attention and State Space Model layers. It introduces intelligent cache admission and FLOP-aware eviction policies, achieving significantly higher token hit rates and reduced Time To First Token latency compared to previous approaches.

Towards Effective GenAI Multi-Agent Collaboration: Design and Evaluation for Enterprise Applications

Towards Effective GenAI Multi-Agent Collaboration: Design and Evaluation for Enterprise Applications

Researchers at AWS Bedrock developed a hierarchical multi-agent collaboration framework designed for enterprise applications, addressing complex tasks through coordinated specialist agents. The framework achieved a 90% Goal Success Rate across diverse domains and demonstrated improved communication efficiency through mechanisms like payload referencing and dynamic routing.

The A2AS framework introduces a runtime security and self-defense model for agentic AI systems, addressing prompt injection and other vulnerabilities through native, context-window-integrated controls. This approach aims to provide robust protection without incurring significant latency, external dependencies, or performance degradation often associated with current security solutions.

12 Nov 2025

We present CID-GraphRAG (Conversational Intent-Driven Graph Retrieval Augmented Generation), a novel framework that addresses the limitations of existing dialogue systems in maintaining both contextual coherence and goal-oriented progression in multi-turn customer service conversations. Unlike traditional RAG systems that rely solely on semantic similarity (Conversation RAG) or standard knowledge graphs (GraphRAG), CID-GraphRAG constructs dynamic intent transition graphs from goal achieved historical dialogues and implements a dual-retrieval mechanism that adaptively balances intent-based graph traversal with semantic search. This approach enables the system to simultaneously leverage both conversional intent flow patterns and contextual semantics, significantly improving retrieval quality and response quality. In extensive experiments on real-world customer service dialogues, we employ both automatic metrics and LLM-as-judge assessments, demonstrating that CID-GraphRAG significantly outperforms both semantic-based Conversation RAG and intent-based GraphRAG baselines across all evaluation criteria. Quantitatively, CID-GraphRAG demonstrates substantial improvements over Conversation RAG across automatic metrics, with relative gains of 11% in BLEU, 5% in ROUGE-L, 6% in METEOR, and most notably, a 58% improvement in response quality according to LLM-as-judge evaluations. These results demonstrate that the integration of intent transition structures with semantic retrieval creates a synergistic effect that neither approach achieves independently, establishing CID-GraphRAG as an effective framework for addressing the challenges of maintaining contextual coherence and goal-oriented progression in knowledge-intensive multi-turn dialogues.

UCLA researchers developed a formal definition and efficient "scrubbing" methods for selective forgetting in deep networks, allowing information about specific training data to be removed from model weights while providing a measurable bound on retained information. These methods demonstrate superior information removal and utility preservation compared to baseline approaches by applying controlled noise to model weights based on information theory and stochastic gradient descent stability.

Researchers introduce a unified framework, ALDI, for Domain Adaptive Object Detection, which exposes systematic benchmarking flaws and allows for fair comparisons among methods. They also propose ALDI++, a new method that achieves state-of-the-art performance across diverse benchmarks, and release CFC-DAOD, a real-world dataset for environmental monitoring.

Researchers from Caltech, the University of Washington, and AWS Center for Quantum Computing developed a quantum algorithm for efficient wavepacket preparation that achieves superexponential efficiency gains. Their work demonstrated inelastic particle production in quantum simulations of one-dimensional Ising field theory, implemented on a 100-qubit IBM quantum computer, with results validated against classical matrix product state simulations after error mitigation.

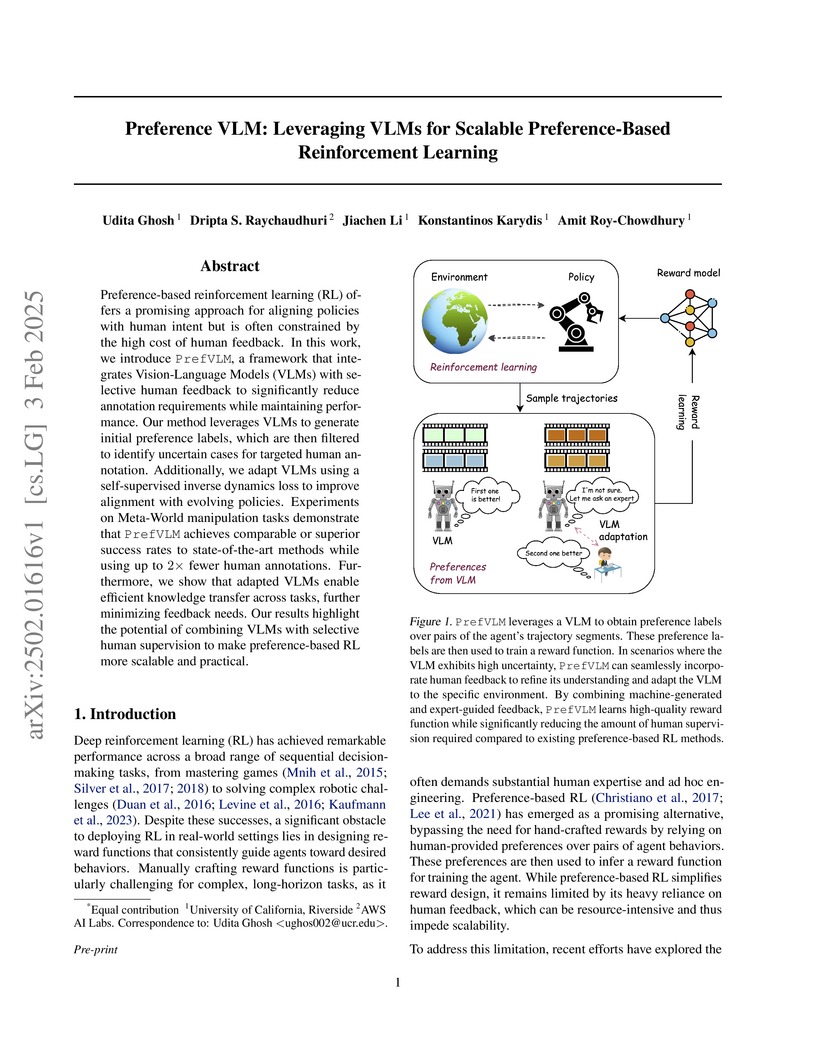

PrefVLM introduces a framework that significantly reduces the human feedback required for preference-based Reinforcement Learning by synergistically integrating Vision-Language Models with selective human feedback. The approach achieves comparable or better robotic task success rates using half the human annotations and demonstrates a fourfold reduction in feedback for knowledge transfer to new tasks.

We present an efficient machine learning (ML) algorithm for predicting any unknown quantum process E over n qubits. For a wide range of distributions D on arbitrary n-qubit states, we show that this ML algorithm can learn to predict any local property of the output from the unknown process~E, with a small average error over input states drawn from D. The ML algorithm is computationally efficient even when the unknown process is a quantum circuit with exponentially many gates. Our algorithm combines efficient procedures for learning properties of an unknown state and for learning a low-degree approximation to an unknown observable. The analysis hinges on proving new norm inequalities, including a quantum analogue of the classical Bohnenblust-Hille inequality, which we derive by giving an improved algorithm for optimizing local Hamiltonians. Numerical experiments on predicting quantum dynamics with evolution time up to 106 and system size up to 50 qubits corroborate our proof. Overall, our results highlight the potential for ML models to predict the output of complex quantum dynamics much faster than the time needed to run the process itself.

TASK2VEC proposes a method to create fixed-dimensional vectorial representations of machine learning tasks using the Fisher Information Matrix of a probe network. These task embeddings enable efficient meta-learning, showing strong correlation with semantic and taxonomic task similarities and demonstrating substantial improvements in selecting optimal pre-trained models for new tasks, particularly in low-data environments.

12 Nov 2025

Increasing the thinking budget of AI models can significantly improve accuracy, but not all questions warrant the same amount of reasoning. Users may prefer to allocate different amounts of reasoning effort depending on how they value output quality versus latency and cost. To leverage this tradeoff effectively, users need fine-grained control over the amount of thinking used for a particular query, but few approaches enable such control. Existing methods require users to specify the absolute number of desired tokens, but this requires knowing the difficulty of the problem beforehand to appropriately set the token budget for a query. To address these issues, we propose Adaptive Effort Control, a self-adaptive reinforcement learning method that trains models to use a user-specified fraction of tokens relative to the current average chain-of-thought length for each query. This approach eliminates dataset- and phase-specific tuning while producing better cost-accuracy tradeoff curves compared to standard methods. Users can dynamically adjust the cost-accuracy trade-off through a continuous effort parameter specified at inference time. We observe that the model automatically learns to allocate resources proportionally to the task difficulty and, across model scales ranging from 1.5B to 32B parameters, our approach enables a 2-3x reduction in chain-of-thought length while maintaining or improving performance relative to the base model used for RL training.

Covariate shifts are a common problem in predictive modeling on real-world problems. This paper proposes addressing the covariate shift problem by minimizing Maximum Mean Discrepancy (MMD) statistics between the training and test sets in either feature input space, feature representation space, or both. We designed three techniques that we call MMD Representation, MMD Mask, and MMD Hybrid to deal with the scenarios where only a distribution shift exists, only a missingness shift exists, or both types of shift exist, respectively. We find that integrating an MMD loss component helps models use the best features for generalization and avoid dangerous extrapolation as much as possible for each test sample. Models treated with this MMD approach show better performance, calibration, and extrapolation on the test set.

27 Jun 2025

Researchers advanced Quantum-Classical Auxiliary Field Quantum Monte Carlo, demonstrating its application to a nickel-catalyzed reaction using 24 qubits on an IonQ Forte quantum computer. The work substantially reduced classical post-processing time by orders of magnitude through algorithmic innovations and GPU acceleration, making the hybrid method viable for chemically relevant systems.

19 Mar 2025

Software updates, including bug repair and feature additions, are frequent in

modern applications but they often leave test suites outdated, resulting in

undetected bugs and increased chances of system failures. A recent study by

Meta revealed that 14%-22% of software failures stem from outdated tests that

fail to reflect changes in the codebase. This highlights the need to keep tests

in sync with code changes to ensure software reliability.

In this paper, we present UTFix, a novel approach for repairing unit tests

when their corresponding focal methods undergo changes. UTFix addresses two

critical issues: assertion failure and reduced code coverage caused by changes

in the focal method. Our approach leverages language models to repair unit

tests by providing contextual information such as static code slices, dynamic

code slices, and failure messages. We evaluate UTFix on our generated synthetic

benchmarks (Tool-Bench), and real-world benchmarks. Tool- Bench includes

diverse changes from popular open-source Python GitHub projects, where UTFix

successfully repaired 89.2% of assertion failures and achieved 100% code

coverage for 96 tests out of 369 tests. On the real-world benchmarks, UTFix

repairs 60% of assertion failures while achieving 100% code coverage for 19 out

of 30 unit tests. To the best of our knowledge, this is the first comprehensive

study focused on unit test in evolving Python projects. Our contributions

include the development of UTFix, the creation of Tool-Bench and real-world

benchmarks, and the demonstration of the effectiveness of LLM-based methods in

addressing unit test failures due to software evolution.

Humans acquire language through implicit learning, absorbing complex patterns

without explicit awareness. While LLMs demonstrate impressive linguistic

capabilities, it remains unclear whether they exhibit human-like pattern

recognition during in-context learning at inferencing level. We adapted three

classic artificial language learning experiments spanning morphology,

morphosyntax, and syntax to systematically evaluate implicit learning at

inferencing level in two state-of-the-art OpenAI models: gpt-4o and o3-mini.

Our results reveal linguistic domain-specific alignment between models and

human behaviors, o3-mini aligns better in morphology while both models align in

syntax.

We present Fortuna, an open-source library for uncertainty quantification in deep learning. Fortuna supports a range of calibration techniques, such as conformal prediction that can be applied to any trained neural network to generate reliable uncertainty estimates, and scalable Bayesian inference methods that can be applied to Flax-based deep neural networks trained from scratch for improved uncertainty quantification and accuracy. By providing a coherent framework for advanced uncertainty quantification methods, Fortuna simplifies the process of benchmarking and helps practitioners build robust AI systems.

13 Sep 2025

Universality and scaling laws are hallmarks of equilibrium phase transitions and critical phenomena. However, extending these concepts to non-equilibrium systems is an outstanding challenge. Despite recent progress in the study of dynamical phases, the universality classes and scaling laws for non-equilibrium phenomena are far less understood than those in equilibrium. In this work, using a trapped-ion quantum simulator with single-spin resolution, we investigate the non-equilibrium nature of critical fluctuations following a quantum quench to the critical point. We probe the scaling of spin fluctuations after a series of quenches to the critical Hamiltonian of a long-range Ising model. With systems of up to 50 spins, we show that the amplitude and timescale of the post-quench fluctuations scale with system size with distinct universal critical exponents, depending on the quench protocol. While a generic quench can lead to thermal critical behavior, we find that a second quench from one critical state to another (i.e.~a double quench) results in a new universal non-equilibrium behavior, identified by a set of critical exponents distinct from their equilibrium counterparts. Our results demonstrate the ability of quantum simulators to explore universal scaling beyond equilibrium.

There are no more papers matching your filters at the moment.